I'm looking into backing up our Nutanix Acropolis cluster to either Azure or AWS. What I'm curious about is the actual cost once you factor in not just the basic storage rate, but also PUT requests, data writes, and everything else that happens during a backup and a restore (rare as restores would be).

We have around 5 TB in total across all our VMs, with the daily snapshots averaging around 40 GB of changed data. We would want to retain maybe a month's worth of backups, with dailies and weeklies.

Is this enough data to do a decent estimate, or is it one of those 'try and see' scenarios? I'd be interested in hearing from anybody else who's utilizing it as a remote backup site and what kinds of costs they're seeing.
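For a rough first pass, the figures above can be plugged into a back-of-envelope model. The sketch below is only that: it assumes one full copy plus a month of daily incrementals, assumes uploads are split into fixed-size objects, and leaves out any compute instance the backup target might need. Every name in it (`BackupProfile`, `Rates`, `monthly_cost`, `restore_cost`) is made up for illustration, and the rates in the usage lines are placeholders, not real Azure or AWS prices.

```python
from dataclasses import dataclass


@dataclass
class BackupProfile:
    full_size_gb: float = 5_000.0   # ~5 TB of VMs (from the post, decimal GB)
    daily_change_gb: float = 40.0   # average daily changed data (from the post)
    retention_days: int = 30        # "maybe a month's worth" (from the post)


@dataclass
class Rates:
    # Hypothetical placeholders: fill these in from the provider's price list.
    storage_per_gb_month: float
    per_1000_put_requests: float
    egress_per_gb: float
    object_size_mb: float = 4.0     # assumed size of each uploaded object


def retained_gb(p: BackupProfile) -> float:
    """Steady-state footprint: one full copy plus the retained incrementals."""
    return p.full_size_gb + p.daily_change_gb * p.retention_days


def monthly_cost(p: BackupProfile, r: Rates) -> dict:
    """Recurring monthly cost, split into storage and upload requests."""
    uploaded_gb = p.daily_change_gb * 30            # new data sent per month
    puts = uploaded_gb * 1000 / r.object_size_mb    # one PUT per object
    storage = retained_gb(p) * r.storage_per_gb_month
    requests = puts / 1000 * r.per_1000_put_requests
    return {"storage": storage, "requests": requests, "total": storage + requests}


def restore_cost(restore_gb: float, r: Rates) -> float:
    """One-off cost of pulling data back out (egress only)."""
    return restore_gb * r.egress_per_gb


# Usage with placeholder rates (NOT real prices).
profile = BackupProfile()
rates = Rates(storage_per_gb_month=0.01, per_1000_put_requests=0.005, egress_per_gb=0.05)
print("Retained GB:", retained_gb(profile))
print("Monthly:", monthly_cost(profile, rates))
print("Full restore:", restore_cost(profile.full_size_gb, rates))
```

With these inputs the retained footprint works out to about 6.2 TB (5,000 GB plus 30 × 40 GB). Weeklies kept beyond the daily window, the initial seeding upload, and exchange-rate movement would all need to be added on top.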

Thanks!

Clint

Answer

Cost for backup to Azure or AWS


Best answer by AllBlack

I trialled this, but it was not the right solution for us, for several reasons. Initially it looked like we could make a cost saving on Commvault licensing, but the benefits disappeared as our backup set grew.

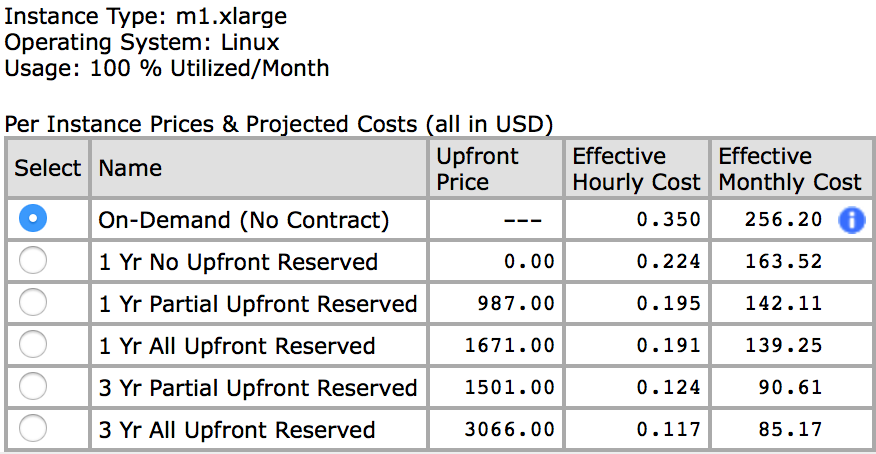

Once you reach a certain amount of data, I believe 10 TB, you will need to upgrade your virtual controller instance, which will double your compute cost. We also got stung by the exchange rate. Initially the Kiwi dollar was 1:1 to the US dollar, but that changed to 1:1.5, which drove up the cost quite a bit. In the end it was costing us about $1,200 a month, which was more expensive than our on-prem solution. I cannot recall the exact retention details we used.

The performance was not ideal either as we do not have AWS datacentres in New Zealand and the closest one is over in Australia.

I think Cloud Connect is a great solution for the right use case, and I will definitely investigate it again when it supports DR.


