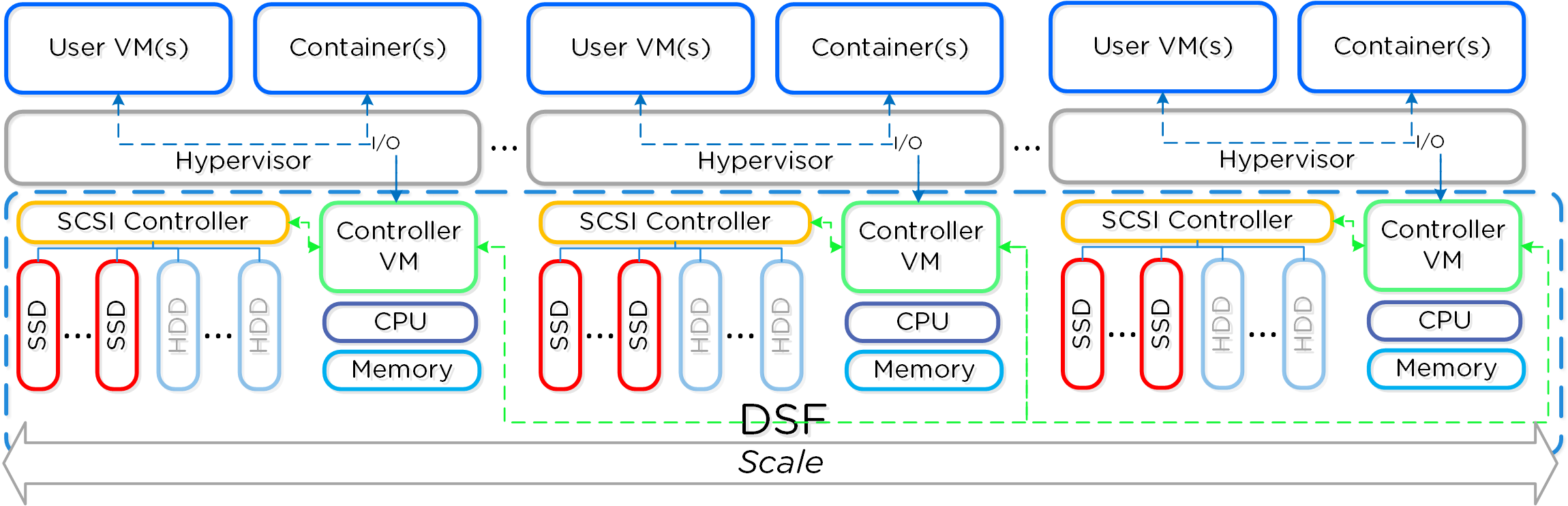

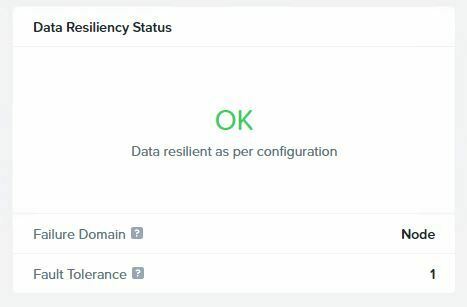

I am planning a Nutanix proof of concept (PoC) with the Community Edition (CE) and, if it is successful, to adopt Nutanix. The goal is a three- or four-node cluster with local storage.

How should I best prepare the three nodes? They have built-in hardware RAID controllers (Cisco UCS servers). I can install up to 8 disks per node, either all the same size or different sizes; they would probably be 1-2 TB SSDs. I can create one or more RAID volumes (RAID 1, 5 or 10). What would make sense from a performance and redundancy perspective?

I have read the CE Getting Started guide, which lists:

- Storage devices (max. 4 HDD/SSD)

- Cold tier (500 GB or greater, maximum 18 TB, e.g. 3 x 6 TB HDDs)

- Storage devices, hot tier flash (a single 200 GB SSD or greater)

- Hypervisor boot device (32 GB per node)
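To test a candidate per-node layout against the limits listed above, they can be encoded in a small check. This is a minimal sketch, not a Nutanix tool: the `Device` class, the role labels and `check_node` are hypothetical, sizes are in decimal GB, and it assumes the 4-device maximum applies to hot and cold data devices but not to the boot device.

```python
from dataclasses import dataclass
from typing import List

# Limits as listed in the CE Getting Started guide excerpt above (sizes in GB).
MAX_DATA_DEVICES = 4
MIN_HOT_SSD_GB = 200
MIN_COLD_GB = 500
MAX_COLD_GB = 18_000
MIN_BOOT_GB = 32


@dataclass
class Device:
    role: str  # hypothetical labels: "boot", "hot" or "cold"
    size_gb: int
    is_ssd: bool


def check_node(devices: List[Device]) -> List[str]:
    """Return a list of problems; an empty list means the layout fits the listed limits."""
    problems = []
    # Assumption: the 4-device maximum covers hot and cold data devices, not the boot device.
    data = [d for d in devices if d.role in ("hot", "cold")]
    if len(data) > MAX_DATA_DEVICES:
        problems.append(f"{len(data)} data devices, maximum is {MAX_DATA_DEVICES}")
    # At least one hot-tier device must be an SSD of the minimum size.
    if not any(d.role == "hot" and d.is_ssd and d.size_gb >= MIN_HOT_SSD_GB for d in devices):
        problems.append(f"no hot-tier SSD of at least {MIN_HOT_SSD_GB} GB")
    # The cold tier is checked as the sum of all cold devices.
    cold_gb = sum(d.size_gb for d in devices if d.role == "cold")
    if not MIN_COLD_GB <= cold_gb <= MAX_COLD_GB:
        problems.append(f"cold tier is {cold_gb} GB, expected {MIN_COLD_GB}-{MAX_COLD_GB} GB")
    if not any(d.role == "boot" and d.size_gb >= MIN_BOOT_GB for d in devices):
        problems.append(f"no boot device of at least {MIN_BOOT_GB} GB")
    return problems


# Example: one RAID set carved into three logical drives (sizes are illustrative).
node = [Device("boot", 32, True), Device("hot", 500, True), Device("cold", 4000, True)]
print(check_node(node))  # prints []
```

The check only covers the sizes and counts quoted from the guide; it says nothing about whether presenting RAID logical drives to CE is a supported or sensible design.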

Which storage needs to be fast? And should I let my hardware RAID controller create the disks?

Would it make sense to build a RAID 10 array on eight equal-sized disks and create logical drives matching each of the disk types above?
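For comparing the RAID options mentioned above, the usable capacity per node follows from the standard formulas for equal-sized disks. This is a rough sketch under stated assumptions: it ignores controller metadata and formatting overhead, treats RAID 1 as a two-disk mirror, and the function name `usable_tb` is hypothetical.

```python
def usable_tb(level: int, disks: int, disk_tb: float) -> float:
    """Usable capacity (TB) of one RAID set built from equal-sized disks.

    Standard textbook formulas; controller metadata and overhead are ignored.
    """
    if level == 1:
        # Two-disk mirror: one disk's worth of capacity, survives one failure.
        if disks != 2:
            raise ValueError("RAID 1 is modelled here as a 2-disk mirror")
        return disk_tb
    if level == 5:
        # One disk's worth of capacity goes to parity; survives one failure.
        if disks < 3:
            raise ValueError("RAID 5 needs at least 3 disks")
        return (disks - 1) * disk_tb
    if level == 10:
        # Striped mirror pairs: half the raw capacity; survives at least one failure.
        if disks < 4 or disks % 2:
            raise ValueError("RAID 10 needs an even number of disks, at least 4")
        return disks // 2 * disk_tb
    raise ValueError(f"unsupported RAID level {level}")


# Eight 2 TB SSDs per node, as in the question.
print(usable_tb(10, 8, 2.0))  # prints 8.0
print(usable_tb(5, 8, 2.0))   # prints 14.0
```

These figures are per node and before any replication the cluster layer itself applies on top of the local disks.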