Flow Virtual Networking Gateway VM High Availability

When you deploy your first cluster, you can now create two to four FGW VMs instead of the single FGW VM that was possible before. The added resiliency is a big deal, and getting traffic in and out of the cluster has also become a lot easier for Azure/Nutanix admins. With Azure Route Servers deployed alongside the BGP VMs, user-defined routes (UDRs) no longer need to be created. Any time manual operations can be removed from a design, it's a win for the customer.

Prior to AOS 6.7, if you deployed only one gateway VM, the NC2 portal redeployed a new FGW VM with an identical configuration when it detected that the original VM was down. Because this process invoked various Azure APIs, it took about 5 minutes before the new FGW VM was ready to forward traffic, which affected the north-south traffic flow.

To reduce this downtime, NC2 on Azure now runs the FGW VMs in an active-active configuration. The same setup lets you scale out flexibly when you need more traffic throughput.

The following workflow describes what happens when you turn off an FGW VM gracefully for planned events like updates.

- The NC2 portal disables the FGW VM.

- Prism Central removes the VM from the traffic path.

- The NC2 portal deletes the original VM and creates a new FGW VM with an identical configuration.

- The NC2 portal registers the new instance with Prism Central.

- Prism Central adds the new instance back to the traffic path.
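
To make the ordering of the planned-replacement steps concrete, here is a minimal Python sketch. It is only a model of the workflow, not NC2 or Prism Central code: `TrafficPath`, `replace_fgw_gracefully`, and the VM names are hypothetical. The point it illustrates is that the old VM leaves the traffic path before it is deleted, and the new VM joins only after it is registered, so the remaining FGW keeps forwarding throughout.

```python
from dataclasses import dataclass, field


@dataclass
class TrafficPath:
    """Hypothetical stand-in for the set of FGWs that Prism Central forwards through."""
    active: set = field(default_factory=set)


def replace_fgw_gracefully(path, old_vm, new_vm):
    """Walk the five planned-replacement steps in order and return them as a log."""
    log = [f"NC2 portal disables {old_vm}"]
    path.active.discard(old_vm)   # drained before anything is deleted
    log.append(f"Prism Central removes {old_vm} from the traffic path")
    log.append(f"NC2 portal deletes {old_vm} and creates {new_vm}")
    log.append(f"NC2 portal registers {new_vm} with Prism Central")
    path.active.add(new_vm)       # carries traffic only after registration
    log.append(f"Prism Central adds {new_vm} to the traffic path")
    return log


path = TrafficPath(active={"fgw-1", "fgw-2"})
steps = replace_fgw_gracefully(path, old_vm="fgw-1", new_vm="fgw-1b")
for step in steps:
    print(step)
print(sorted(path.active))  # fgw-2 stayed in the path the whole time
```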

For ungraceful or unplanned failures, the NC2 portal and Prism Central each have their own keepalive-based detection mechanism. Once a failure is detected, they take actions similar to those in the planned case.
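
Keepalive-based detection generally comes down to "no heartbeat for N intervals means down". The sketch below shows that idea only. The actual keepalive protocol, interval, and thresholds used by the NC2 portal and Prism Central are not covered here, so `is_down`, `interval_s`, and `missed_limit` are placeholder names and values.

```python
def is_down(last_seen, now, interval_s=1.0, missed_limit=3):
    """Treat a peer as down once more than `missed_limit` intervals pass in silence.

    The interval and limit are illustrative defaults, not NC2 settings.
    """
    return (now - last_seen) > interval_s * missed_limit


# Timestamps are in seconds; the last keepalive arrived at t=0.
print(is_down(last_seen=0.0, now=2.5))  # still inside the allowed window
print(is_down(last_seen=0.0, now=3.5))  # window exceeded, start replacement
```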

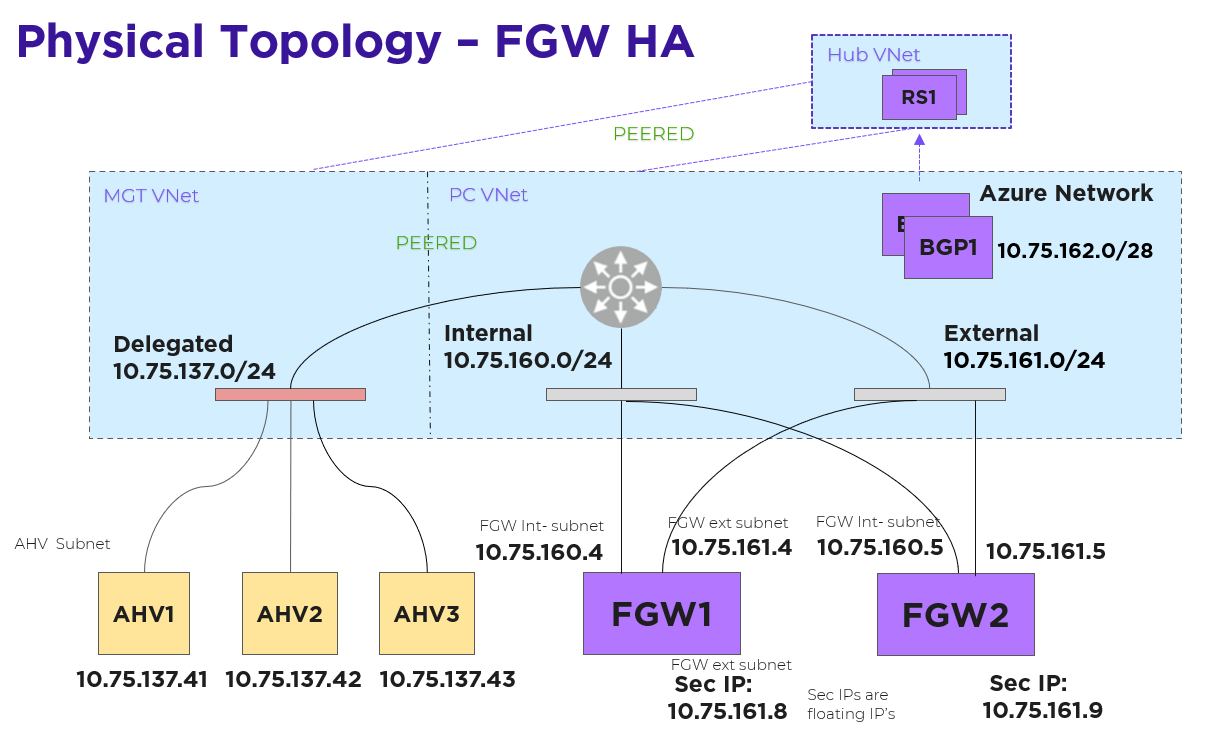

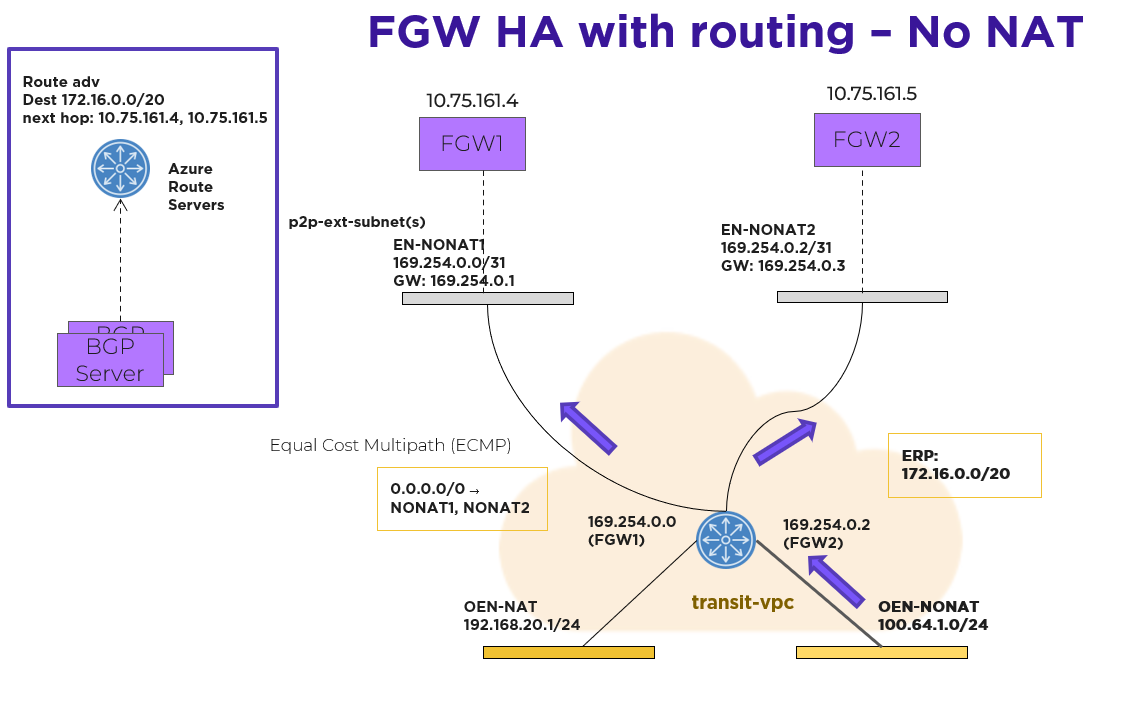

Each FGW instance has two NICs: one on the internal subnet that exchanges traffic with AHV and another on the external subnet that exchanges traffic with the Azure network. Each FGW instance registers with Prism Central, and Prism Central adds it to the traffic path. A point-to-point external subnet is created for each FGW and the transit VPC is attached to it, with the FGW instance hosting the corresponding logical-router gateway port. In the following diagram, EN-NONAT1 and EN-NONAT2 are the point-to-point external subnets.

Flow Virtual Networking Gateway Using the NoNAT Path

For northbound traffic, the transit VPC has an equal-cost multi-path (ECMP) default route, with all the point-to-point external subnets as possible next hops. In this case, the transit VPC distributes traffic across multiple external subnets hosted on different FGWs.
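
ECMP is usually implemented by hashing each flow so that all packets of one flow take the same next hop while different flows spread across the available hops. Here is a small sketch of that general technique. The hash inputs and algorithm that the transit VPC really uses aren't stated here, so `pick_next_hop` and the SHA-256 choice are assumptions for illustration; only the subnet names EN-NONAT1 and EN-NONAT2 come from the diagram.

```python
import hashlib


def pick_next_hop(flow, next_hops):
    """Pick a next hop by hashing the flow tuple (illustrative, not the Flow algorithm)."""
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(next_hops)
    return next_hops[index]


next_hops = ["EN-NONAT1", "EN-NONAT2"]

# (source IP, destination IP, protocol, source port, destination port); made-up values
flow = ("10.0.1.5", "203.0.113.10", 6, 49152, 443)

# The same flow always maps to the same external subnet.
print(pick_next_hop(flow, next_hops) == pick_next_hop(flow, next_hops))
```

Because the choice depends only on the flow tuple, packets within a flow stay in order on one FGW, and adding FGWs gives new flows more places to land.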

For southbound traffic using more than one FGW, a Border Gateway Protocol (BGP) gateway is deployed as an Azure native VM in the Prism Central VNet. Azure Route Servers deploy in the same Prism Central VNet. With an Azure Route Server, you can exchange routing information directly through BGP between any network virtual appliance that supports BGP and the Azure VNet, without manually configuring or maintaining route tables.

The BGP gateway peers with the Azure Route Servers. The BGP gateway advertises the externally routable IP addresses to the Azure Route Server, with each active FGW external IP address as the next hop. The externally routable IP addresses are the address range in your Flow Virtual Networking user VPCs that you want to advertise to the rest of the network. After you set the externally routable IP addresses on the user VPC in Prism Central, the Azure network distributes southbound packets across all the FGW instances.
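
One way to picture what the BGP gateway advertises is as a list of (prefix, next hop) pairs: every externally routable prefix is paired with every active FGW external IP address. The sketch below builds that list. It does not speak BGP or call any Nutanix or Azure API; `build_advertisements` and all addresses are made up for illustration.

```python
from ipaddress import ip_address, ip_network


def build_advertisements(erp_prefixes, fgw_external_ips):
    """Pair each externally routable prefix with each active FGW as a next hop."""
    routes = []
    for prefix in erp_prefixes:
        network = ip_network(prefix)          # validates the prefix
        for hop in fgw_external_ips:
            routes.append((str(network), str(ip_address(hop))))
    return routes


# Example values only: one user VPC range and two active FGWs.
routes = build_advertisements(["10.10.0.0/24"], ["10.0.2.4", "10.0.2.5"])
for prefix, next_hop in routes:
    print(f"{prefix} via {next_hop}")
```

When an FGW goes down, it simply drops out of `fgw_external_ips`, and the prefix is advertised with the remaining next hops.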

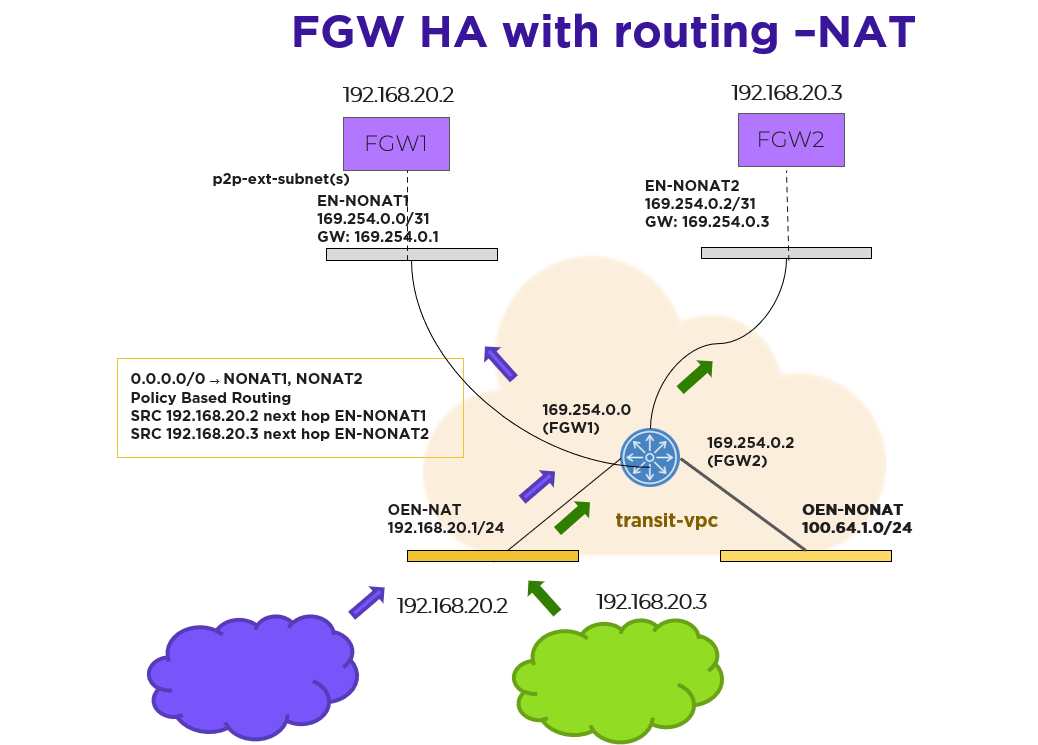

Prism Central determines which FGW instance should host a given NAT IP address and then configures each NAT IP address as a secondary IP address on each FGW. Packets sourced from those IP addresses can be forwarded through the corresponding FGW only. No-NAT traffic travels across all FGWs. NAT traffic originating from the Azure network and destined to a floating IP address goes to the FGW VM that owns the IP address because Azure knows which NIC currently has it.

Prism Central uses policy-based routing to support forwarding based on the source IP address matching the floating IP address. Flow Virtual Networking has built-in policy-based routing rules for custom forwarding that install routes automatically in the transit VPC.
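
The difference between the NAT and No-NAT paths can be summarized as a source-based lookup: if the packet's source address is a floating IP address, it must leave through the FGW that hosts that address; otherwise any FGW is a valid next hop. The following sketch models that decision. The `select_egress` function, the ownership table, and the addresses are hypothetical and do not reflect how Prism Central stores or programs these rules.

```python
def select_egress(src_ip, nat_owner, all_fgws):
    """Return the FGWs a packet may leave through, based on its source IP address."""
    owner = nat_owner.get(src_ip)
    if owner is not None:
        return [owner]           # NAT traffic: only the FGW holding the floating IP
    return list(all_fgws)        # No-NAT traffic: every FGW is a candidate


# Made-up mapping of floating IP address to the FGW that hosts it as a secondary IP.
nat_owner = {"10.0.2.100": "fgw-1", "10.0.2.101": "fgw-2"}
all_fgws = ["fgw-1", "fgw-2"]

print(select_egress("10.0.2.100", nat_owner, all_fgws))  # pinned to one FGW
print(select_egress("10.10.0.7", nat_owner, all_fgws))   # spread across all FGWs
```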

Any questions, ask away!