Recently, we upgraded AOS and AHV on a six-node NX-1065-G5 cluster. These nodes have only 1 Gbps interfaces, and the upgrade process got stuck many times while live-migrating guest VMs to other nodes as each node was put into maintenance mode. Tech support told us this happened because the newer AOS and AHV versions are designed to work at 10 Gbps. We know that is a best practice, but in our scenario it would mean a hardware upgrade.

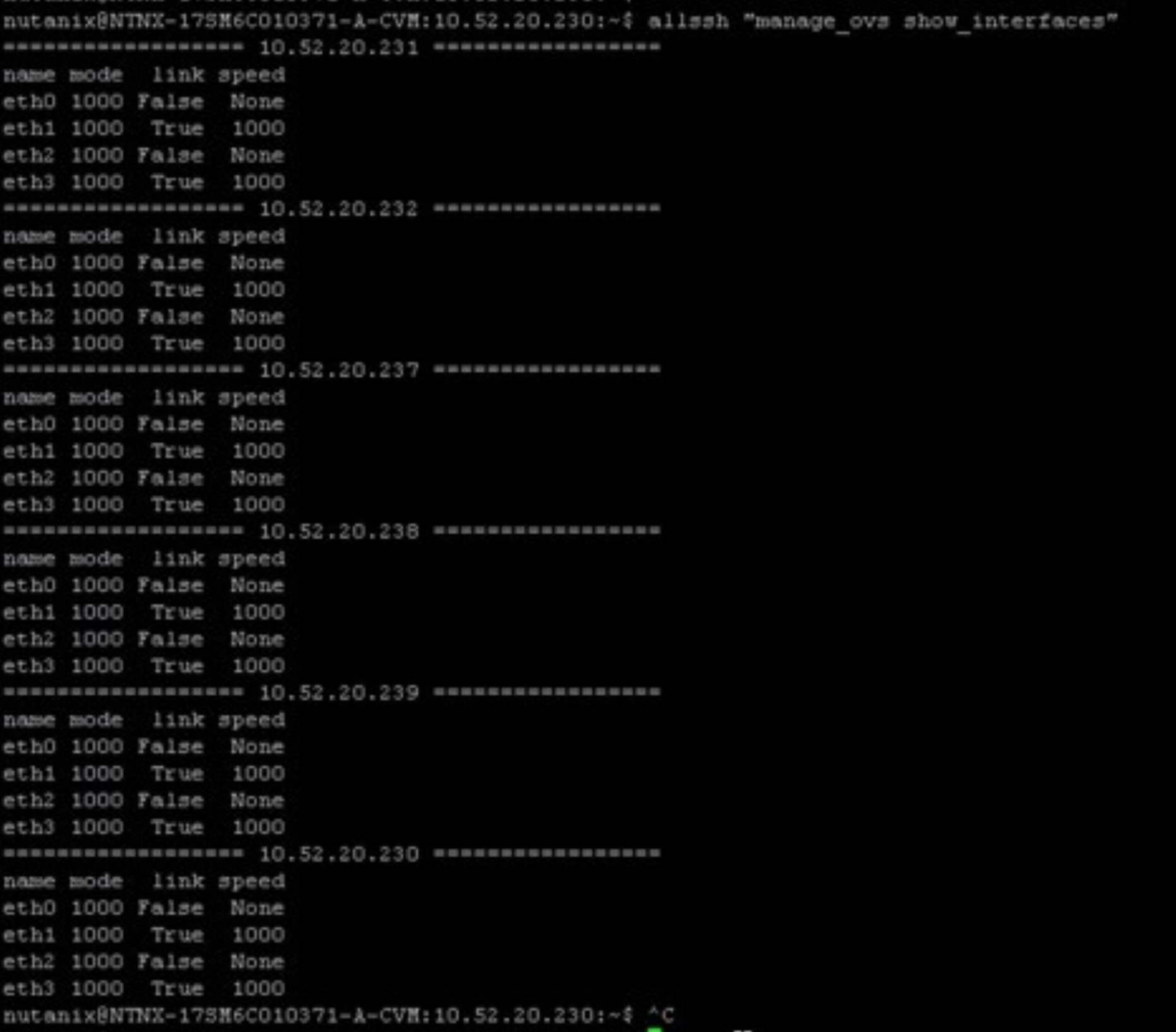

Question: I'm guessing that configuring the four uplink interfaces as a Balance-SLB bond may help, giving a total bandwidth of 4 Gbps under optimal conditions. Has anybody gone through this problem and applied this fix? Was it useful, or… not enough?
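To frame what "4 Gbps in optimal conditions" could mean in practice, here is a rough back-of-the-envelope sketch (not Nutanix code). It assumes a fixed 90% protocol efficiency, hypothetical interface names `eth0`..`eth3`, and a simplified stand-in for Balance-SLB. Open vSwitch's balance-slb places traffic on a member link by source MAC and VLAN, so this model hashes each source MAC to exactly one uplink. Under that assumption, a migration stream leaving the host from a single MAC would still top out at one 1 Gbps link, and only traffic from several distinct MACs could use more of the bond.

```python
# Hypothetical model, not Nutanix/OVS code: estimates RAM copy time at a given
# link speed, and how per-source-MAC placement (balance-slb style) limits
# the bandwidth available to a set of traffic sources.
import zlib

LINK_GBPS = 1.0  # speed of each uplink on these nodes
UPLINKS = ["eth0", "eth1", "eth2", "eth3"]  # hypothetical interface names


def transfer_seconds(ram_gib: float, gbps: float, efficiency: float = 0.9) -> float:
    """Seconds to copy ram_gib GiB once at gbps, assuming a fixed protocol efficiency."""
    bits = ram_gib * (1024 ** 3) * 8
    return bits / (gbps * 1e9 * efficiency)


def slb_uplink(src_mac: str) -> str:
    """Simplified stand-in for balance-slb: each source MAC maps to one uplink."""
    return UPLINKS[zlib.crc32(src_mac.encode()) % len(UPLINKS)]


def best_case_gbps(src_macs: list[str]) -> float:
    """Upper bound: one link's worth of bandwidth per distinct uplink in use."""
    return len({slb_uplink(mac) for mac in src_macs}) * LINK_GBPS


if __name__ == "__main__":
    # One migration stream from a single (hypothetical) host MAC.
    single = best_case_gbps(["50:6b:8d:00:00:01"])
    print(f"single-MAC stream: {single} Gbps")
    print(f"64 GiB at {single} Gbps: {transfer_seconds(64, single):.0f} s")
    print(f"64 GiB at 4 Gbps (ideal): {transfer_seconds(64, 4.0):.0f} s")
```

Real migrations also resend memory pages the guest dirties during the copy, so actual times would be longer than these single-pass figures.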

Thanks in advance