Hi,

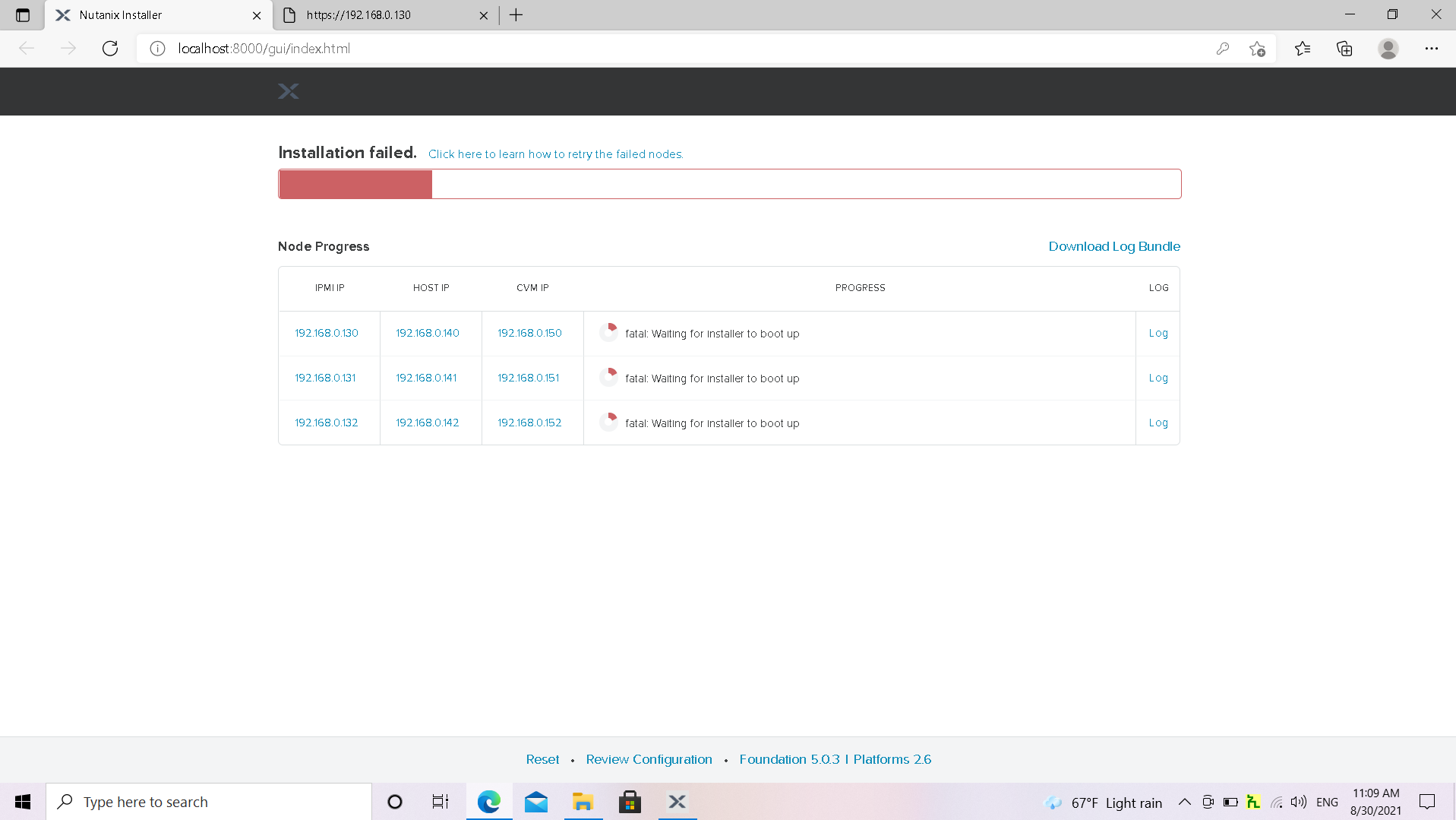

I am trying to set up a new Nutanix cluster on Cisco servers managed by UCSM, but I am getting a fatal error similar to the one below.

Foundation can ping both the UCSM IP and the CVM/AHV management gateway.

    Exception: Server (WZ) did not shut down in a timely manner
    2025-02-17 17:11:33,286Z imaging_step.py:123 DEBUG Setting state of <ImagingStepInitIPMI(<NodeConfig(10.x.x.x) @7190>) @77f0> from RUNNING to FAILED
    2025-02-17 17:11:33,288Z imaging_step.py:123 DEBUG Setting state of <ImagingStepRAIDCheckPhoenix(<NodeConfig(10.x.x.x)) @7190>) @78b0> from PENDING to NR
    2025-02-17 17:11:33,289Z imaging_step.py:182 WARNING Skipping <ImagingStepRAIDCheckPhoenix(<NodeConfig(10.x.x.x)) @7190>) @78b0> because dependencies not met, failed tasks: [<ImagingStepInitIPMI(<NodeConfig(10.x.x.x) @7190>) @77f0>]
    2025-02-17 17:11:33,290Z imaging_step.py:123 DEBUG Setting state of <ImagingStepPreInstall(<NodeConfig(10.x.x.x @7190>) @a280> from PENDING to NR
    2025-02-17 17:11:33,290Z imaging_step.py:182 WARNING Skipping <ImagingStepPreInstall(<NodeConfig(10.x.x.x) @7190>) @a280> because dependencies not met
    2025-02-17 17:11:33,291Z imaging_step.py:123 DEBUG Setting state of <ImagingStepPhoenix(<NodeConfig(10.x.x.x) @7190>) @a790> from PENDING to NR
    2025-02-17 17:11:33,292Z imaging_step.py:182 WARNING Skipping <ImagingStepPhoenix(<NodeConfig(10.x.x.x) @7190>) @a790> because dependencies not met
    2025-02-17 17:11:33,292Z imaging_step.py:123 DEBUG Setting state of <InstallHypervisorKVM(<NodeConfig(10.x.x.x) @7190>) @a9a0> from PENDING to NR
    2025-02-17 17:11:33,293Z imaging_step.py:182 WARNING Skipping <InstallHypervisorKVM(<NodeConfig(10.x.x.x) @7190>) @a9a0> because dependencies not met
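The log reads as a cascade: one imaging step fails and every step that depends on it is marked `NR` (not run) and skipped. A small helper like the one below can pull that chain out of a long Foundation log so the first real failure stands out. This is a minimal sketch, not a Nutanix tool: `summarize_steps` is a hypothetical helper, and it assumes the "Setting state of <Step(...)> from OLD to NEW" wording shown in the log above, which other Foundation versions may format differently.

```python
import re
from typing import Dict, List, Tuple

# Matches "Setting state of <StepName(...)> from OLD to NEW" entries.
# Assumption: the wording matches the Foundation log pasted in this post.
TRANSITION_RE = re.compile(
    r"Setting state of <(?P<step>\w+)\(.*? from (?P<old>\w+) to (?P<new>\w+)"
)


def summarize_steps(log_text: str) -> Tuple[List[str], List[str]]:
    """Return (failed_steps, not_run_steps) in first-seen order (hypothetical helper)."""
    final_state: Dict[str, str] = {}
    for m in TRANSITION_RE.finditer(log_text):
        # A step can change state several times; later entries overwrite earlier ones,
        # so each step ends up with its last recorded state.
        final_state[m.group("step")] = m.group("new")
    failed = [step for step, state in final_state.items() if state == "FAILED"]
    not_run = [step for step, state in final_state.items() if state == "NR"]
    return failed, not_run


if __name__ == "__main__":
    # Two entries copied from the log above.
    sample = (
        "2025-02-17 17:11:33,286Z imaging_step.py:123 DEBUG Setting state of "
        "<ImagingStepInitIPMI(<NodeConfig(10.x.x.x) @7190>) @77f0> from RUNNING to FAILED "
        "2025-02-17 17:11:33,288Z imaging_step.py:123 DEBUG Setting state of "
        "<ImagingStepRAIDCheckPhoenix(<NodeConfig(10.x.x.x)) @7190>) @78b0> from PENDING to NR"
    )
    failed, not_run = summarize_steps(sample)
    print("Failed:", failed)    # Failed: ['ImagingStepInitIPMI']
    print("Not run:", not_run)  # Not run: ['ImagingStepRAIDCheckPhoenix']
```

Run against the full log above, this should report `ImagingStepInitIPMI` as the only failed step, with the RAID check, pre-install, Phoenix and KVM install steps listed as not run. The skipped steps are only symptoms; the failure to look into is the one in the IPMI init step, where the server did not shut down in time.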