FIO Test


Has anyone tested Nutanix with fio rather than with the diagnostics VM?

When we test Nutanix, all the CPU cores on the host are loaded to almost 100%.

We started testing on 3.5.2.1 and some disks were marked offline; we brought them back online and upgraded to 3.5.3.1.

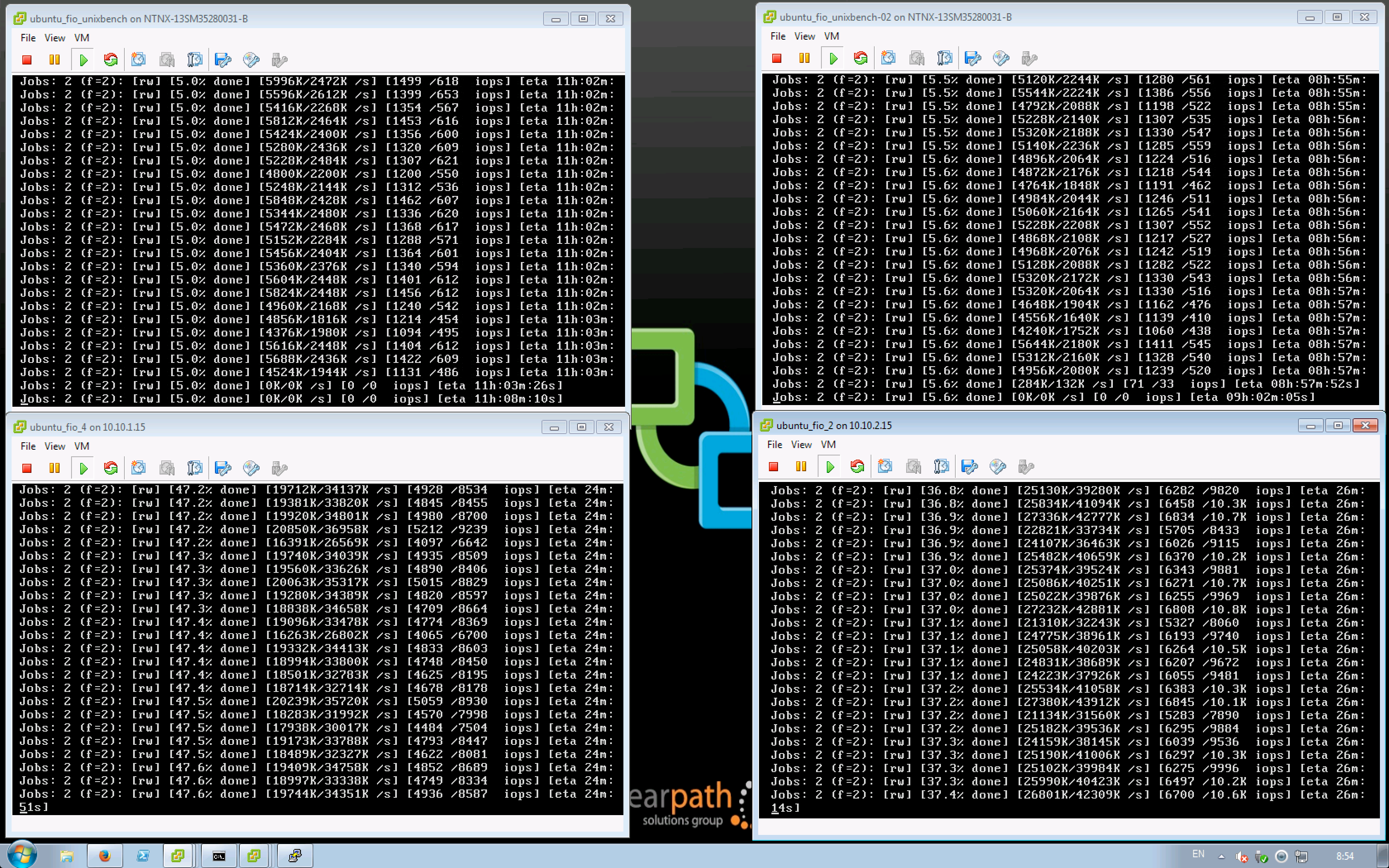

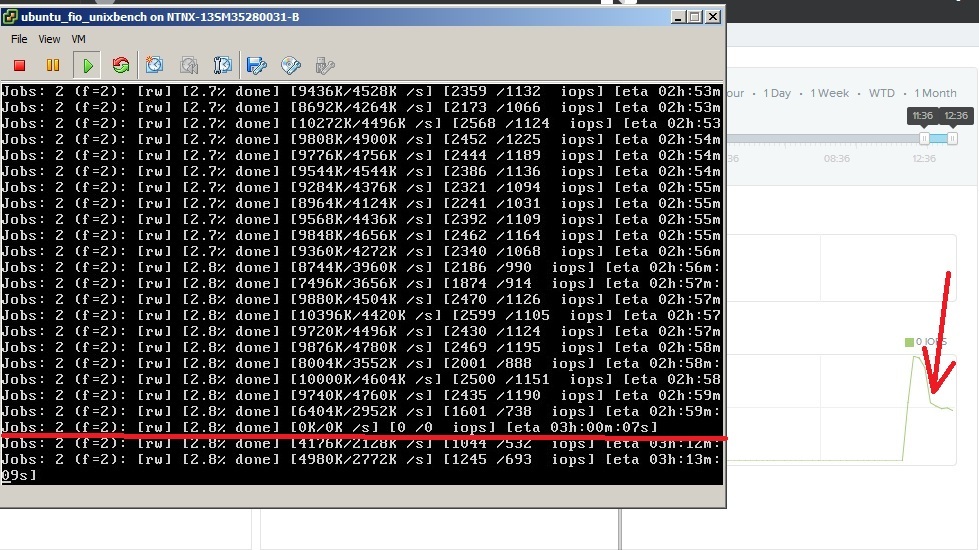

The first time, right after installation, the results were OK (13k IOPS for a 400 GB disk on a 3350), but the next day (with all the data cold) we got awful results, sometimes with the counters at zero, for example 0 read / 0 write. The Nutanix output is in the upper consoles on the slide.

Are these normal results?
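To put numbers on the "zero counters sometimes" symptom, fio can record per-interval IOPS itself instead of relying on console snapshots. A minimal sketch, assuming the job files are extended with `write_iops_log=<prefix>` and `log_avg_msec=1000` (neither option is in the configs in this post), so that fio writes a log typically named along the lines of `readtest_iops.1.log`. fio's logs are comma-separated, and the first three fields are the time in msec, the value, and the data direction (0 = read, 1 = write, 2 = trim). The helper `zero_iops_samples` is hypothetical and only looks at those three fields. Whether a window with no completed I/O is written as a 0 sample may depend on the fio version, so check this against your own logs.

```python
import csv
import io

# Data-direction codes used in fio's log files.
DIRECTIONS = {0: "read", 1: "write", 2: "trim"}


def zero_iops_samples(log_text: str) -> list[tuple[int, str]]:
    """Return (time_ms, direction) for every sample whose IOPS value is 0.

    Hypothetical helper: it reads only the first three comma-separated
    fields of each line (time in msec, value, data direction) and ignores
    any extra columns such as block size or offset.
    """
    zeros = []
    for row in csv.reader(io.StringIO(log_text)):
        if len(row) < 3:
            continue  # skip blank or truncated lines
        time_ms = int(row[0])   # int() tolerates the spaces fio puts after commas
        value = float(row[1])
        ddir = int(row[2])
        if value == 0:
            zeros.append((time_ms, DIRECTIONS.get(ddir, str(ddir))))
    return zeros
```

Usage: `zero_iops_samples(open("readtest_iops.1.log").read())` returns every interval in which the job logged zero IOPS, which can then be lined up against the times the console showed 0 read / 0 write.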

NCC checks report that everything is OK.

For the test on the slide we used a 100 GB disk, so the data should fit on one node.
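For scale, an IOPS figure can be converted into throughput and, via Little's law (in-flight I/Os = IOPS × mean latency), into an approximate mean latency per I/O. A minimal sketch, assuming the 13k IOPS figure came from the 4k, `iodepth=32` jobs in the configs and that fio kept all 32 I/Os in flight; the function names are hypothetical.

```python
import math


def throughput_mib_s(iops: float, block_size_bytes: int) -> float:
    """Bytes moved per second, expressed in MiB/s."""
    return iops * block_size_bytes / (1024 * 1024)


def mean_latency_ms(iops: float, queue_depth: int) -> float:
    """Little's law: in-flight I/Os = IOPS x mean latency, so latency = depth / IOPS.

    With I/Os outstanding but zero completions, the latency is unbounded.
    """
    if iops <= 0:
        return math.inf
    return queue_depth / iops * 1000.0


print(f"{throughput_mib_s(13_000, 4096):.1f} MiB/s")  # 50.8 MiB/s
print(f"{mean_latency_ms(13_000, 32):.2f} ms")  # 2.46 ms
print(mean_latency_ms(0, 32))  # inf
```

Under those assumptions, 13k IOPS at 4k is about 51 MiB/s at roughly 2.5 ms per I/O, and an interval reading 0 read / 0 write while the queue is still full means none of the outstanding I/Os completed during that interval.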

Fio configs:

    $ sudo fio read_config.ini
    root@debian:~# cat test_vmtools.ini
    [readtest]
    blocksize=4k
    filename=/dev/sdb
    rw=randread
    direct=1
    buffered=0
    ioengine=libaio
    iodepth=32

    $ sudo fio write_config.ini
    root@debian:~# cat test_vmtools.ini
    [writetest]
    blocksize=4k
    filename=/dev/sdb
    rw=randwrite
    direct=1
    buffered=0
    ioengine=libaio
    iodepth=32

    $ sudo fio rw_config.ini
    root@debian:~# cat test_vmtools.ini
    [readtest]
    blocksize=4k
    filename=/dev/sdb
    rw=randread
    direct=1
    buffered=0
    ioengine=libaio
    iodepth=32

    [writetest]
    blocksize=4k
    filename=/dev/sdb
    rw=randwrite
    direct=1
    buffered=0
    ioengine=libaio
    iodepth=32
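Because `rw_config.ini` defines two jobs and has no `stonewall`, fio runs `readtest` and `writetest` at the same time and reports each job separately. One way to compare runs from day to day is to have fio write machine-readable output, e.g. `sudo fio --output-format=json --output=rw.json rw_config.ini` (the file name `rw.json` is just an example), and summarize it. A minimal sketch, assuming fio 3.x JSON output, where each entry in `jobs` has `read` and `write` sections containing `iops` and `clat_ns.mean` (completion latency in nanoseconds); `summarize` is a hypothetical helper.

```python
import json


def summarize(fio_json_text: str) -> dict[str, dict[str, float]]:
    """Map each fio job name to its read/write IOPS and mean completion latency (ms)."""
    data = json.loads(fio_json_text)
    summary = {}
    for job in data["jobs"]:
        row = {}
        for ddir in ("read", "write"):
            stats = job[ddir]
            row[f"{ddir}_iops"] = stats["iops"]
            # clat_ns is reported in nanoseconds in fio 3.x JSON output
            row[f"{ddir}_clat_ms"] = stats["clat_ns"]["mean"] / 1e6
        summary[job["jobname"]] = row
    return summary
```

Usage: `print(summarize(open("rw.json").read()))`. Both directions are reported for every job, so a direction a job never exercised shows up as 0 instead of being silently missing.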

Could anyone help me with this test?

