When considering hyper-converged infrastructure (HCI) products, vendors, partners, value-added resellers and customers often compare multiple solutions, which of course makes perfect sense.

During these comparisons, marketing material (in many cases “Tick-Box” style slides) is compared and, worse still, taken at face value. This can lead to incorrect assumptions about critical architectural/sizing considerations such as capacity, resiliency and performance.

Let me give you a simple example:

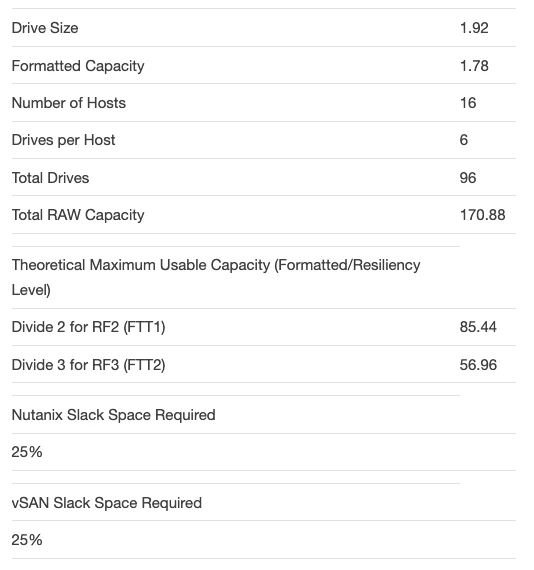

A customer chooses/needs 16 nodes to meet their requirements and selects the most popular form factor, 4 nodes per 2 rack units (4N2U), for its density, power efficiency and high performance.

The nodes are populated with 6 x 1.92TB drives (All-Flash) as the cost of flash was justified for their use case/s.

So we’re left with 16 hosts * 6 drives = 96 total drives

96 x 1.92TB drives, each with a formatted capacity of ~1.78TB = 170.88TB

Then the customer compares vSAN and Nutanix which both use replication as a primary form of data protection (as opposed to traditional RAID) with either two copies (RF2 in Nutanix, FTT1 in vSAN) or three copies (RF3 or FTT2).

They do some quick maths and conclude something like this:

170.88TB / 2 (RF2 or FTT1) = 85.44TB usable

170.88TB / 3 (RF3 or FTT2) = 56.96TB usable
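The quick maths above can be reproduced in a few lines. This is a minimal sketch of the naive “formatted capacity divided by copies” calculation only, using the drive counts and the ~1.78TB formatted size from the example; it deliberately ignores every overhead discussed in the rest of this post.

```python
# Naive usable-capacity estimate: formatted capacity divided by the number of copies.
# Values come from the example above; no file system, cache or slack overheads are applied.
NODES = 16
DRIVES_PER_NODE = 6
FORMATTED_TB_PER_DRIVE = 1.78  # ~1.78TB formatted per 1.92TB SSD


def naive_usable_tb(copies: int) -> float:
    """Return total formatted capacity / copies, rounded to 2 decimal places."""
    total_drives = NODES * DRIVES_PER_NODE
    formatted_tb = total_drives * FORMATTED_TB_PER_DRIVE
    return round(formatted_tb / copies, 2)


if __name__ == "__main__":
    total = NODES * DRIVES_PER_NODE
    print(f"Total drives: {total}")
    print(f"Formatted:    {round(total * FORMATTED_TB_PER_DRIVE, 2)}TB")
    print(f"RF2 / FTT1:   {naive_usable_tb(2)}TB")
    print(f"RF3 / FTT2:   {naive_usable_tb(3)}TB")
```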

The customer, partners or VARs could conclude that the usable capacity of both products is the same, or at least that the difference is insignificant for a purchasing or architectural decision, right?

If they did, they would be very mistaken for two critical reasons.

Reason 1: File System / Object Store Overheads

All storage products, new and old, including vSAN and Nutanix, have overheads. This is perfectly normal, but the overheads need to be understood.

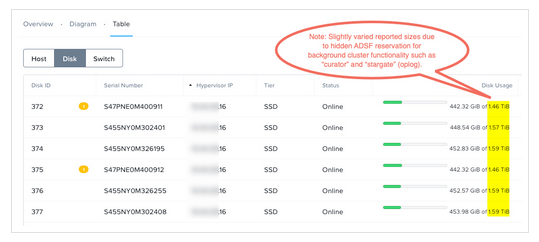

Starting with Nutanix ADSF, some capacity is reserved (and hidden) for background operations by “curator” such as disk balancing & garbage management and further capacity is reserved (and again, hidden) for the persistent write buffer known as the “Oplog”.

vSAN uses one SSD per “disk group” for cache, and none of that SSD’s capacity counts towards usable capacity, much like the Nutanix oplog capacity is not reported as usable capacity.

VMware recommends using multiple disk groups per node, so for this example, we’ll assume 2 disk groups and therefore two SSDs reserved for cache.

Important to note: In an all-flash vSAN configuration, 100% of the cache device is dedicated to write-buffering but only up to a maximum of 800GB (previously 600 GB) as of vSAN 6.7 u1.

Reference: Understanding vSAN Architecture: Disk Groups (April 2019)
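To put a number on the cache reservation for the example cluster, here is a quick sketch assuming 2 disk groups per node (one cache SSD each, as above) and ~1.78TB formatted per drive. It simply counts the whole cache SSD as excluded from usable capacity and does not model the write-buffer limit.

```python
# Cluster-wide split between cache and capacity tiers for the 16 node example.
# Assumption: 2 disk groups per node, one cache SSD per disk group.
NODES = 16
DRIVES_PER_NODE = 6
DISK_GROUPS_PER_NODE = 2       # one cache SSD per disk group
FORMATTED_TB_PER_DRIVE = 1.78  # ~1.78TB formatted per 1.92TB SSD

cache_drives = NODES * DISK_GROUPS_PER_NODE
capacity_drives = NODES * (DRIVES_PER_NODE - DISK_GROUPS_PER_NODE)
cache_tb = cache_drives * FORMATTED_TB_PER_DRIVE        # excluded from usable capacity
capacity_tb = capacity_drives * FORMATTED_TB_PER_DRIVE  # what slack and FTT are applied to

print(f"Cache tier:    {cache_drives} drives, {cache_tb:.2f}TB")
print(f"Capacity tier: {capacity_drives} drives, {capacity_tb:.2f}TB")
print(f"Share of formatted capacity in cache: {cache_tb / (cache_tb + capacity_tb):.1%}")
```

With this layout a third of the formatted flash (32 of 96 drives) never contributes to usable capacity.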

Reason 2: Slack space requirements

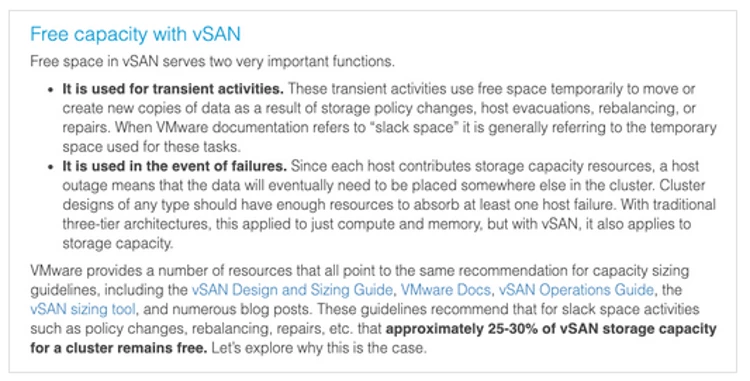

In a recent article titled “Revisiting vSAN’s Free Capacity Recommendations”, VMware remained consistent with its recommendation of the past few years: 25-30% “slack space” for all vSAN clusters, regardless of size.

As a rule of thumb, I don’t actually mind it: if that rule were applied to Nutanix, customers would have very conservatively sized (arguably significantly oversized) environments. The downside, however, is that the cost would be significantly higher and in many cases may not be commercially viable. More importantly, the solution would likely vastly exceed the requirements needed to deliver the desired business outcome/s.

Side Note: One of the core values of (Nutanix) HCI is the ability to start small and scale as required. vSAN’s slack space requirements constrain this value somewhat. Nutanix, on the other hand, scales out extremely well for capacity, performance (linearly) and resiliency, as shown in this post:

Scale out performance testing with Nutanix Storage Only Nodes

Here is an extract from the above-quoted article regarding Free/Slack space:

With Nutanix ADSF, the capacity for transient activities (such as disk balancing and garbage management) is already factored in before usable capacity is reported. This ensures that capacity is not inadvertently used, which could otherwise lead to platform instability, performance issues and ultimately downtime. The capacity required for background activities is also much lower due to the 1MB/4MB granularity of Nutanix ADSF, compared with vSAN objects of up to 255GB. Such large objects can fragment capacity, leaving some of it unusable or forcing manual operations to defragment the environment to reclaim it.

VMware is correct to call out the requirement to reserve capacity to tolerate failures. N+1 on a 4 node cluster would justify the 25% recommendation, but as soon as we scale to a very common 8 node cluster, 25% (the lower end of the recommended range) is already N+2, which in many environments is unnecessary.

If we scaled to a 16 node cluster, which is also very common (at least for Nutanix environments) then 25% would be reserving N+4. This value could be justified if the customer wanted to protect against the loss of an entire block (4N2U enclosure), but again would be excessive for many if not most environments.

At a 32 node cluster scale, the minimum VMware recommendation would have you reserving the equivalent of N+8. Giving VMware the benefit of the doubt, this level of reservation could be argued for the fairly small percentage of customers who want rack awareness to protect against full rack failures within a cluster. However, I’d suggest other options, such as 4 separate 8 node clusters each sized for N+1, would achieve higher resiliency/availability with half as many nodes (4) reserved for slack/HA/failures.
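The N+x equivalents above follow from multiplying the cluster size by the slack percentage. A minimal sketch, assuming every node contributes the same capacity (the function names are illustrative, not from either product):

```python
def slack_as_nodes(cluster_nodes: int, slack_fraction: float) -> float:
    """Express a percentage-based slack reservation as a number of spare nodes.

    Assumes every node contributes the same capacity.
    """
    return cluster_nodes * slack_fraction


def slack_fraction_for(cluster_nodes: int, spare_nodes: int) -> float:
    """Slack percentage needed to reserve `spare_nodes` nodes' worth of capacity (N+spare_nodes)."""
    return spare_nodes / cluster_nodes


for nodes in (4, 8, 16, 32):
    print(f"{nodes:>2} nodes at 25% slack = N+{slack_as_nodes(nodes, 0.25):g}")

# One 32 node cluster at 25% vs. four 8 node clusters, each sized for N+1.
print(f"One 32 node cluster reserves {slack_as_nodes(32, 0.25):g} nodes")
print(f"Four 8 node clusters at N+1 reserve {4 * slack_as_nodes(8, slack_fraction_for(8, 1)):g} nodes")
```

The same helper shows why 12.5% is N+1 on 8 nodes but N+2 on 16 nodes: a fixed percentage buys more spare nodes as the cluster grows.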

But let’s get back to the usable capacity of both products and compare the real values to the high level assumption I see made in the field:

Formatted capacity of 170.88TB / 2 (RF2 or FTT1) = 85.44TB usable

Formatted capacity of 170.88TB / 3 (RF3 or FTT2) = 56.96TB usable

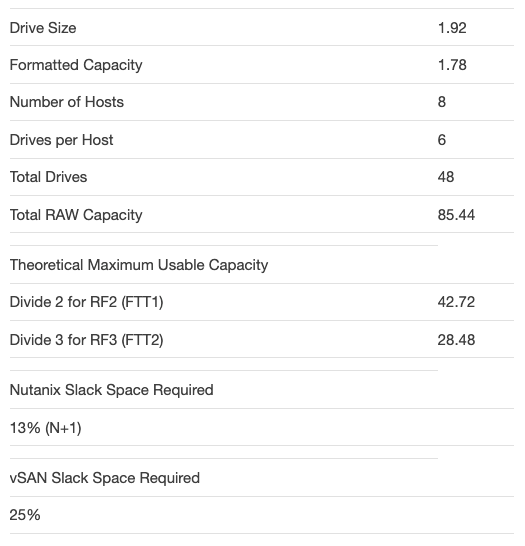

Let’s start with a 16 node cluster

Note: For all calculations, the minimum VMware recommendation of 25% is used, not the 30% value which is the high end of the recommended range.

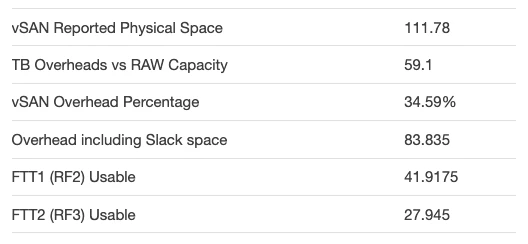

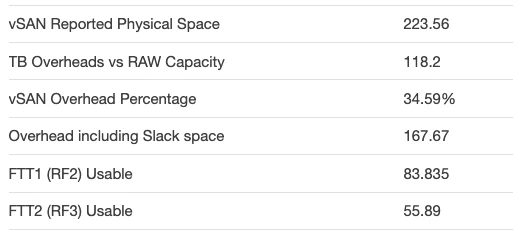

Now let’s look at the usable capacity for vSAN:

Below is a screenshot from a vSAN cluster with this exact configuration.

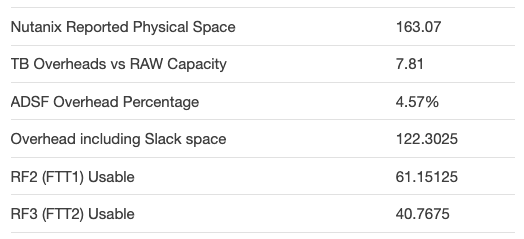

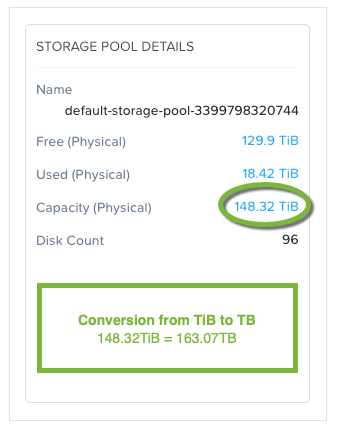

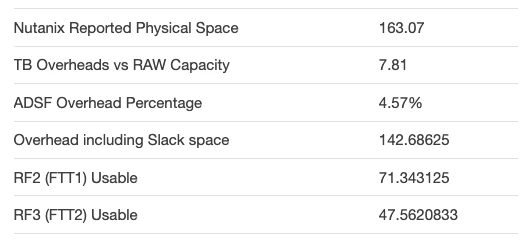

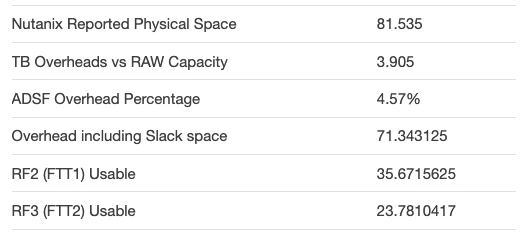

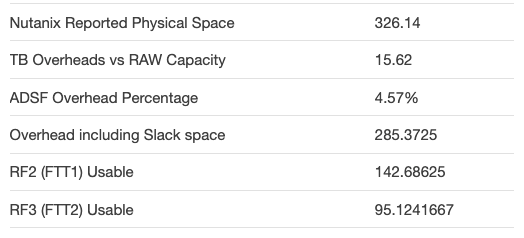
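The vSAN side of the 16 node comparison can be approximated as follows: only capacity-tier drives count, the 25% slack recommendation is removed, and the remainder is divided by the number of copies. This is a simplified model, assuming 2 disk groups per node (so 4 capacity drives), ~1.78TB formatted per drive and no on-disk format or metadata overhead, so the figures vSAN itself reports may differ.

```python
def vsan_usable_tb(nodes: int, drives_per_node: int, disk_groups: int,
                   formatted_tb: float, slack: float, copies: int) -> float:
    """Simplified vSAN usable capacity: capacity-tier drives only, minus slack, divided by copies.

    Cache SSDs (one per disk group) are excluded entirely; on-disk format overhead is ignored.
    """
    capacity_drives = nodes * (drives_per_node - disk_groups)
    capacity_tb = capacity_drives * formatted_tb
    return capacity_tb * (1 - slack) / copies


for label, copies in (("FTT1", 2), ("FTT2", 3)):
    print(f"{label}: ~{vsan_usable_tb(16, 6, 2, 1.78, 0.25, copies):.2f}TB usable")
```

In this model the FTT1 result is exactly half of the naive 85.44TB estimate, before any Nutanix figures enter the picture.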

Next let’s compare the usable capacity for Nutanix ADSF:

And the screenshot of the Nutanix environment:

We don’t need to be mathematicians to see that the difference in usable capacity between the two products is huge, but for those who are interested, vSAN has 31.45% LOWER usable capacity than Nutanix for the exact same hardware and slack space reservation (despite that reservation not being required for Nutanix).

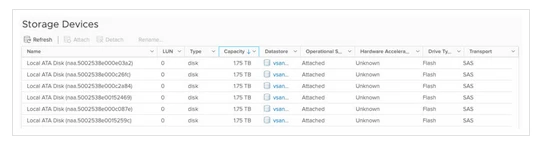

The below shows the Storage devices on one of the vSAN hosts:

The below shows the same storage summary for one of the Nutanix hosts:

What happens if we lower the slack space for Nutanix ADSF?

If we set the slack space for Nutanix to what I would typically recommend for a 16 node cluster (N+2, or 12.5%), the calculations (below) become significantly more favourable to Nutanix, with usable capacity increasing from 61TB to 71TB.
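The jump from 61TB to 71TB can be checked by undoing the old slack percentage and applying the new one. A minimal sketch, assuming the Nutanix slack reservation is a simple percentage of otherwise-usable capacity and that all other overheads stay unchanged:

```python
def rescale_for_slack(usable_tb: float, old_slack: float, new_slack: float) -> float:
    """Re-estimate usable capacity when only the slack percentage changes.

    Assumes slack is applied multiplicatively after all other overheads.
    """
    pre_slack_tb = usable_tb / (1 - old_slack)
    return pre_slack_tb * (1 - new_slack)


print(f"{rescale_for_slack(61, 0.25, 0.125):.1f}TB")
```

The result (~71.2TB) lines up with the 71TB figure above.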

For those who think I’ve cherry-picked a cluster size to inflate the advantage for Nutanix, let’s review 4 and 8 node clusters with the same hardware.

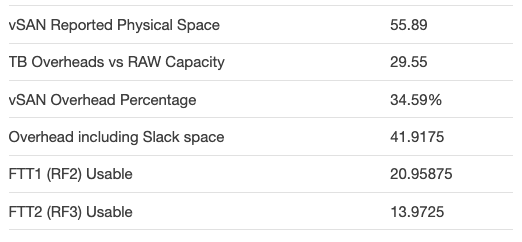

8 Node Cluster

vSAN 8 node cluster summary:

Nutanix 8 node cluster summary:

Again we see vSAN has LOWER usable capacity than Nutanix, but in this case it’s 41.25% lower for the exact same hardware. Note that the Nutanix slack space reservation has been reduced to 12.5% (N+1 for an 8 node cluster).
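The 41.25% figure is consistent with the 31.45% gap measured at equal slack once only the Nutanix reservation changes. Since the 4 and 16 node comparisons at 25% slack both show 31.45%, the ratio does not appear to depend on node count. A sketch of the arithmetic, assuming vSAN stays at 25% slack, Nutanix moves from 25% to 12.5%, and Nutanix’s other overheads are unchanged (the normalised values are illustrative):

```python
def percent_lower(vsan_tb: float, nutanix_tb: float) -> float:
    """How much lower vSAN usable capacity is than Nutanix, as a percentage of Nutanix."""
    return (nutanix_tb - vsan_tb) / nutanix_tb * 100


# Normalise Nutanix usable capacity to 1.0 at 25% slack; vSAN is then 31.45% lower.
nutanix_25 = 1.0
vsan = nutanix_25 * (1 - 0.3145)

# Changing only the Nutanix slack from 25% to 12.5% scales its usable capacity by 0.875 / 0.75.
nutanix_125 = nutanix_25 / (1 - 0.25) * (1 - 0.125)

print(f"vSAN is {percent_lower(vsan, nutanix_125):.1f}% lower")
```

The small difference from 41.25% comes from the rounding of the 31.45% input.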

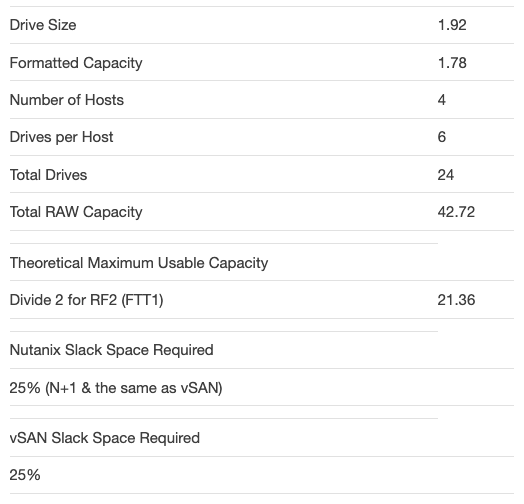

Next up, 4 node cluster comparison:

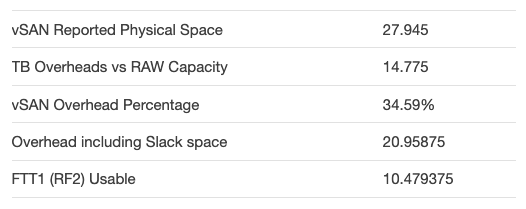

Now let’s look at the usable capacity for vSAN:

Note: 4 node clusters do not support RF3/FTT2 for vSAN or Nutanix.

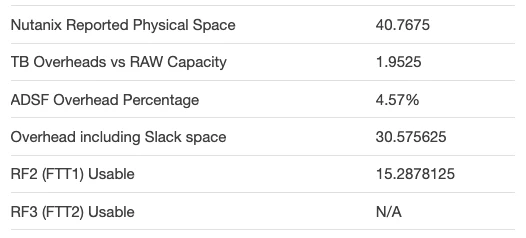

Next up the usable capacity for Nutanix ADSF:

In this scenario, the “slack space” is 25% for both products, as both need to maintain at least N+1 to tolerate a single node failure. This is the best-case scenario for vSAN, yet we still see 31.45% lower usable capacity for vSAN compared to Nutanix, again for the exact same hardware and resiliency level.

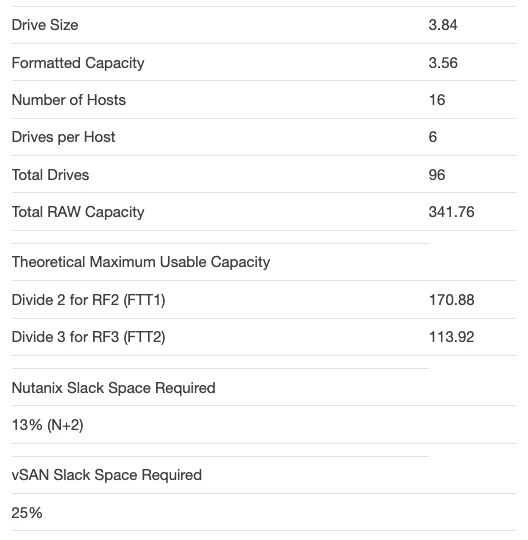

But what if we use larger drives?

vSAN results using 3.84TB SSDs:

Nutanix ADSF results using 3.84TB SSDs:

We still have a 41.25% advantage for Nutanix ADSF. The reason is simple: vSAN’s underlying architecture requires a large amount of slack space, and its cache drives do not contribute to usable capacity.
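Why doesn’t a larger drive change the gap? In the simplified vSAN model used here, both the cache reservation (a fixed share of the drives) and the slack reservation (a fixed percentage) scale linearly with drive size, so drive size cancels out. This sketch assumes 16 nodes, 6 drives in 2 disk groups per node, 25% slack and FTT1, and uses nominal drive sizes precisely because the size drops out of the ratio.

```python
def vsan_usable_tb(nodes: int, drives_per_node: int, disk_groups: int,
                   drive_tb: float, slack: float, copies: int) -> float:
    """Simplified vSAN model: cache SSDs excluded, slack removed, divided by copies."""
    return nodes * (drives_per_node - disk_groups) * drive_tb * (1 - slack) / copies


def naive_usable_tb(nodes: int, drives_per_node: int, drive_tb: float, copies: int) -> float:
    """Naive estimate: every drive counted, divided by copies."""
    return nodes * drives_per_node * drive_tb / copies


def vsan_share_of_naive(drive_tb: float) -> float:
    """Fraction of the naive estimate kept by the simplified vSAN model."""
    return vsan_usable_tb(16, 6, 2, drive_tb, 0.25, 2) / naive_usable_tb(16, 6, drive_tb, 2)


for size_tb in (1.92, 3.84):
    print(f"{size_tb}TB drives: vSAN keeps {vsan_share_of_naive(size_tb):.0%} of the naive estimate")
```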

Is there a way to improve usable capacity for vSAN?

In short, yes. I always try to be impartial and present both sides, so here are the options that can help increase usable capacity for vSAN.

1) Use a single disk group so only one SSD’s worth of capacity is “lost” to cache

While this will increase the number of “capacity” drives in a disk group and therefore the usable capacity, it comes at a significant cost: performance suffers, as only a single drive is used for write/read cache. Even if performance isn’t a factor, resiliency is also impacted, as a single cache SSD failure takes the entire disk group offline, including all of its usable capacity. If only a single disk group exists, that cache SSD failure will also result in a node failure.
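The trade-off can be quantified per node. This sketch assumes 6 drives per node, one cache SSD per disk group, capacity drives spread evenly across groups, and that a cache SSD failure takes its whole disk group offline (as described above); the function name is illustrative.

```python
def disk_group_tradeoff(drives_per_node: int, disk_groups: int) -> tuple[int, float]:
    """Return (capacity drives per node, share of node capacity offline after one cache SSD failure).

    Assumes one cache SSD per disk group and capacity drives spread evenly across groups.
    """
    capacity_drives = drives_per_node - disk_groups
    offline_share = 1 / disk_groups  # the failed cache SSD's whole group goes offline
    return capacity_drives, offline_share


for dgs in (1, 2):
    cap, offline = disk_group_tradeoff(6, dgs)
    print(f"{dgs} disk group(s): {cap} capacity drives, "
          f"{offline:.0%} of node capacity offline per cache failure")
```

Moving from two disk groups to one raises capacity drives from 4 to 5 (25% more), but a single cache SSD failure then takes all of the node’s capacity offline.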

Note: All SSDs in a Nutanix node are used for the persistent write buffer (oplog), which is striped across all drives by default for optimal performance and to avoid creating hot spots on a small number of drives. ADSF therefore avoids the capacity issues of using flash purely for cache.

2) Use more SSDs and/or higher capacity SSDs per disk group

In this example we have two disk groups, each with 1 cache drive and 2 capacity drives, following VMware’s recommendations for performance. If the nodes supported more drives, you could have up to 7 capacity drives per disk group.

In this case, performance is again reduced, as you have a smaller ratio of cache to capacity. The more critical issue is that resiliency is again impacted, as the entire disk group goes offline if its single cache drive fails. Very large disk groups significantly increase the risk to resiliency, the performance impact of a failure and the time to restore resiliency, because more data needs to be re-replicated.

With Nutanix ADSF, any single SSD failure is just that: “a single SSD failure”. As such, only that one drive’s data needs to be re-protected, as opposed to an entire disk group. The exception is a node with a single SSD, where that SSD failing results in a node failure. This is one reason why I don’t recommend single SSD systems regardless of platform, and why I don’t like vSAN’s disk group architecture.
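To illustrate how disk group size affects re-protection, this sketch estimates the data that must be re-protected after one SSD fails. It assumes, hypothetically, that every capacity drive holds the same amount of data (1TB here); real values depend on utilisation and data placement. The 1 to 7 range reflects the per-disk-group limit mentioned above.

```python
DATA_PER_DRIVE_TB = 1.0  # hypothetical amount of data on each drive, for illustration only


def vsan_cache_failure_tb(capacity_drives_in_group: int) -> float:
    """Data to re-protect when a cache SSD fails: its whole disk group goes offline."""
    if not 1 <= capacity_drives_in_group <= 7:
        raise ValueError("a vSAN disk group holds 1 to 7 capacity drives")
    return capacity_drives_in_group * DATA_PER_DRIVE_TB


def single_drive_failure_tb() -> float:
    """Data to re-protect when a single SSD fails on Nutanix ADSF: only that drive's data."""
    return DATA_PER_DRIVE_TB


for drives in (2, 5, 7):
    print(f"{drives} capacity drives per group: {vsan_cache_failure_tb(drives):.0f}TB "
          f"vs {single_drive_failure_tb():.0f}TB for a single drive failure")
```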

3) Use Compression / Deduplication

This may help increase storage efficiency but as both products support these capabilities, doing so won’t significantly change the difference in usable capacity between the solutions.

However, if the vSAN solution is hybrid, vSAN does not support Compression and Deduplication, so Nutanix would have a further and significant advantage in usable capacity.

4) Use Erasure Coding / RAID5 or 6

Using vSAN’s implementation of RAID will increase usable capacity, but as with Compression and Deduplication, Nutanix also supports Erasure Coding, so it won’t bridge the gap in usable capacity between the two products.

Nutanix was in fact first to the HCI market with Erasure Coding (in 2015), and as both products offer it, the capacity savings are again a wash. vSAN RAID also works inline, which sounds great, but in reality it has a direct impact on front end IO performance. Even when the dataset is not suitable for RAID (performance-wise, due to overwrites), RAID will still be applied, at an unnecessary cost in host resources and front end performance.
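The capacity argument rests on simple overhead ratios. This sketch compares full copies with an erasure-coded stripe of data and parity blocks; the 3+1 and 4+2 stripe widths are illustrative parameters, not a statement of the exact stripe sizes either product uses.

```python
def replication_overhead(copies: int) -> float:
    """Formatted capacity consumed per unit of data with N full copies."""
    return float(copies)


def erasure_coding_overhead(data_blocks: int, parity_blocks: int) -> float:
    """Formatted capacity consumed per unit of data for a data+parity stripe."""
    return (data_blocks + parity_blocks) / data_blocks


schemes = {
    "2 copies (RF2/FTT1)": replication_overhead(2),
    "3 copies (RF3/FTT2)": replication_overhead(3),
    "3+1 stripe (illustrative)": erasure_coding_overhead(3, 1),
    "4+2 stripe (illustrative)": erasure_coding_overhead(4, 2),
}
for name, factor in schemes.items():
    print(f"{name}: {factor:.2f}x, {1 / factor:.0%} of capacity usable")
```

If both products apply a similar ratio to whatever capacity remains after their own overheads, erasure coding shrinks both results proportionally rather than closing the gap.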

Note: RAID configurations on vSAN are limited to All-Flash configurations only, despite Erasure Coding / RAID being very attractive for infrequently modified or accessed data such as WORM (Write Once Read Many), snapshots, backups, archives and object stores. This data performs very well on Nutanix hybrid configurations, which do support Erasure Coding (EC-X).

With that said, check out my Nutanix – Erasure Coding (EC-X) Deep Dive, which highlights the unique advantages of Nutanix’s implementation of Erasure Coding. It avoids the front end IO impact and only applies to data which is suitable for Erasure Coding (RAID), as opposed to an all-or-nothing approach such as vSAN’s implementation. This means Nutanix delivers maximum capacity efficiency with no direct front end IO impact in the write path. It also means lower host resource (or CVM vCPU) usage, by avoiding applying Erasure Coding to data which isn’t suitable.

Cluster fragmentation & the impact on usable capacity:

As vSAN uses an Object Store with objects of up to 255GB each, it’s significantly less granular, and writes/reads typically go to a subset of nodes regardless of the placement of the VM. This can result in some capacity being unusable when objects are larger than the available capacity on any two nodes in the cluster.

With Nutanix ADSF, all free capacity can be used regardless of where it is, and it can be written to locally or remotely until fewer than 2 nodes have enough free space for the next 1MB extent to be written (3 nodes in the case of RF3/FTT2).

This is achieved via Nutanix’s intelligent replica placement, which places write IO (typically only the replicas, unless the local node is full) based on storage capacity and performance.
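A toy model of the placement constraint described above: a chunk with N copies can only be written if at least N different nodes each have room for one copy. The free-space figures are hypothetical, and real placement logic in both products is more involved; the model only illustrates why coarse granularity can strand free capacity.

```python
from typing import Sequence


def can_place(free_gb_per_node: Sequence[float], chunk_gb: float, copies: int) -> bool:
    """True if at least `copies` distinct nodes each have room for one copy of the chunk."""
    nodes_with_room = sum(1 for free in free_gb_per_node if free >= chunk_gb)
    return nodes_with_room >= copies


free = [300.0, 240.0, 230.0, 220.0]  # hypothetical free GB per node (990GB in total)
print(can_place(free, 255.0, 2))     # 255GB object, 2 copies
print(can_place(free, 1 / 1024, 2))  # ~1MB extent, 2 copies
```

Here 990GB is free in total, more than the 510GB two copies of a 255GB object would need, yet only one node can hold a 255GB copy, whereas a 1MB extent fits on every node.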

This capacity efficiency advantage will vary based on the workload type. For VDI its advantage will be minimal; for mixed workloads, however, it’s typically significant, as the differing sizes of VMs supported by a cluster would otherwise lead to fragmented storage.

For more info see: Nutanix Resiliency – Part 6 – Write I/O during CVM maintenance or failures which covers this concept and failure scenarios in more depth.

Summary:

When comparing Nutanix usable capacity with vSAN, as a rule of thumb you will typically need to ensure any vSAN configuration has 20-45% more physical capacity, depending on your disk group configuration, to cater for the higher overheads discussed in this post. This gives a fair comparison of usable capacity, not factoring in data reduction, which can be considered a wash (even).

What this demonstrates is that Nutanix ADSF (Acropolis Distributed Storage Fabric) has significant performance, scalability and resiliency advantages over vSAN. Nutanix’s simplicity also means customers (and architects) don’t need to understand the complexities of disk groups, while out of the box it caters for background functions (curator/stargate) without those needing to be factored into “free/slack space”.

Regardless of whether you choose Nutanix, vSAN or any other HCI or traditional storage product/solution, please always consider the resiliency/availability requirements and design accordingly, as usable capacity is only one (albeit very important) factor to consider.

Next up let’s compare Deduplication & Compression for Nutanix ADSF & VMware vSAN!

Thanks to Gary Little (@garyjlittle) for his assistance in creating this article. His blog, https://www.n0derunner.com/, features articles focusing on performance, scalability and enterprise applications. Gary has been and remains a highly respected senior member of the Nutanix Performance and Engineering Team, and I highly recommend you follow him.

Check out some of Gary’s posts below:

1. SQL Server uses only one NUMA Node with HammerDB

2. SuperScalin’: How I learned to stop worrying and love SQL Server on Nutanix

3. How scalable is my Nutanix cluster really?

This article was originally published at http://www.joshodgers.com/2020/01/30/usable-capacity-comparison-nutanix-adsf-vs-vmware-vsan/

2020 Nutanix, Inc. All rights reserved. Nutanix, the Nutanix logo and all Nutanix product, feature and service names mentioned herein are registered trademarks or trademarks of Nutanix, Inc. in the United States and other countries. All other brand names mentioned herein are for identification purposes only and may be the trademarks of their respective holder(s). This post may contain links to external websites that are not part of Nutanix.com. Nutanix does not control these sites and disclaims all responsibility for the content or accuracy of any external site. Our decision to link to an external site should not be considered an endorsement of any content on such a site.
