Hello,

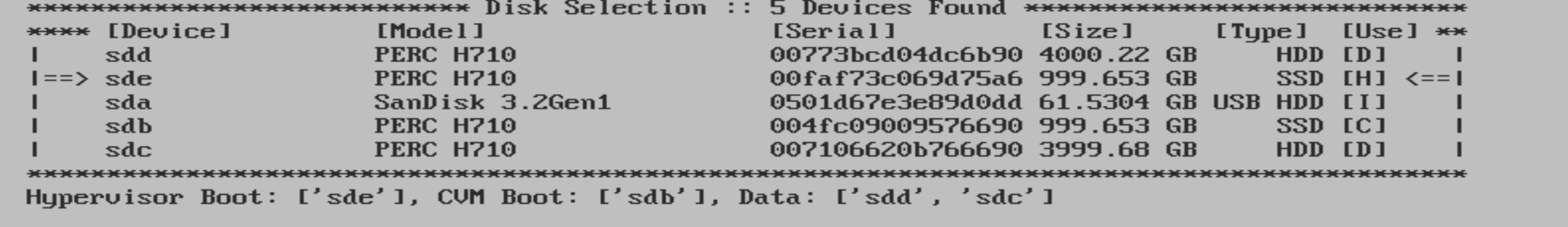

I'm at my wits' end. My server has two disks, plus the USB stick with the ISO.

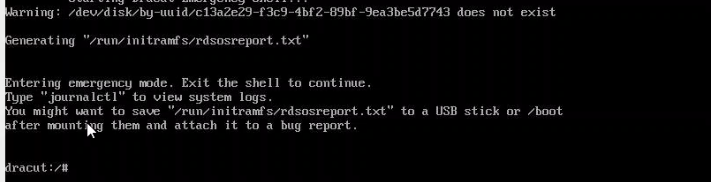

When I try to install Nutanix, I get to the disk selection screen. But even after I assign the disks to their respective letters, only the Phoenix ISO is mounted at the end.

The installation then aborts and takes me back to the disk selection.

If I enter H, C, or D, the installer tells me either that I need SSDs or that I must have two disks, which I do.

Do I need ESXi on the server? I assume not, since I'm using the AHV version.