Over the last couple of days at Nutanix I’ve been involved in testing the new vGPU features of vSphere 6 in combination with the new NVIDIA GRID drivers, so that vGPU will also be available for desktops delivered via Horizon 6 on vSphere 6.

During this initial phase I worked together with Martijn Bosschaart to get this installation covered, and after an evening of configuring and troubleshooting I thought it would be a good idea to write a blog post on this upcoming feature.

What is vGPU and why do I need it?

vGPU profiles deliver dedicated graphics memory by leveraging the vGPU Manager to assign the configured memory to each desktop. Each VDI instance gets a pre-determined amount of resources based on its needs or, better yet, based on the needs of its applications.

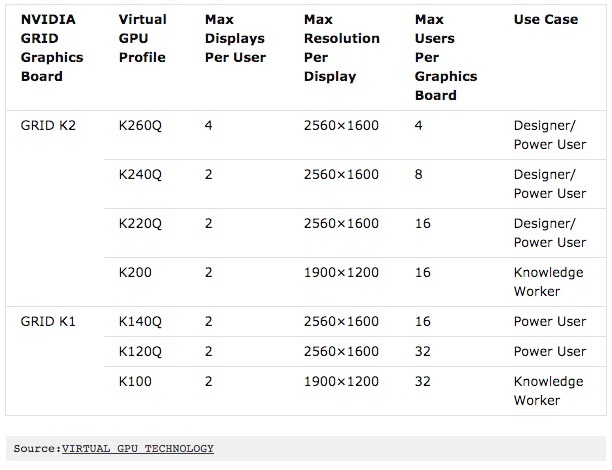

By using the vGPU profiles from the vGPU Manager you can share each physical GPU. For example, an NVIDIA GRID K1 card has 4 physical GPUs, each of which can host up to 8 users, resulting in up to 32 users with a vGPU-enabled desktop per K1 card.
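The density arithmetic above can be sketched in a few lines of Python. This is only an illustration: the function name `max_vgpu_desktops` is made up for this post, and the numbers are the K1 figures quoted above (4 physical GPUs, up to 8 users per GPU).

```python
def max_vgpu_desktops(physical_gpus: int, users_per_gpu: int) -> int:
    """Upper bound on vGPU-enabled desktops for one card.

    Hypothetical helper: it simply multiplies the number of physical GPUs
    on the card by the number of users a single GPU can host.
    """
    if physical_gpus < 0 or users_per_gpu < 0:
        raise ValueError("counts must be non-negative")
    return physical_gpus * users_per_gpu


# GRID K1 figures from the text: 4 physical GPUs, up to 8 users each.
k1_desktops = max_vgpu_desktops(physical_gpus=4, users_per_gpu=8)
print(f"K1: up to {k1_desktops} vGPU desktops per card")
```

A heavier profile that allows fewer users per physical GPU lowers the result accordingly, which is the performance versus scalability trade-off discussed in this post.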

Next to the NVIDIA GRID K1 card there’s the K2 card, which has 2 high-end Kepler GPUs instead of 4 entry-level Kepler GPUs and delivers up to 3072 CUDA cores compared to the 768 CUDA cores of the K1.

vGPU can also deliver adjusted performance based on profiles. A vGPU profile is configured per VM, so you can balance performance against scalability. Looking at the available profiles, the less powerful profiles can be delivered to more desktops per card than the high-powered ones.

All the GPU profiles with a Q go through the same certification process as the workstation processors, meaning that these profiles should perform at least as well as the current NVIDIA workstation processors.

Both the K100 and K200 profiles are designed for knowledge workers; they deliver less graphical performance but enhance scalability. Typical use cases for these profiles are much more commonplace than you would expect, and with the growing graphical richness of applications the usage of vGPU will become more of a commodity as well. Just take a look at Office 2013, Flash/HTML, or Windows 7/8.1 or even 10 with Aero and all the other eye candy that can be enabled. These are all good use cases for the K100/K200 vGPU profiles.

The installation

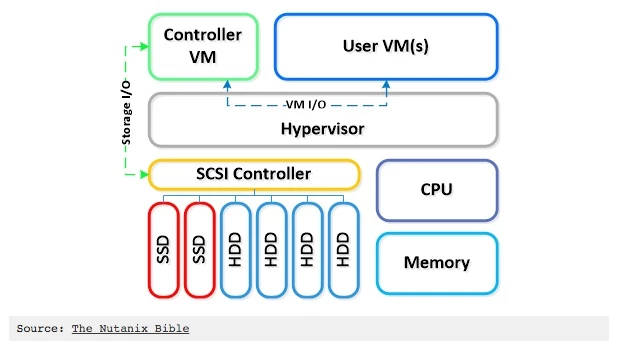

Our systems rely on the CVM. The Nutanix CVM (Controller VM) runs the Nutanix software and serves all of the I/O operations for the hypervisor and all VMs running on that host. For the Nutanix units running VMware vSphere, the SCSI controller, which manages the SSD and HDD devices, is passed directly to the CVM using VMDirectPath (Intel VT-d). In the case of Hyper-V the storage devices are passed through to the CVM. Below is an example of what a typical node logically looks like:

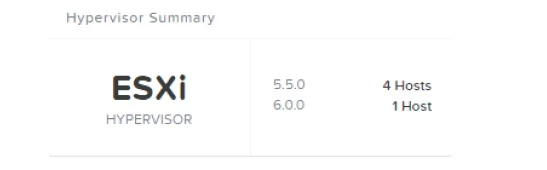

It turned out that our CVM played very nicely with the upgrade from vSphere 5.5 to vSphere 6, as it worked exactly as planned (don’t you just love a Software Defined Datacenter?), and I saw the following configuration in our test cluster:

The installation went without any problems, so we could follow the (very detailed) guide to set up the rest of the environment. Setting up vCenter 6 and Horizon 6.0.1 was fairly easy and well described. When we got down to assigning the vGPU profiles to the VM, I was able to see the profiles, but starting the VM produced an error message.

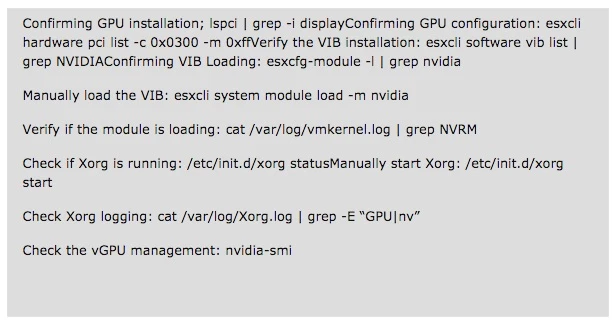

Useful commands for troubleshooting

In my case the block I was testing on had been used for other testing purposes, so when I tried running Xorg it would not start. I checked the vGPU configuration and noticed that the cards were configured for pciPassthru, which was why Xorg wasn’t running. To disable pciPassthru:

In the vSphere client, select the host and navigate to Configuration tab > Advanced Settings. Click Configure Passthrough in the top left. Deselect at least one core to remove it from being passed through.

After that I had a different error in the logs of my VM:

vmiop_log: error: Initialization: VGX not supported with ECC Enabled.

With some help from Google I found the following explanation: Virtual GPU is not currently supported with ECC active. GRID K2 cards ship with ECC disabled by default, but ECC may subsequently be enabled using nvidia-smi.
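To spot this kind of message quickly, the VM log can be filtered for `vmiop_log` error lines. The sketch below is only an illustration: the helper name `find_vmiop_errors` is made up, it assumes the error lines look like the one quoted above, and the second sample line is invented purely to show a non-matching entry.

```python
import re

# Matches lines such as:
#   vmiop_log: error: Initialization: VGX not supported with ECC Enabled.
VMIOP_ERROR = re.compile(r"vmiop_log:\s*error:\s*(?P<message>.+)")


def find_vmiop_errors(lines):
    """Return the message part of every vmiop_log error line (hypothetical helper)."""
    errors = []
    for line in lines:
        match = VMIOP_ERROR.search(line)
        if match:
            errors.append(match.group("message").strip())
    return errors


sample_log = [
    "vmiop_log: error: Initialization: VGX not supported with ECC Enabled.",
    "some unrelated log line",  # invented filler line, not from a real log
]

for message in find_vmiop_errors(sample_log):
    print(message)
```

To run it against a real log, open the file and pass the file object in place of `sample_log`; the path depends on where the VM's log lives in your environment.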

Use nvidia-smi to list the status of all GPUs, and check whether ECC is noted as enabled on the GRID K2 GPUs. Change the ECC status to off on a specific GPU by executing nvidia-smi -i <id> -e 0, where <id> is the index of the GPU as reported by nvidia-smi.
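If several GPUs need the same change, the command can be generated per GPU index. The following is a minimal sketch, not a tested procedure: the helper names are made up for this post, it only uses the `-i` and `-e 0` options mentioned above, and it defaults to a dry run that prints the command instead of executing it.

```python
import subprocess
from typing import List


def disable_ecc_command(gpu_index: int) -> List[str]:
    """Build the nvidia-smi call that switches ECC off for one GPU.

    gpu_index is the index of the GPU as reported by nvidia-smi.
    """
    if gpu_index < 0:
        raise ValueError("gpu_index must be non-negative")
    return ["nvidia-smi", "-i", str(gpu_index), "-e", "0"]


def disable_ecc(gpu_index: int, dry_run: bool = True) -> str:
    """Return the command line; run it only when dry_run is False."""
    command = disable_ecc_command(gpu_index)
    if not dry_run:
        # Requires nvidia-smi on the PATH of the host where this runs.
        subprocess.run(command, check=True)
    return " ".join(command)


# Dry run for the two GPUs of a K2 card (indices 0 and 1 are assumed here).
for index in (0, 1):
    print(disable_ecc(index))
```

Check the indices against the nvidia-smi listing first, since they depend on how many cards are in the host. An ECC change typically only takes effect after a reboot, so plan for one.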

After this change I was able to boot my VM, create a Master Image and deploy the Horizon desktops with a vGPU Profile via Horizon 6:

https://www.youtube.com/watch?v=UsDry2JY4pg

Note 1: I was performing remote testing with limited bandwidth; as you can see, the desktop did up to 66 FPS.

Note 2: Please be aware that although this testing was done on a Nutanix-powered platform, vSphere 6 is not supported by Nutanix at this moment. Support will follow as soon as possible.

This is a repost from the blog My Virtual Vision by Kees Baggerman
