What is Helm?

Helm is a tool that simplifies how your IT operations team installs and manages Kubernetes applications. Think of it as a package manager like those on Linux (apt/yum), but for Kubernetes.

What is a Helm chart?

A Helm chart is a package that contains a Kubernetes application, similar to a .deb or .rpm package on your preferred Linux distribution.

What are the Helm use cases?

If you are wondering why using Helm is a good idea, here are some of the reasons:

- Most popular software has a Helm chart to facilitate its installation on Kubernetes

- If you are looking to share your own Kubernetes application, a Helm chart is the way to go

- Consistency across deployments is key, and charts make deployments of your applications reproducible

- Kubernetes applications are a group of manifest files, and a chart helps you manage the complexity of those files

- Helm lets you manage releases of your Helm packages

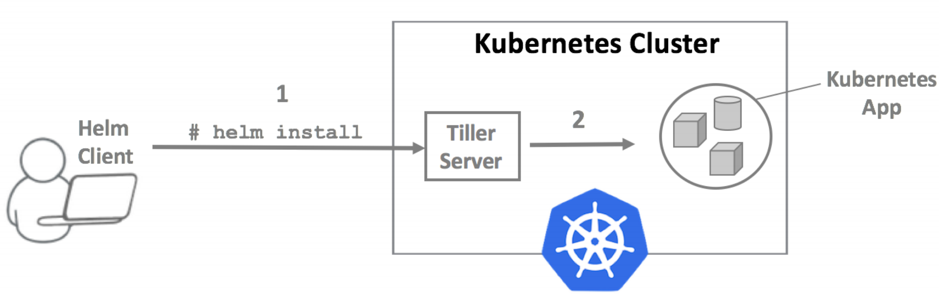

Helm architecture

From the Helm website:

- Helm has two parts: a client (helm) and a server (tiller)

- Tiller runs inside of your Kubernetes cluster, and manages releases (installations) of your charts.

- Helm runs on your laptop, CI/CD, or wherever you want it to run.

- Charts are Helm packages that contain at least two things:

* A description of the package (Chart.yaml)

* One or more templates, which contain Kubernetes manifest files

- Charts can be stored on disk, or fetched from remote chart repositories (like Debian or RedHat packages)
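
To make the chart structure described above concrete, here is a minimal sketch that scaffolds a chart by hand. The chart name `hello-chart`, the ConfigMap template and its contents are hypothetical, chosen only for illustration; real charts usually carry more files.

```shell
#!/bin/sh
# Scaffold a minimal, hypothetical chart called "hello-chart" in a temp directory.
CHART_DIR="$(mktemp -d)/hello-chart"
mkdir -p "$CHART_DIR/templates"

# Chart.yaml: the description of the package (name and version are required).
cat > "$CHART_DIR/Chart.yaml" <<'EOF'
apiVersion: v1
name: hello-chart
version: 0.1.0
description: A minimal example chart
EOF

# templates/: Kubernetes manifests, optionally with Go template placeholders.
cat > "$CHART_DIR/templates/configmap.yaml" <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ .Release.Name }}-greeting
data:
  message: "hello from Helm"
EOF

# Show the resulting layout.
find "$CHART_DIR" -type f | sort
```

If Helm is installed, running `helm lint` against that directory should tell you whether the chart is well formed.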

Security concern around Tiller (Helm Server)

Tiller is responsible for managing the releases of your Kubernetes applications. In a typical setup Tiller runs with cluster-wide administrative privileges, which poses a serious risk: anyone who can reach it could gain unauthorised access to your cluster.

This security risk is addressed in the upcoming Helm v3 release. Until the new version is out, there is a way to mitigate it on Helm v2.

Tillerless to the rescue!

Tillerless is a Helm plug-in developed by Rimas Mocevicius at JFrog. In a nutshell, the plug-in runs Tiller locally on your workstation or in CI/CD pipelines, so you don’t need to install Tiller on your Kubernetes clusters.

Installation

Before you start with the Tillerless Helm installation, make sure kubectl and the kubeconfig file are properly configured and working on your machine. The Helm client depends on the kubeconfig file.
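
A quick preflight check can catch a missing prerequisite early. The sketch below is only one way to do it: the function name `missing_prereqs` is hypothetical, and it assumes the kubeconfig lives at `$KUBECONFIG` or, failing that, at the usual `~/.kube/config` location.

```shell
#!/bin/sh
# Preflight sketch: report which prerequisites are missing before using Helm.
# KUBECONFIG may hold a colon-separated list; only the first entry is checked.
missing_prereqs() {
  missing=""
  command -v kubectl >/dev/null 2>&1 || missing="$missing kubectl"
  cfg="${KUBECONFIG:-$HOME/.kube/config}"
  cfg="${cfg%%:*}"
  [ -f "$cfg" ] || missing="$missing kubeconfig"
  # Trim the leading space and print the result (empty when nothing is missing).
  echo "${missing# }"
}

result="$(missing_prereqs)"
if [ -z "$result" ]; then
  echo "kubectl and kubeconfig found; current context: $(kubectl config current-context 2>/dev/null)"
else
  echo "missing: $result"
fi
```

Finding the file does not prove the cluster is reachable, so it is still worth running something like `kubectl cluster-info` afterwards.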

Helm client

First install the Helm client (helm). I recommend following the steps on this link for your OS platform. In my case I’m a macOS user, so I’m using Homebrew to install Helm.

    brew install kubernetes-helm

Initialise Helm

Make sure you initialise Helm with the right flag, --client-only. If you don’t, Tiller will be installed on your Kubernetes cluster.

    helm init --client-only

Output:

    $HELM_HOME has been configured at /Users/jose.gomez/.helm.
    Not installing Tiller due to 'client-only' flag having been set
    Happy Helming!

Install Tillerless plug-in

With Helm initialised, the next step is to install the Tillerless plug-in. Remember, this plug-in makes Tiller run on your workstation instead of the Kubernetes cluster.

    helm plugin install https://github.com/rimusz/helm-tiller

We are ready to start using Tillerless Helm.

Using the plug-in locally

By default, Tillerless starts locally and uses the kube-system namespace. You can start it with a different namespace, but make sure that namespace already exists in Kubernetes. In this post we use the default namespace, kube-system.

    helm tiller start

Output:

    Installed Helm version v2.9.1
    Copied found /usr/local/bin/tiller to helm-tiller/bin
    Helm and Tiller are the same version!
    Starting Tiller...
    Tiller namespace: kube-system
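
As noted earlier, Tillerless can start in a namespace other than kube-system as long as that namespace already exists. The sketch below shows one way to guarantee that first. The function name `ensure_namespace` and the namespace `helm-releases` are hypothetical, and it assumes the plug-in accepts the namespace as an argument to `helm tiller start`.

```shell
#!/bin/sh
# Sketch: make sure a namespace exists before pointing Tillerless at it.
# "helm-releases" is a hypothetical namespace name.
ensure_namespace() {
  ns="$1"
  if kubectl get namespace "$ns" >/dev/null 2>&1; then
    echo "namespace $ns already exists"
  else
    kubectl create namespace "$ns"
  fi
}

if command -v kubectl >/dev/null 2>&1; then
  ensure_namespace helm-releases || echo "could not create namespace" >&2
  echo "now run: helm tiller start helm-releases"
else
  echo "kubectl not found on PATH; skipping" >&2
fi
```

The script only prints the start command instead of running it, because `helm tiller start` opens an interactive shell.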

A new shell opens where you can run any helm command in a secure manner. For the purpose of this post we are going to deploy the Consul chart to check that everything is running.

    helm install --name consul stable/consul

Output:

    NAME:   consul
    LAST DEPLOYED: Sun Dec 2 14:03:00 2018
    NAMESPACE: default
    STATUS: DEPLOYED

    RESOURCES:
    ==> v1beta1/StatefulSet
    NAME    DESIRED  CURRENT  AGE
    consul  3        1        0s

    ==> v1beta1/PodDisruptionBudget
    NAME        MIN AVAILABLE  MAX UNAVAILABLE  ALLOWED DISRUPTIONS  AGE
    consul-pdb  N/A            1                0                    0s

    ==> v1/Pod(related)
    NAME      READY  STATUS   RESTARTS  AGE
    consul-0  0/1    Pending  0         0s

    ==> v1/Secret
    NAME               TYPE    DATA  AGE
    consul-gossip-key  Opaque  1     0s

    ==> v1/ConfigMap
    NAME          DATA  AGE
    consul-tests  1     0s

    ==> v1/Service
    NAME       TYPE       CLUSTER-IP      EXTERNAL-IP  PORT(S)                                                                           AGE
    consul     ClusterIP  None            <none>       8500/TCP,8400/TCP,8301/TCP,8301/UDP,8302/TCP,8302/UDP,8300/TCP,8600/TCP,8600/UDP  0s
    consul-ui  NodePort   172.19.210.185  <none>       8500:31820/TCP                                                                    0s

    NOTES:
    1. Watch all cluster members come up.
      $ kubectl get pods --namespace=default -w
    2. Test cluster health using Helm test.
      $ helm test consul
    3. (Optional) Manually confirm consul cluster is healthy.
      $ CONSUL_POD=$(kubectl get pods -l='release=consul' --output=jsonpath={.items[0].metadata.name})
      $ kubectl exec $CONSUL_POD consul members --namespace=default | grep server

It takes about five minutes to fully deploy Consul. You can check the status with the following command:

    helm test consul --cleanup

When Consul is ready, the previous command produces the following output. You will get the PASSED message once the deployment is done.

    RUNNING: consul-ui-test-yht8o
    PASSED: consul-ui-test-yht8o

The next step is to check which port has been assigned to the consul-ui service. You can find it in the chart installation output, in the service section.

    ==> v1/Service
    NAME       TYPE       CLUSTER-IP      EXTERNAL-IP  PORT(S)                                                                           AGE
    consul     ClusterIP  None            <none>       8500/TCP,8400/TCP,8301/TCP,8301/UDP,8302/TCP,8302/UDP,8300/TCP,8600/TCP,8600/UDP  1m
    consul-ui  NodePort   172.19.210.185  <none>       8500:31820/TCP                                                                    1m
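
For a NodePort service, the PORT(S) value has the form service-port:node-port/protocol, so the assigned port is the number after the colon. A small sketch that pulls it out with plain shell parameter expansion (the function name `node_port` is hypothetical):

```shell
#!/bin/sh
# Extract the node port from a PORT(S) value such as "8500:31820/TCP".
node_port() {
  mapping="$1"
  port="${mapping#*:}"   # drop the service port and the colon -> "31820/TCP"
  echo "${port%%/*}"     # drop the protocol suffix            -> "31820"
}

node_port "8500:31820/TCP"
```

Alternatively, assuming the service lives in your current namespace, `kubectl get svc consul-ui -o jsonpath='{.spec.ports[0].nodePort}'` should return the same value straight from the cluster.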

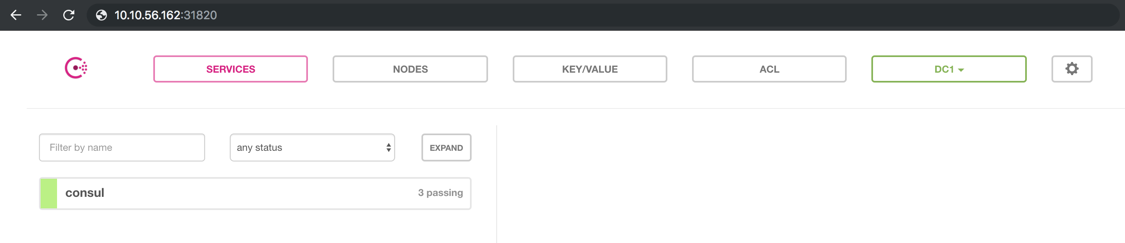

In my case the consul-ui port 8500 has been mapped to port 31820. Open a web browser and use the IP address of one of your workers and your mapped port. A quick way to get your worker IP addresses:

    kubectl get node -o yaml | grep address

Output:

    addresses:
    - address: 10.10.56.161
    - address: tillerless-f42dcf-k8s-master-0
    addresses:
    - address: 10.10.56.162
    - address: tillerless-f42dcf-k8s-worker-0
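
Combining a node IP with the mapped port gives the URL to open. The sketch below builds candidate URLs from "address:" lines like the ones above. The function name `ui_urls` is hypothetical, and the script assumes the UI is served over plain HTTP.

```shell
#!/bin/sh
# Build candidate Consul UI URLs from "address:" lines and a node port.
ui_urls() {
  port="$1"
  # Keep only values that look like IPv4 addresses; host names are skipped.
  sed -n 's/^ *- address: \([0-9][0-9.]*\)$/\1/p' | while read -r ip; do
    echo "http://$ip:$port"
  done
}

# Sample input copied from the output above; in practice, pipe in
# the result of: kubectl get node -o yaml | grep address
printf '%s\n' \
  'addresses:' \
  '- address: 10.10.56.161' \
  '- address: tillerless-f42dcf-k8s-master-0' \
  '- address: 10.10.56.162' \
  '- address: tillerless-f42dcf-k8s-worker-0' | ui_urls 31820
```

The function does not distinguish masters from workers, so both IPs are listed; the host name printed after each IP tells you which node it belongs to.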

Here is a screenshot of the Consul deployment with Tillerless Helm.

When you have finished with your Helm tasks, you can close the shell and stop the Tiller service.

    exit
    helm tiller stop
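
The interactive shell is a poor fit for CI/CD pipelines. As far as I can tell from the plug-in’s README, it also offers `helm tiller run`, which starts Tiller locally, runs a single command and stops Tiller again. A minimal sketch, where the wrapper name `tillerless` and the `TILLER_NS` variable are hypothetical:

```shell
#!/bin/sh
# Non-interactive sketch for CI/CD: run one Helm command through a local Tiller.
# TILLER_NS is a hypothetical variable; kube-system is the plug-in default.
TILLER_NS="${TILLER_NS:-kube-system}"

tillerless() {
  # Delegates to "helm tiller run <namespace> -- <command...>".
  helm tiller run "$TILLER_NS" -- "$@"
}

if command -v helm >/dev/null 2>&1; then
  tillerless helm list || echo "helm list failed; check the cluster connection and the plug-in" >&2
else
  echo "helm not found on PATH; skipping" >&2
fi
```

In a pipeline you would replace `helm list` with the install or upgrade command for your own chart.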

Conclusion

With Tillerless Helm you don’t need to install Tiller on your Kubernetes cluster or create any kind of RBAC for it. With this approach you get a more secure way to deploy your Helm charts.