Hello,

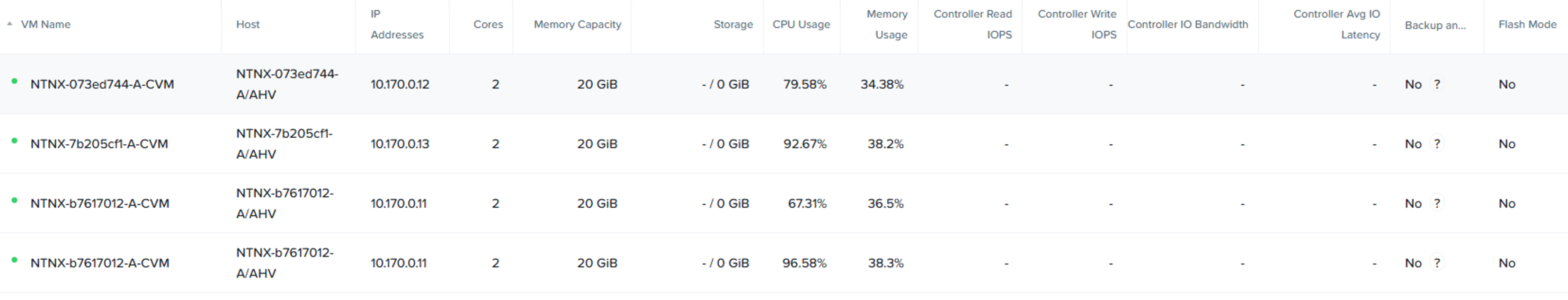

After deploying a 3-node cluster, I can see 4 CVMs.

Two of them look like the same VM, duplicated.

How can I fix this and remove one of them? I cannot update vs0 on the node where the two CVMs are placed.

Rebooting the AHV host and the CVM did not help, and I cannot use acli on that node.

```
nutanix@NTNX-b7617012-A-CVM:10.170.0.11:~$ ncli vm list

Id : 00062cfc-0318-cd70-17eb-005056b90eac::7fa39b58-506c-40f9-b5b8-54146d84855e
Uuid : b452d890-a399-49b3-b415-e975c489d577
Name : NTNX-b7617012-A-CVM
VM IP Addresses : 10.170.0.11, 192.168.5.2, 192.168.5.254
Hypervisor Host Id : 00062cfc-0318-cd70-17eb-005056b90eac::5
Hypervisor Host Uuid : f20c4224-3d4f-4472-bb79-2e8aa0968bc5
Hypervisor Host Name : NTNX-b7617012-A
Memory : 20 GiB (21,474,836,480 bytes)
Virtual CPUs : 2
VDisk Count : 0
Protection Domain :
Consistency Group :

Id : 00062cfc-0318-cd70-17eb-005056b90eac::9aef76d3-3584-49a1-af9c-4b50c900dd9d
Uuid : 1a269e5c-0aee-4af3-af21-e036ccf0e80f
Name : NTNX-b7617012-A-CVM
VM IP Addresses : 10.170.0.11, 192.168.5.2, 192.168.5.254
Hypervisor Host Id : 00062cfc-0318-cd70-17eb-005056b90eac::5
Hypervisor Host Uuid : f20c4224-3d4f-4472-bb79-2e8aa0968bc5
Hypervisor Host Name : NTNX-b7617012-A
Memory : 20 GiB (21,474,836,480 bytes)
Virtual CPUs : 2
VDisk Count : 0
Protection Domain :
Consistency Group :

Id : 00062cfc-0318-cd70-17eb-005056b90eac::a26669b2-6d6e-4a2d-89ad-085902f41792
Uuid : ddad9870-29f1-4b41-b247-007b10463ac5
Name : NTNX-073ed744-A-CVM
VM IP Addresses : 10.170.0.12, 192.168.5.254, 192.168.5.2
Hypervisor Host Id : 00062cfc-0318-cd70-17eb-005056b90eac::6
Hypervisor Host Uuid : 4d97eeb4-86f3-4818-877d-598864bdf7a2
Hypervisor Host Name : NTNX-073ed744-A
Memory : 20 GiB (21,474,836,480 bytes)
Virtual CPUs : 2
VDisk Count : 0
Protection Domain :
Consistency Group :

Id : 00062cfc-0318-cd70-17eb-005056b90eac::f696a5af-ace4-411b-804d-d1223fdaf246
Uuid : c2203270-3a25-4e52-8e96-4d05dadb8ee7
Name : NTNX-7b205cf1-A-CVM
VM IP Addresses : 10.170.0.13, 192.168.5.2, 192.168.5.254
Hypervisor Host Id : 00062cfc-0318-cd70-17eb-005056b90eac::7
Hypervisor Host Uuid : d355c39c-46c8-4b02-a2d6-c5e445b5c0d6
Hypervisor Host Name : NTNX-7b205cf1-A
Memory : 20 GiB (21,474,836,480 bytes)
Virtual CPUs : 2
VDisk Count : 0
Protection Domain :
Consistency Group :

nutanix@NTNX-b7617012-A-CVM:10.170.0.11:~$ acli
Failed to connect to server: [Errno 111] Connection refused
```
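To make the duplication easier to see, the `ncli vm list` output can be grouped by CVM name and hypervisor host UUID. Below is a minimal Python sketch, assuming the output is saved as plain text in the `Key : Value` layout shown above. `parse_ncli_vm_list` and `find_duplicate_cvms` are hypothetical helper names, not part of any Nutanix tooling. The sketch only parses text and changes nothing on the cluster.

```python
import re
from collections import defaultdict

# Matches "Key : value" lines (assumption: ncli puts whitespace before the colon).
# Prompt lines and errors such as "server: [Errno 111]" do not match and are skipped.
FIELD_RE = re.compile(r"^\s*([A-Za-z][A-Za-z ]*?)\s+:(?:\s+(.*))?$")


def parse_ncli_vm_list(text):
    """Return one dict per VM record; a new record starts at each 'Id' field."""
    records = []
    for line in text.splitlines():
        match = FIELD_RE.match(line)
        if not match:
            continue  # skip prompts, blank lines and error messages
        key, value = match.group(1), (match.group(2) or "").strip()
        if key == "Id":
            records.append({})
        if records:
            records[-1][key] = value
    return records


def find_duplicate_cvms(records):
    """Group VM Uuids by (Name, Hypervisor Host Uuid); keep groups with more than one entry."""
    groups = defaultdict(list)
    for rec in records:
        groups[(rec.get("Name"), rec.get("Hypervisor Host Uuid"))].append(rec.get("Uuid"))
    return {key: uuids for key, uuids in groups.items() if len(uuids) > 1}
```

For example, after saving the listing with `ncli vm list > vms.txt` (the file name is arbitrary), `find_duplicate_cvms(parse_ncli_vm_list(open("vms.txt").read()))` should report a single group for the data above: `NTNX-b7617012-A-CVM` on host `f20c4224-3d4f-4472-bb79-2e8aa0968bc5`, with VM UUIDs `b452d890-a399-49b3-b415-e975c489d577` and `1a269e5c-0aee-4af3-af21-e036ccf0e80f`.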