Hello!

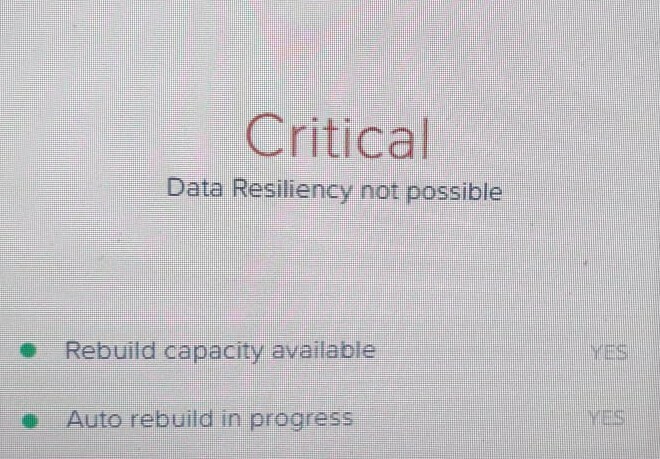

We have a 5-node RF3 cluster. I tried shutting down 2 of the 5 nodes at the same time. The cluster stays online, but a rebuild never happens. Is this normal? Should a 5-node RF3 cluster rebuild after losing 2 nodes? Can it survive losing 1 more node after already losing 2 (so only 2 of 5 stay online)?