This post was authored by Michael Haigh, Technical Marketing Engineer, Nutanix

You’re likely aware that Kubernetes Pods (and containers in general) are ephemeral, meaning it’s expected that they can die at any time. If you’re new to Kubernetes, this can be quite alarming. However, Kubernetes objects like ReplicaSets or Deployments will automatically replace these failed Pods.

This replacement of Pods does present a problem around network connectivity. While each Pod has a unique IP that’s internal to the Kubernetes cluster, a newly generated Pod is not guaranteed to have the same IP. This means that you cannot rely on Pod IPs to access your Kubernetes-based application. Instead, Kubernetes has three main ways of providing external access to your application:

- NodePort: A Kubernetes Service which exposes your Pods via a port (generally 30000–32767), which when used in conjunction with a Kubernetes node’s IP (http://nodeIP:port) provides external access. This is the easiest way to provide access to your application, but is not recommended for production workloads.

- LoadBalancer: Another Kubernetes Service that exposes your Pods, which by default is only available via public cloud providers. The exact implementation varies by cloud provider, but this type of Service provides a unique IP address or domain name for your application. This is the simplest production-viable method for exposing your application.

- Ingress: A separate Kubernetes object which provides access to a Kubernetes Service (note that NodePort and LoadBalancer themselves are Services), rather than direct Pod access. Ingress provides advanced features like load balancing, SSL termination and name-based virtual hosting, but relies on a third-party implementation of an IngressController in order to work. This is the most feature-rich, yet most complicated, method of exposing your Kubernetes applications.

While a NodePort Service will allow you or your end users to access your Kubernetes-based application, relying on the combination of a node IP and a five-digit port number is not ideal. As the simplest production-viable method, a LoadBalancer Service is preferred, since it allows you to access your application with a single IP or domain name.
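To make the difference between the two Service types concrete, here is a minimal sketch of a Service manifest. The name `my-app`, the label selector, and port 8080 are hypothetical placeholders and do not come from this post; only the `type` field decides whether Kubernetes creates a NodePort or a LoadBalancer Service.

```shell
# Write a hypothetical Service manifest to disk.
# "my-app" and port 8080 are placeholders, not values from this post.
cat << 'EOF' > my-app-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: my-app
spec:
  type: LoadBalancer   # set to NodePort to expose only a node port
  selector:
    app: my-app        # must match the labels on your Pods
  ports:
  - port: 80           # port exposed by the Service
    targetPort: 8080   # port the container listens on
EOF

# On a live cluster you would then run:
#   kubectl apply -f my-app-service.yaml
```

With `type: NodePort` the application is reached at a node IP plus a high port, while `type: LoadBalancer` asks the cluster for a dedicated external IP.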

As mentioned, by default, a LoadBalancer Service is only available on cloud providers, not privately hosted Kubernetes clusters like Nutanix Karbon. However, as we’ll see in this blog post, it’s a very straightforward process to utilize MetalLB to provide LoadBalancer Services for Nutanix Karbon.

Note: while Ingress and IngressController are great methods of exposing your Kubernetes application, they’re outside the scope of this blog.

Prerequisites

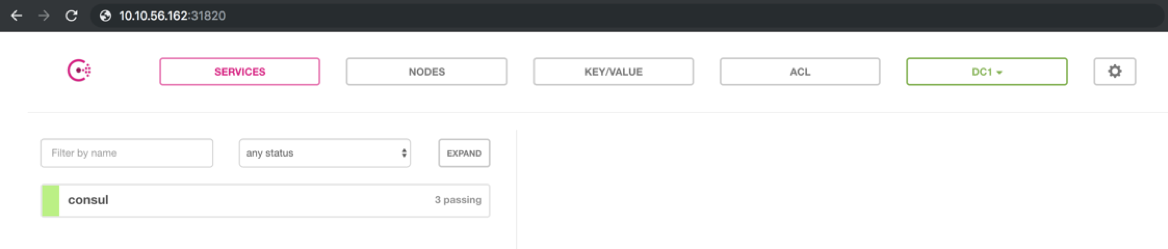

This blog post will be building off my colleague Jose Gomez’s Tillerless Helm blog post, so it’s recommended to run through that prior to this post. In that post, Jose goes through configuring Helm, and installing Consul through a Helm chart. By default, the Consul Helm chart utilizes a NodePort type of Service, which is evident at the end of Jose’s post where he finds a Node IP (10.10.56.162), and utilizes port 31820 to access the Consul application:

Accessing Consul via a NodePort

In this blog post, we’ll deploy the same Consul application, but instead use a LoadBalancer Service rather than NodePort.

MetalLB Installation

MetalLB can be installed cluster-wide via a single kubectl command (depending on the age of this post, it is recommended to navigate to the MetalLB installation page to ensure there hasn’t been an updated release).

    $ kubectl apply -f https://raw.githubusercontent.com/google/metallb/v0.7.3/manifests/metallb.yaml

After running the above command, you should see various components of MetalLB get created. For instance, to view the pods that were created:

    $ kubectl -n metallb-system get pods
    NAME                          READY   STATUS    RESTARTS   AGE
    controller-7cc9c87cfb-f7rxt   1/1     Running   0          3m28s
    speaker-q5x7w                 1/1     Running   0          3m28s
    speaker-qnbqg                 1/1     Running   0          3m28s
    speaker-qwtjl                 1/1     Running   0          3m28s

Now that installation is complete, it’s time to move on to the configuration step.

MetalLB Configuration

Generally speaking, MetalLB can be configured in two ways:

- Layer 2 Configuration - this method relies on the IPs that MetalLB hands out to be in the same L2 domain as the Kubernetes nodes.

- BGP Configuration - this method does not have the above L2 limitation, but it does rely on knowledge of your router’s BGP configuration.

In this blog post, we’ll be focusing on the simpler Layer 2 configuration. If you’re not aware of the network that your Karbon cluster is deployed into, you can run the following command to narrow things down:

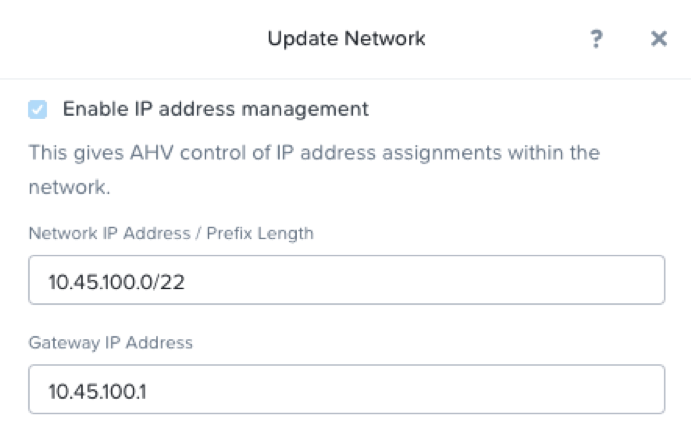

    $ kubectl describe nodes | grep Internal
    InternalIP: 10.45.100.128
    InternalIP: 10.45.100.110
    InternalIP: 10.45.100.191
    InternalIP: 10.45.100.139
    InternalIP: 10.45.100.133

In Prism, I see that the matching network is 10.45.100.0/22:

Karbon Kubernetes Cluster Network

This means that MetalLB in Layer 2 mode will only work if I designate available addresses from the 10.45.100.1 to 10.45.103.254 IP range. In my environment, I set aside addresses 10.45.100.11 through 10.45.100.15 for MetalLB to use. To configure MetalLB, we’ll need to create and apply a Kubernetes ConfigMap:

    $ cat << EOF > metallb-config.yaml
    apiVersion: v1
    kind: ConfigMap
    metadata:
      namespace: metallb-system
      name: config
    data:
      config: |
        address-pools:
        - name: default
          protocol: layer2
          addresses:
          - 10.45.100.11-10.45.100.15 #substitute your range here
    EOF
    $ kubectl apply -f metallb-config.yaml

That’s it! After running three simple commands (MetalLB install, ConfigMap create, ConfigMap apply), any LoadBalancer type of Service will automatically be handed an IP from the available range by MetalLB. Let’s see this in action by deploying Consul with a Helm chart.
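As a sanity check on the address range, the Layer 2 requirement (pool addresses must sit in the same network as the nodes) can be verified with plain shell arithmetic. The following is only a sketch: the helper names `ip_to_int` and `in_cidr` are made up for illustration, and it assumes well-formed IPv4 input without leading zeros.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if address $1 lies inside network $2 (CIDR notation).
in_cidr() {
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")    # part before the slash
  bits=${2#*/}                  # prefix length after the slash
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# Check both ends of the MetalLB pool against the node network.
for addr in 10.45.100.11 10.45.100.15; do
  if in_cidr "$addr" 10.45.100.0/22; then
    echo "$addr is inside 10.45.100.0/22"
  else
    echo "$addr is OUTSIDE 10.45.100.0/22"
  fi
done
```

Substitute your own pool boundaries and node network. If either end of the pool falls outside the network, MetalLB in Layer 2 mode will not be able to serve those addresses.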

Consul Deployment with a LoadBalancer Service

As mentioned earlier, we’ll be adding on to the Tillerless Helm blog post. So if you haven’t already, be sure to:

- Install Helm on your workstation

- Initialize in client-only mode

- Install the helm-tiller plugin

- Start helm tiller

At this point, we’re ready to install Consul, but we’ll do that with an additional set parameter:

    $ helm install --name consul --set uiService.type=LoadBalancer,HttpPort=80 stable/consul

The first set key-value pair instructs Helm to create a LoadBalancer Service instead of the default NodePort, while the second changes the default port 8500 to port 80, so we will not have to append any ports to our IP. In the output of that command, you should see the Service section call out a Service of type LoadBalancer:

    ==> v1/Service
    NAME        TYPE           CLUSTER-IP     EXTERNAL-IP    PORT(S)        AGE
    consul      ClusterIP      None           <none>         80/TCP...      1s
    consul-ui   LoadBalancer   172.19.62.30   10.45.100.11   80:31263/TCP   1s

You can also view your Service and its corresponding IP with a kubectl command:

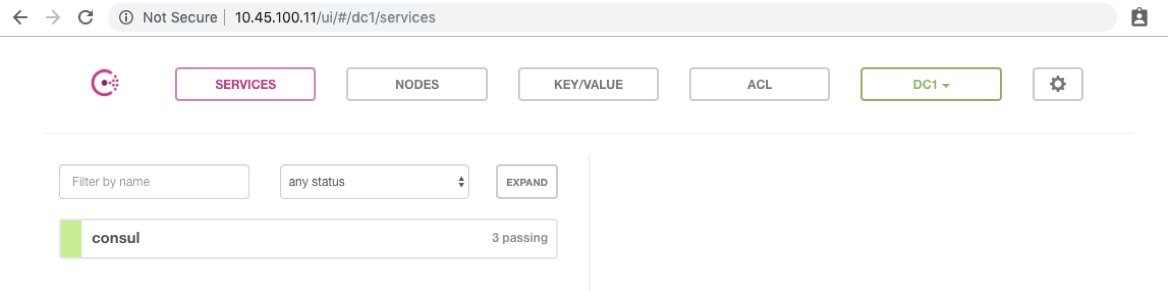

    $ kubectl get svc
    NAME         TYPE           CLUSTER-IP     EXTERNAL-IP    PORT(S)        AGE
    consul       ClusterIP      None           <none>         80/TCP...      117s
    consul-ui    LoadBalancer   172.19.62.30   10.45.100.11   80:31263/TCP   117s
    kubernetes   ClusterIP      172.19.0.1     <none>         443/TCP        3h40m

To verify our deployment, copy the External-IP of the consul-ui service (in my case 10.45.100.11) and paste it into your web browser. We should see the same Consul UI as was shown in Jose’s blog post:

Accessing Consul via a LoadBalancer
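If you would rather pick up the address from the command line than copy it by hand, something along these lines may help. This sketch parses a sample of the `kubectl get svc` output from this post with awk; the variable names are illustrative, and on a live cluster you would pipe the real command output instead of the sample text.

```shell
# Sample rows copied from the post; on a live cluster use:
#   svc_output=$(kubectl get svc)
svc_output='NAME        TYPE           CLUSTER-IP     EXTERNAL-IP    PORT(S)        AGE
consul-ui   LoadBalancer   172.19.62.30   10.45.100.11   80:31263/TCP   117s'

# The EXTERNAL-IP is the fourth whitespace-separated column.
external_ip=$(printf '%s\n' "$svc_output" | awk '$1 == "consul-ui" { print $4 }')
echo "Consul UI address: http://$external_ip/"

# kubectl can also return the field directly, without text parsing:
#   kubectl get svc consul-ui -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
```

The jsonpath variant in the final comment is generally more robust than column parsing, since it does not depend on the table layout.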

It’s worth noting that with MetalLB in Layer 2 mode, a particular service IP (10.45.100.11 in our example) is routed to a single Kubernetes worker node. From there, kube-proxy spreads the traffic to all of the underlying pods. While each pod will be utilized, total bandwidth of the Service is limited to the bandwidth of a single worker node. If this limitation is not acceptable, please look into using MetalLB in BGP mode.

Conclusion

LoadBalancer Services allow you to expose your Kubernetes-based applications to your end users with simple IPs or domain names, rather than relying on long, random port numbers. With just a couple of simple commands you can configure your Karbon Kubernetes clusters to automatically hand out IPs when a LoadBalancer Service is created. This gives your on-prem Kubernetes clusters the same great end user experience as public cloud hosted clusters. Thanks for reading!

Disclaimer: This blog may contain links to external websites that are not part of Nutanix.com. Nutanix does not control these sites and disclaims all responsibility for the content or accuracy of any external site. Our decision to link to an external site should not be considered an endorsement of any content on such site.

© 2019 Nutanix, Inc. All rights reserved. Nutanix, the Nutanix logo and the other Nutanix products and features mentioned herein are registered trademarks or trademarks of Nutanix, Inc. in the United States and other countries. All other brand names mentioned herein are for identification purposes only and may be the trademarks of their respective holder(s).
