Hi guys,

Is there a way to change the min. snap retention count for local site AND remote site?

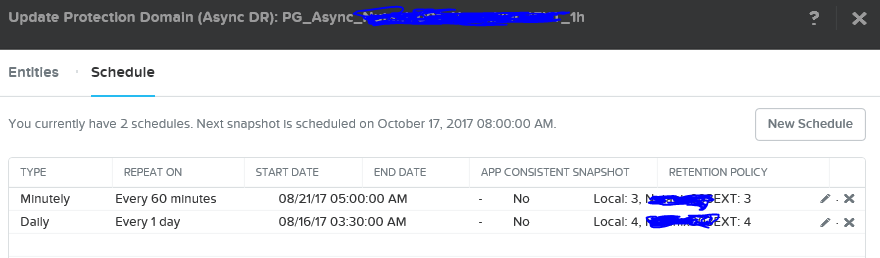

We have a PD which has two schedules.

- every 60 mins, keep 3:3

- every 24 hours, keep 4:4

The problem is that if replication for the 60-minute schedule fails, for example because of network problems or because replication takes longer than normal, the snapshots on the remote site expire.

So let's say the disaster happens Monday night at 1am. If the first admin arrives at 7am, every 60-minute snapshot will have expired and we can only work with the older 24-hour snapshots.
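To make the timing concrete, here is a rough sketch of the arithmetic in Python. It assumes each snapshot simply expires after (schedule interval × keep count), which matches the behaviour I'm seeing but is my assumption, not something taken from the Nutanix docs. The function name and the dates are made up for illustration.

```python
from datetime import datetime, timedelta

def surviving_snapshots(last_success, interval, keep, now):
    """Return creation times of snapshots that have not expired at `now`.

    Assumption (not from Nutanix documentation): snapshots were taken every
    `interval` up to `last_success`, and each one expires `interval * keep`
    after it was created.
    """
    lifetime = interval * keep
    snaps = [last_success - i * interval for i in range(keep)]
    return [s for s in snaps if s + lifetime > now]

# Hypothetical dates: disaster on a Monday at 1am, first admin on site at 7am.
disaster = datetime(2024, 1, 1, 1, 0)
admin_arrives = datetime(2024, 1, 1, 7, 0)

# 60-minute schedule, keep 3: last replicated snapshot taken at 1am.
hourly = surviving_snapshots(disaster, timedelta(hours=1), 3, admin_arrives)

# 24-hour schedule, keep 4: last replicated snapshot assumed taken at midnight.
daily = surviving_snapshots(datetime(2024, 1, 1, 0, 0),
                            timedelta(hours=24), 4, admin_arrives)

print("hourly snapshots left:", len(hourly))
print("daily snapshots left:", len(daily))
```

Under that assumption the newest hourly snapshot only lives until 4am, so nothing from the 60-minute schedule is left by 7am, while the daily ones are all still there.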

In the Nutanix KB I can only find this article:

https://portal.nutanix.com/#/page/kbs/details?targetId=kA0600000008dn2CAA

which says: "...It's also important to note that the min-snaps value only affects local protection domains, not remote sites..."
