Hi Team,

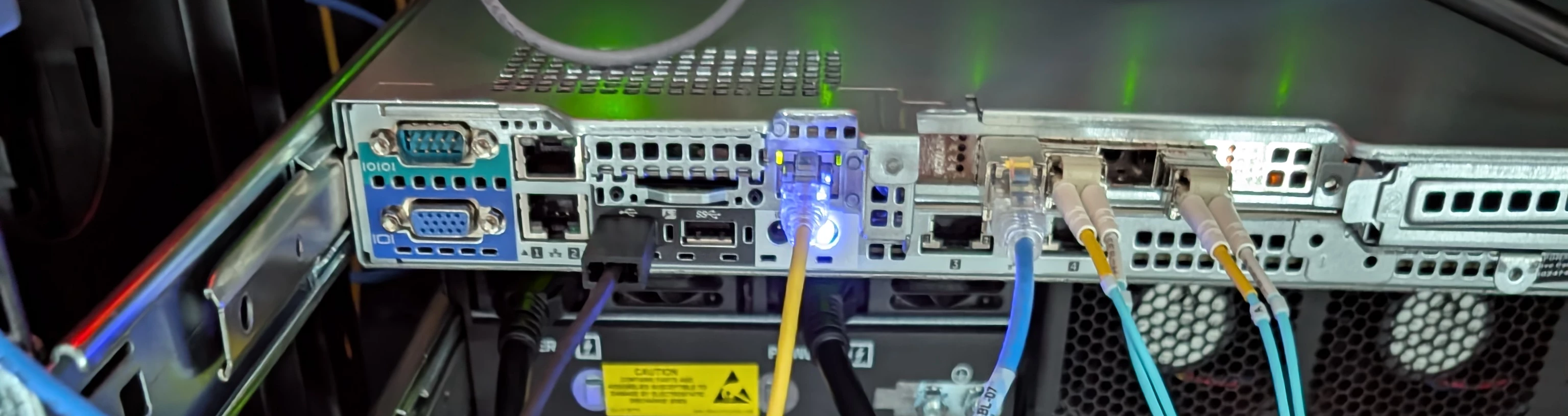

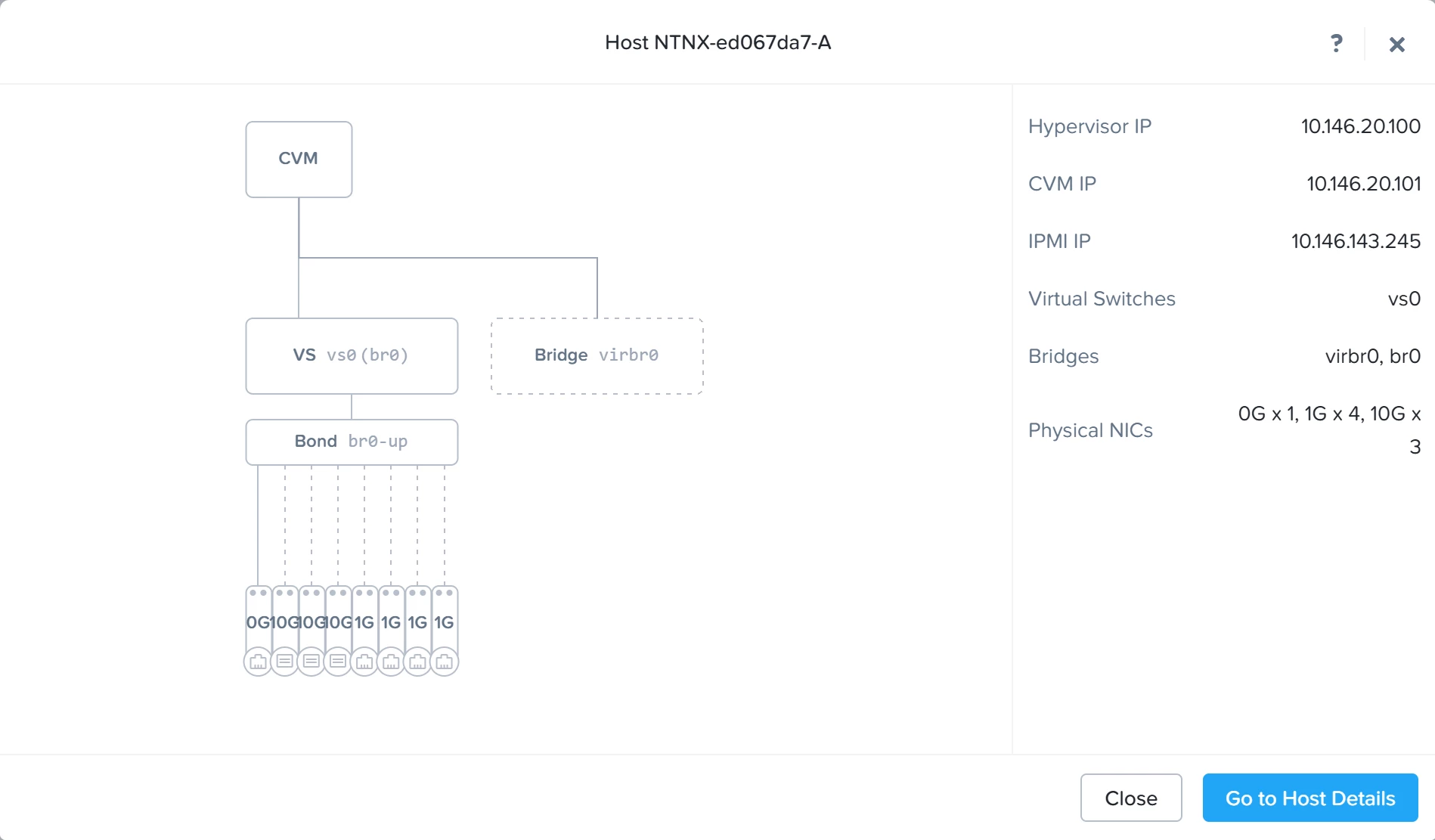

I recently installed Nutanix CE on a Dell PowerEdge R430, and I am having trouble creating the network configuration needed to create VMs. My lab network is shown below:

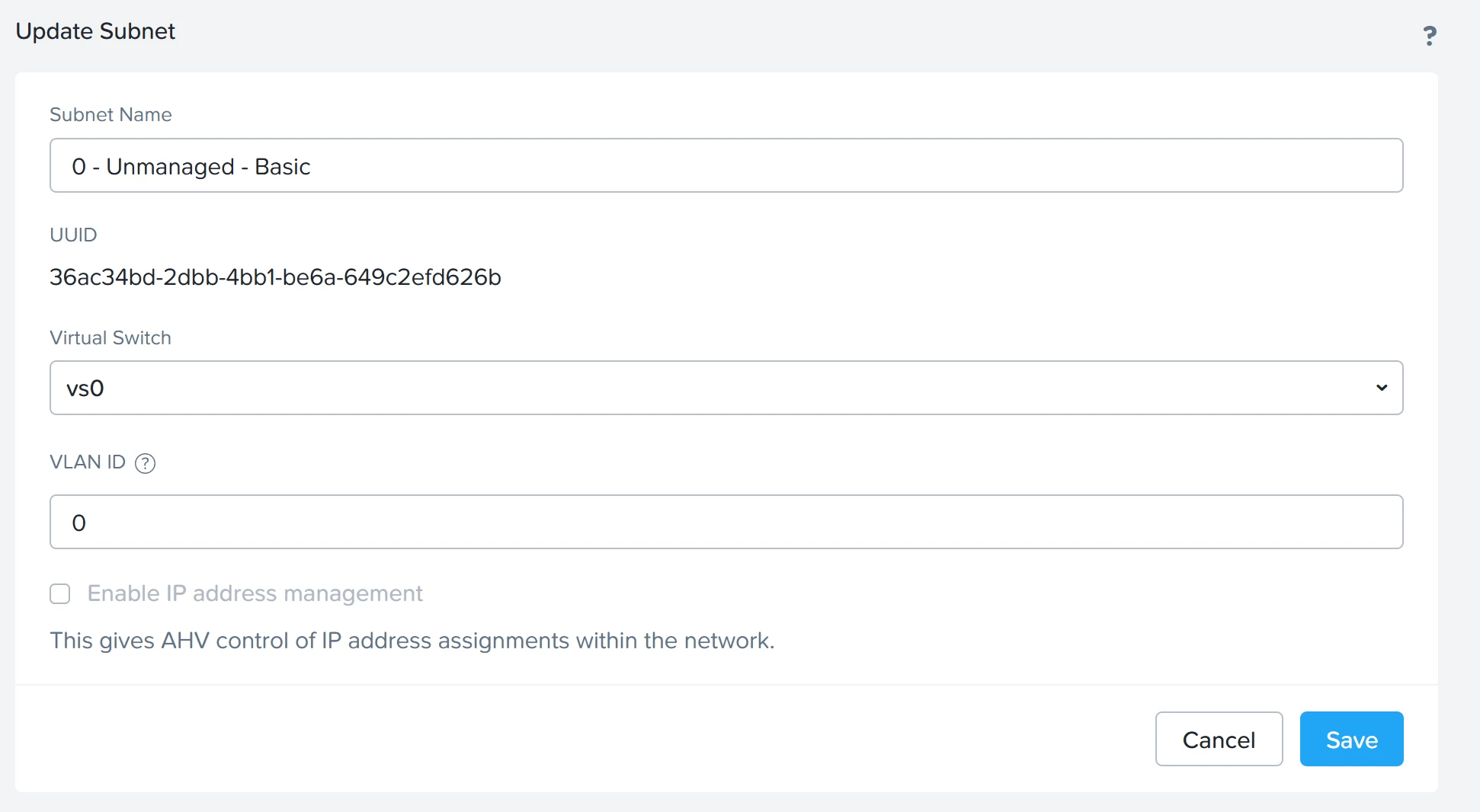

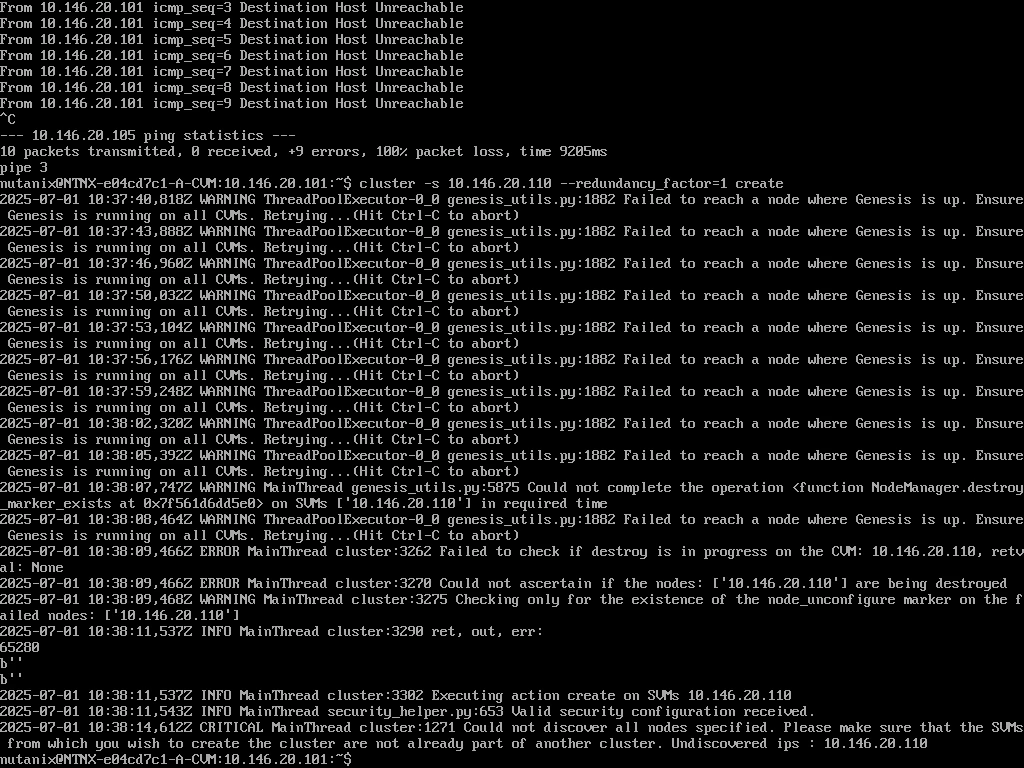

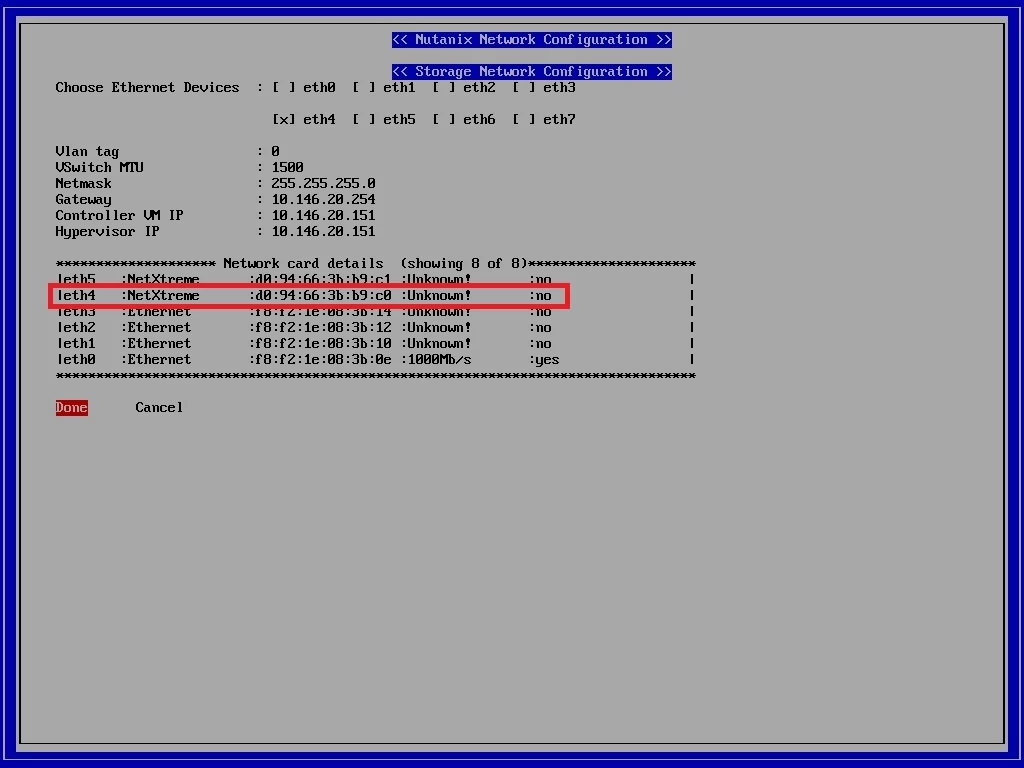

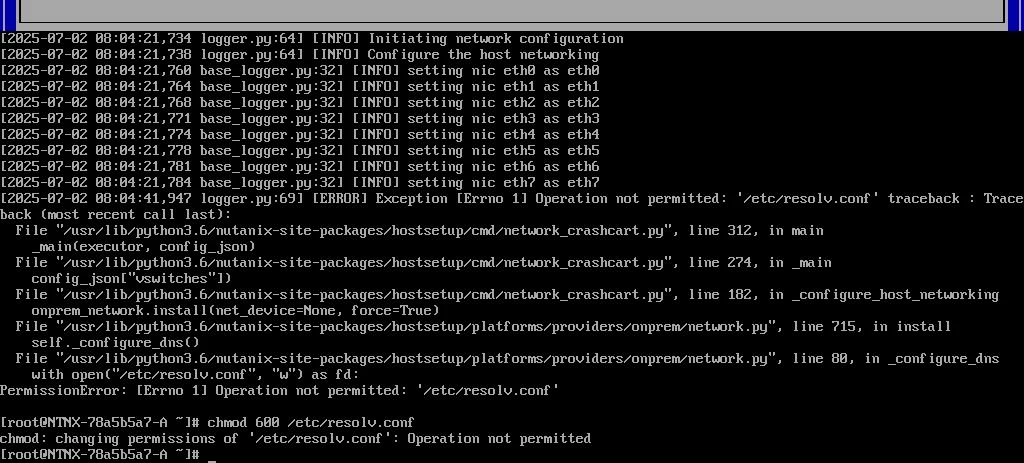

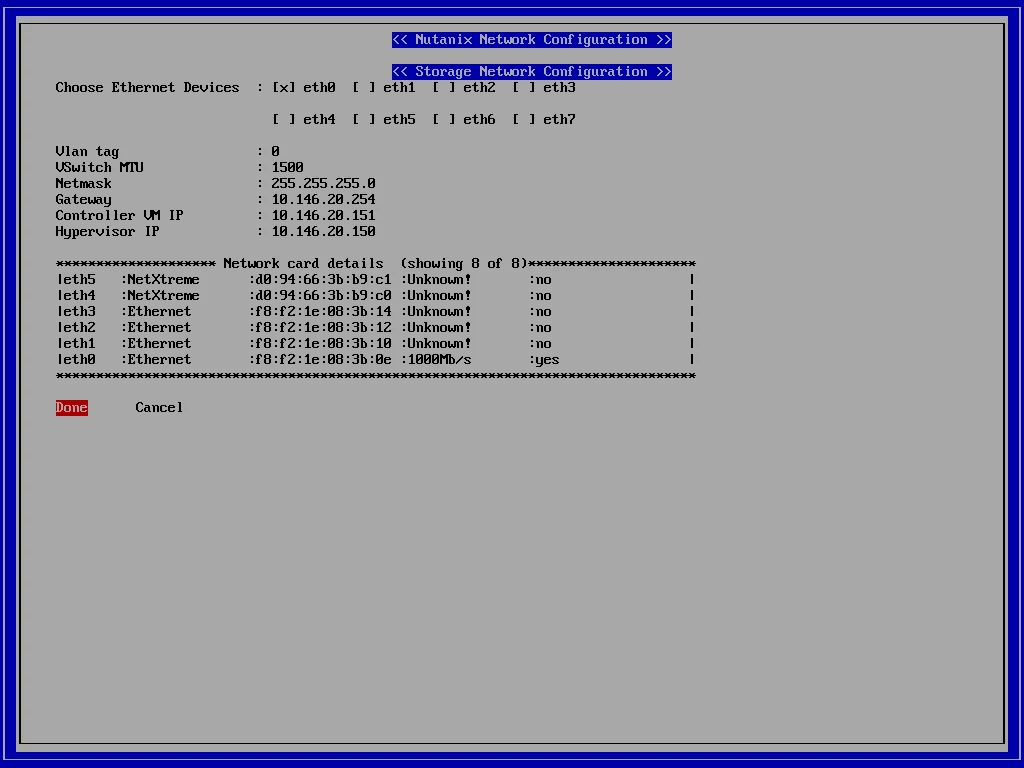

I have interfaces eth0 through eth7. eth4 is connected to the 10.146.20.0/24 network, and I would like to configure the same network in Nutanix so that the VMs I create are on that subnet. I created a subnet and booted a Linux instance to check reachability, but I cannot reach the gateway from that instance.

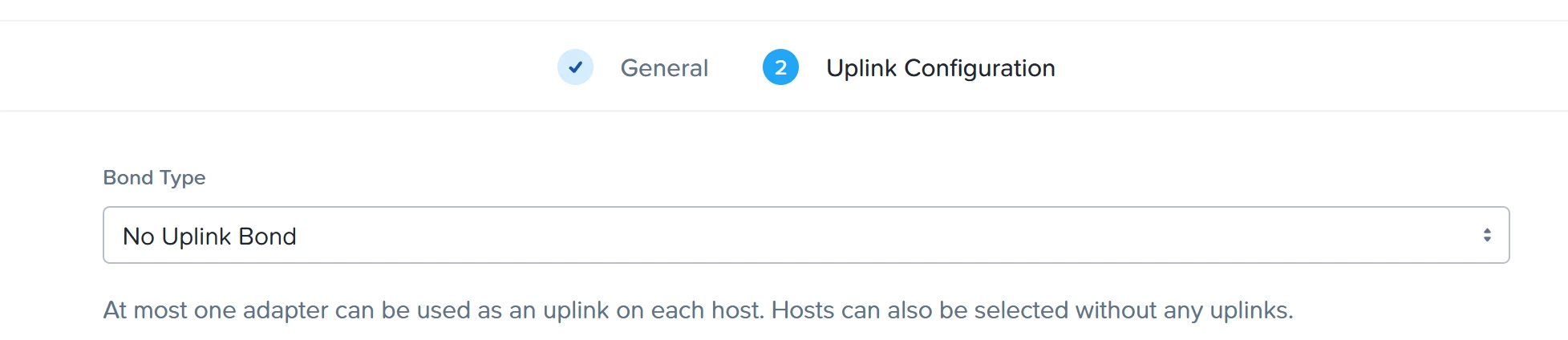

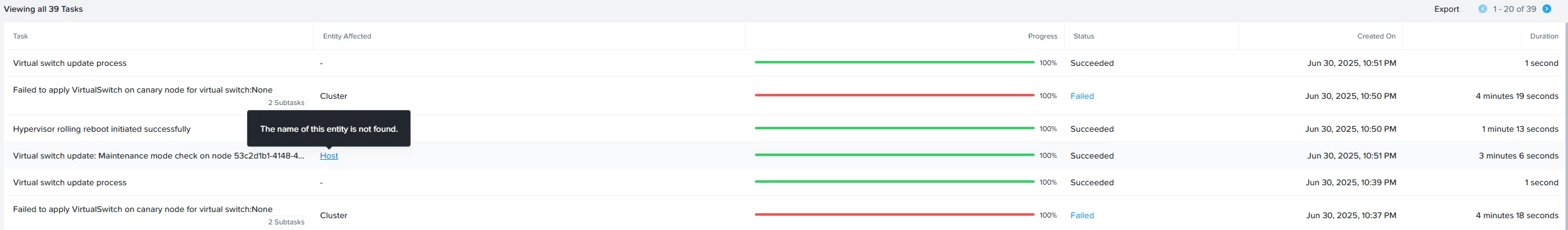

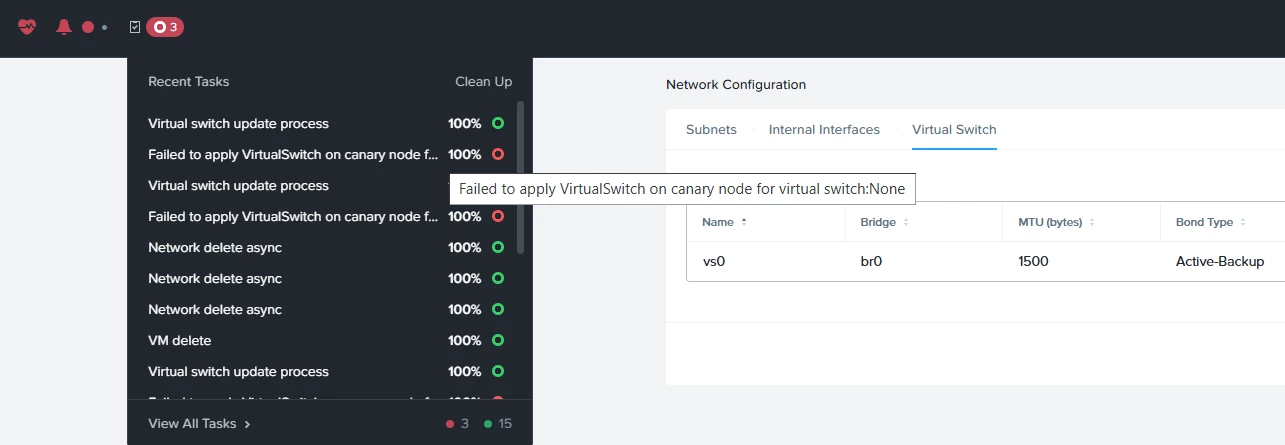

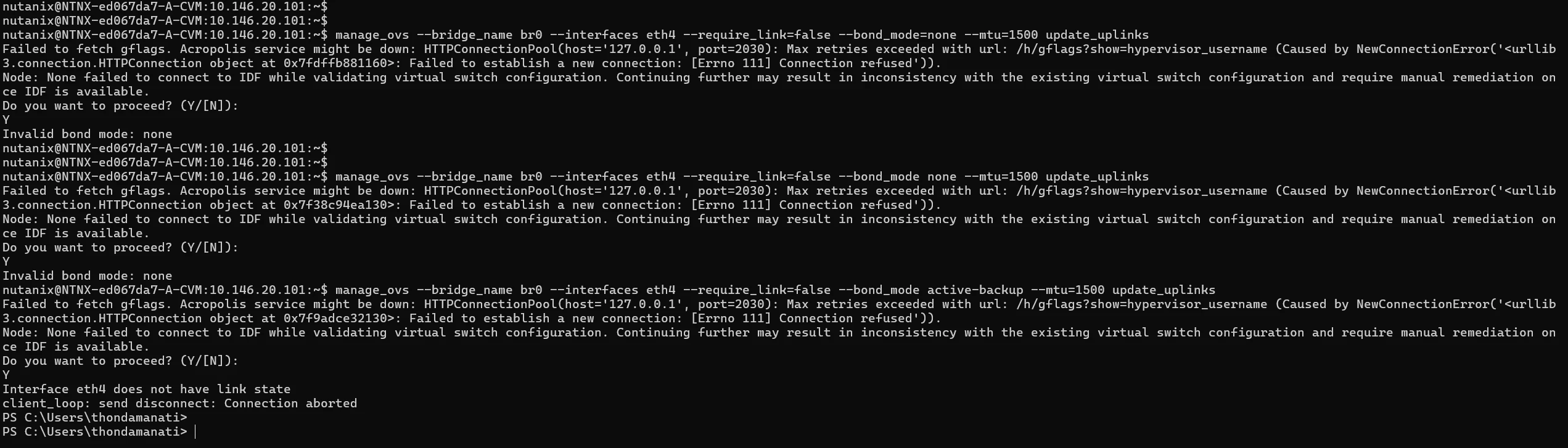

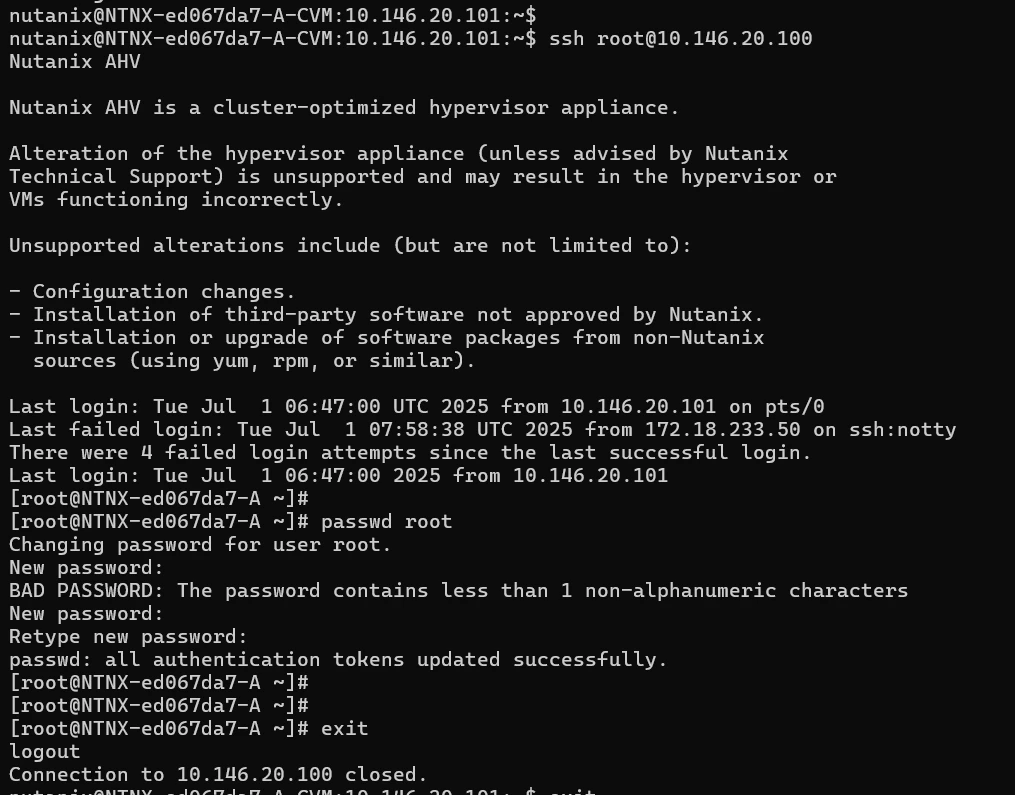

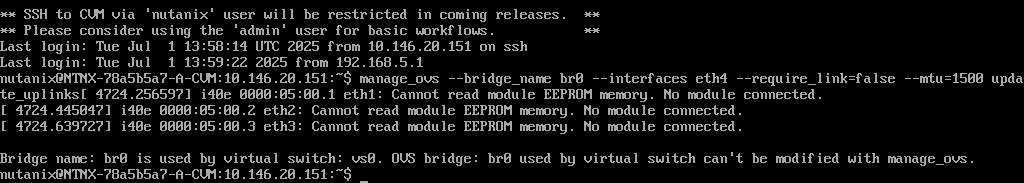

I reached out to my lab team, and they suggested putting eth4 alone in a bond. To do that, I tried to assign only eth4 to vs0, but I was not able to.

Could you please tell me the sequence of steps I need to follow to create VMs successfully?

Thanks,