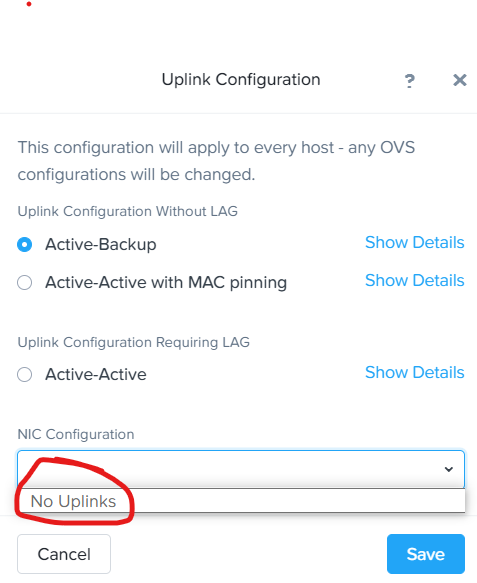

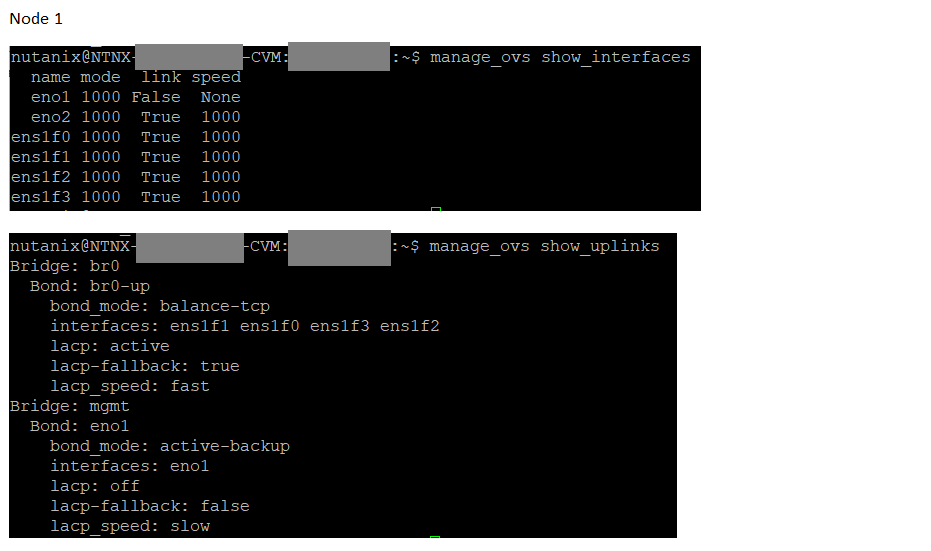

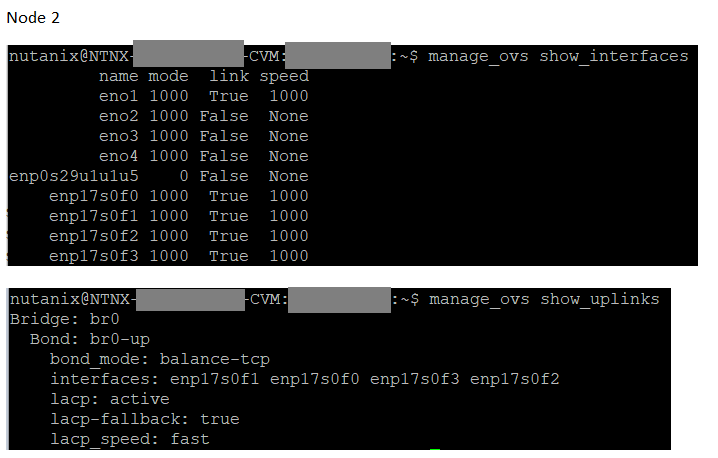

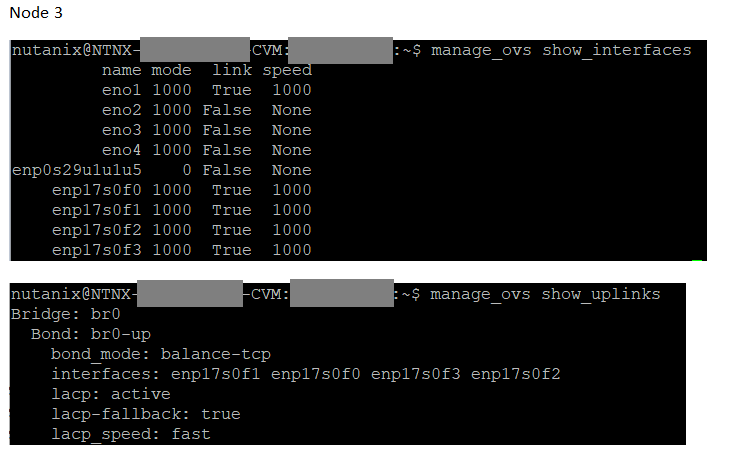

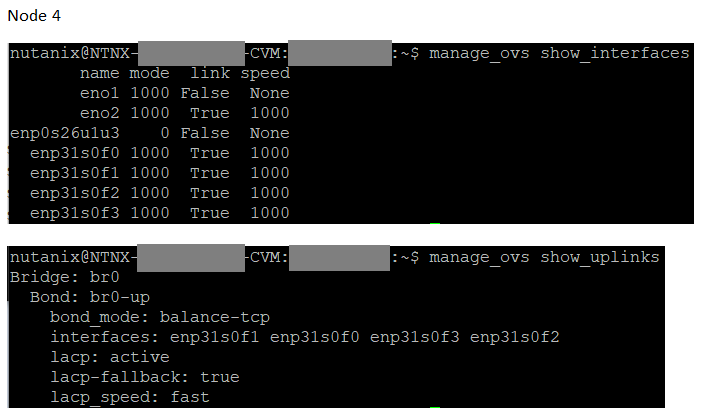

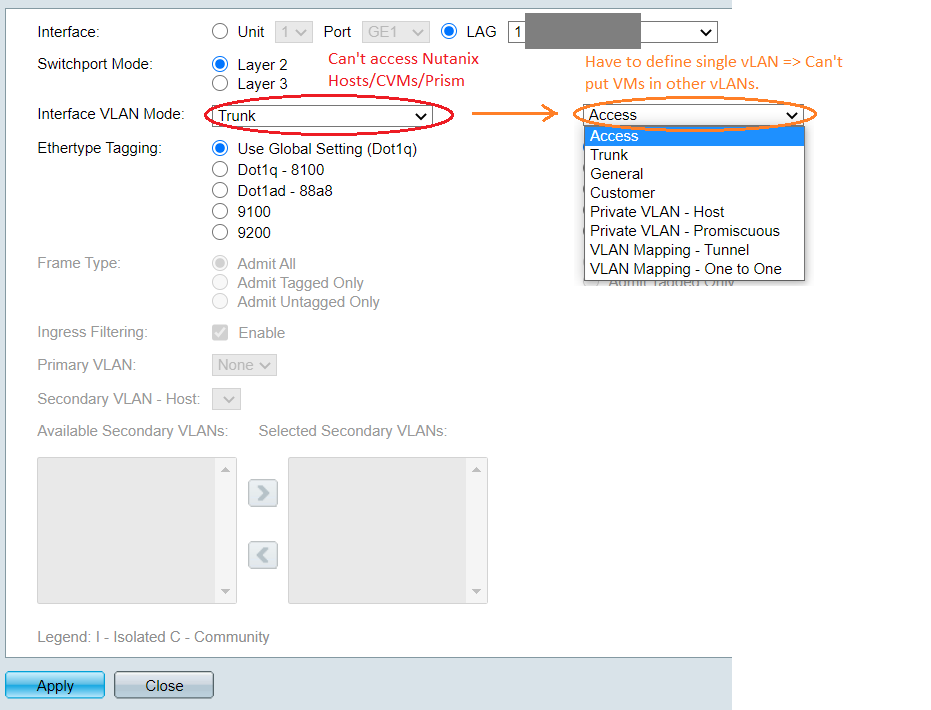

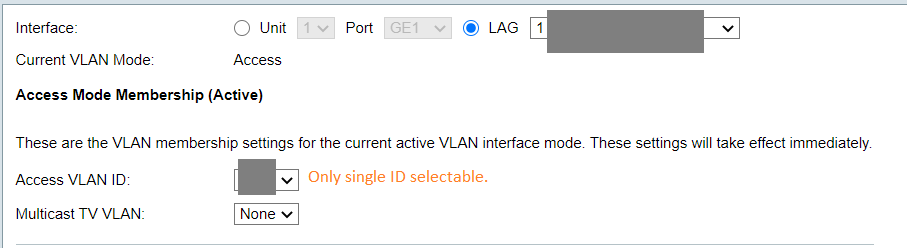

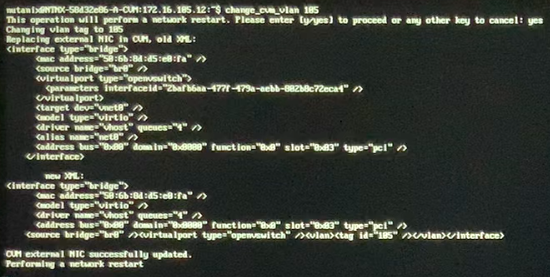

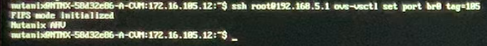

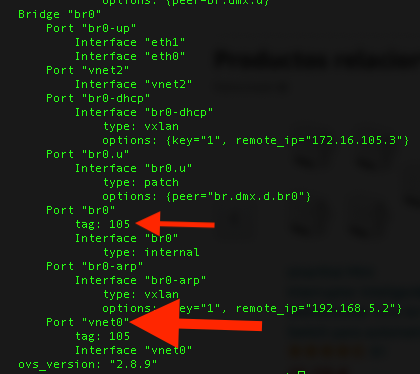

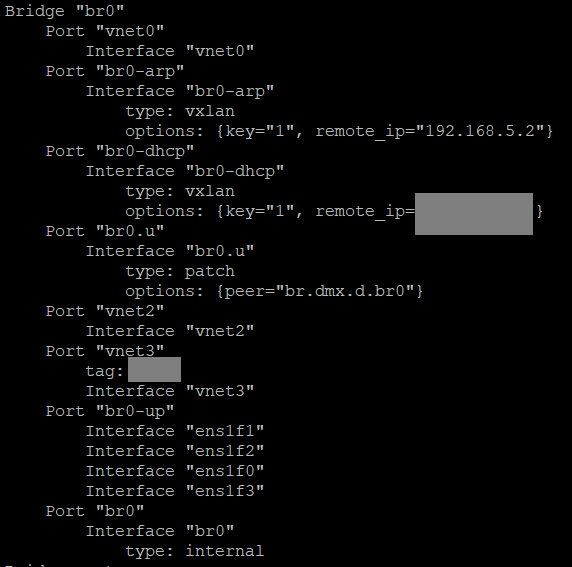

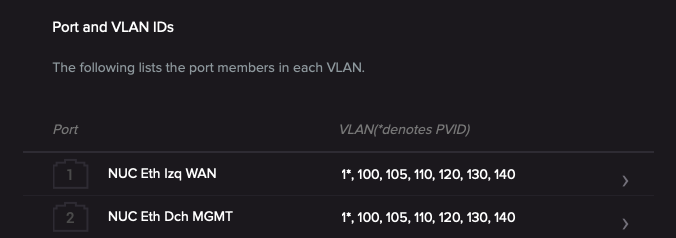

I set up a new cluster, and “Uplink Configuration” shows “No Uplinks”. What could be the reason for that? (See: “PRISM/console/#page/network” » “+ Uplink Configuration” » “NIC Configuration”; the dropdown shows only one unselectable option: “No Uplinks”.)