Hi All

Havoc struck this morning when I tried to move my AHV cluster from the old switch stack to the new switch stack. The hosts, I think, went into panic mode and started restarting various VMs.

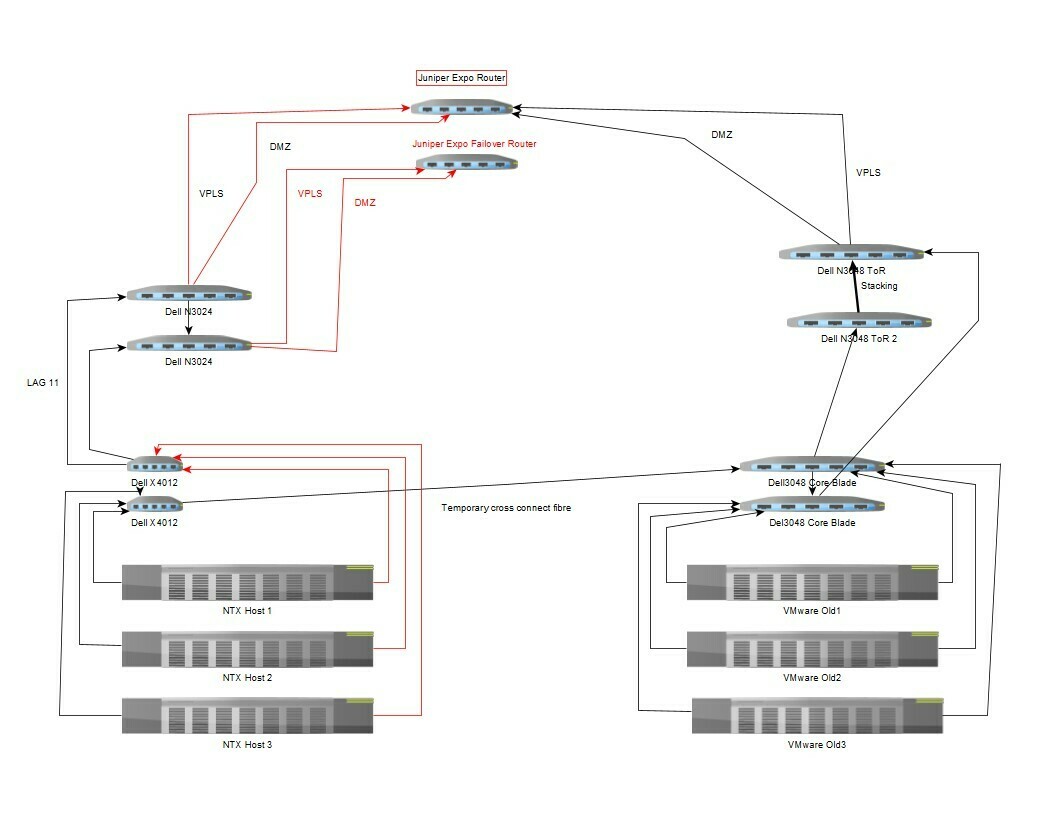

The background is this: I have 2 x Dell X4012 core switches and 2 x Dell N3024 ToR switches. Because I am in the middle of moving the VMware environment to Nutanix, only one X4012 is currently in use, connected to NIC 1 on each of the 3 hosts. From that X4012 there is a cross-connect into the old VMware network's core blade switches (2 x Dell 8024). These connect to the old N3048 ToR switch stack, which in turn connects to the router for VPLS and the DMZ.

To move the connectivity, I thought I could connect the second NIC of each host to the second X4012, then disconnect the cross-connect and the first NICs. The hosts would then be using the newer switches, albeit on the opposite NICs. After that, I planned to reconfigure and update the first X4012 and add it back into the stack, with a LAG to the N3024 stack and no cross-connect. When I moved the NIC connections I lost all pings, so I rolled back. That probably made the problem worse, because I still could not ping, and it was then that I realised I had a restart storm.

Could anyone suggest a way of making these changes without triggering a storm? I have attached a diagram: the parts in black show the current setup, and the parts in red show where I need to get to.

Thanks in advance