Hello dear Nutanix community,

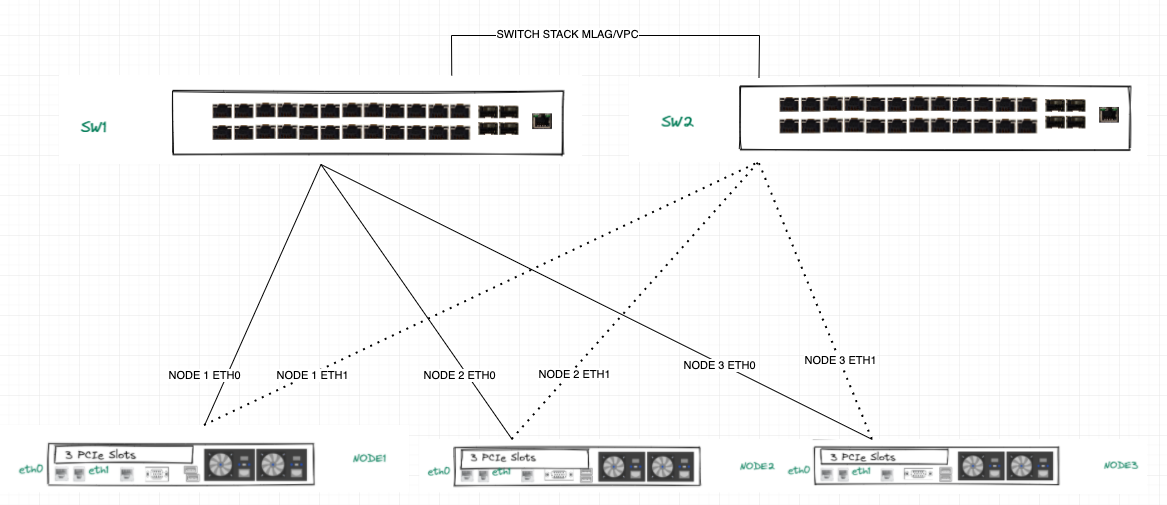

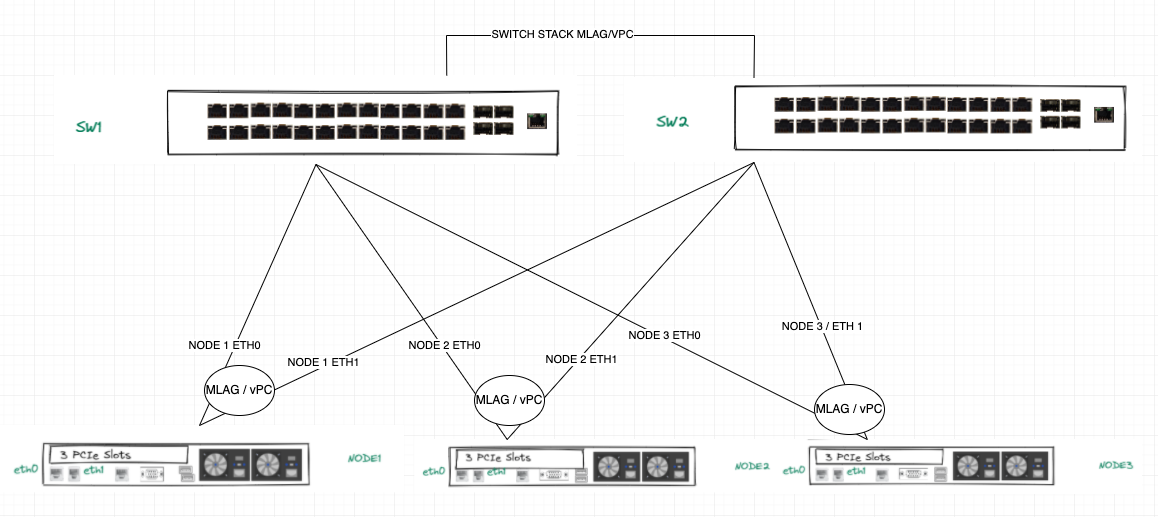

A client of my company has purchased 3 Lenovo HX nodes and 2 Cisco ToR switches. Each node is equipped with only two 10GbE ports (the LOM ones).

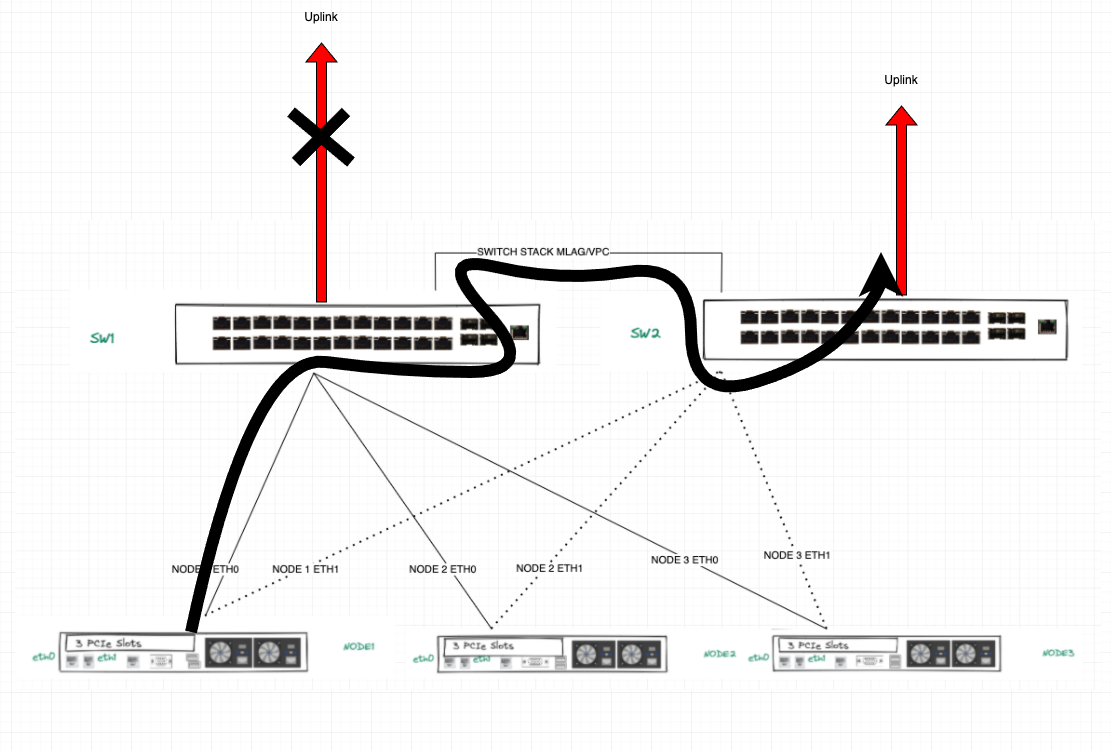

However, with only 2 ports, I don’t know how to set up the cluster so that there is redundancy.

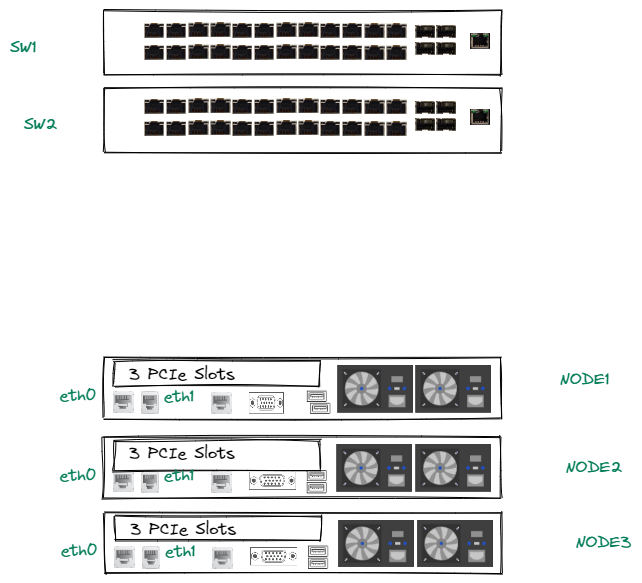

The current rack looks like this without any cabling yet:

The IPMI port will go to a Management Switch, so there’s no issue there. But so far, I am confused as to how to set up the cabling for the two interfaces: should eth0 go to a port on SW1 and eth1 go to a port on SW2 for each of the 3 servers? Or should both eth0 and eth1 go to the same switch?

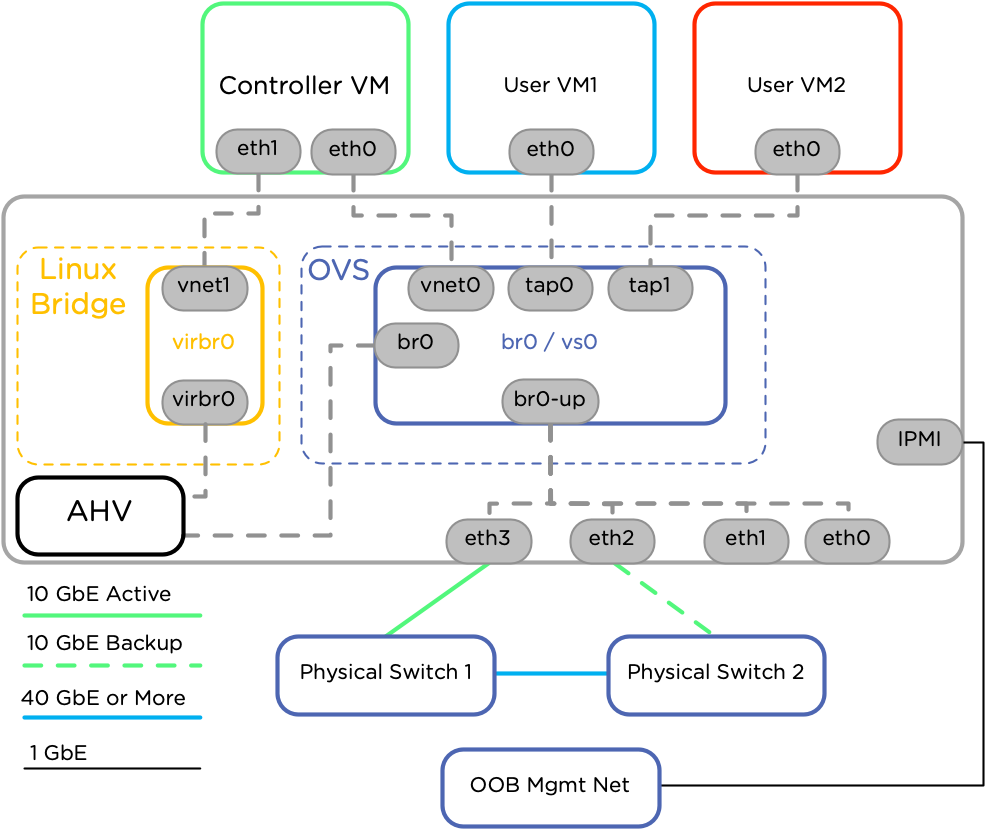

If I understood the Nutanix AHV Networking articles correctly, eth0 and eth1 will form a logical bond (br0-up) inside the br0 bridge. Since we only have 2 uplinks per node, I’m guessing the following (I’m going for the first scenario):

> eth0 will go to SW1Port1 (for example). SW1Port1 will be configured as a trunk port, on which the CVM/AHV VLAN and the user VM VLANs will be allowed.

> eth1 will go to SW2Port1, which will have the same config as SW1Port1.

> eth0 and eth1 will be in the default active-standby br0-up bond after the Foundation setup. (Except I’m assuming there won’t be any failover possible because the adapters are connected to different switches?)
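
To make the two cabling options concrete, here is a rough Python sketch of the plan. It only models the topology (which NIC plugs into which switch) and checks whether every node still has a cabled uplink if one switch goes down. The node names, the port numbering, and the helper functions are all hypothetical and just for illustration. It says nothing about how the br0-up bond itself behaves, which is the part I'm unsure about.

```python
# Hypothetical model of the cabling plan: node names and port numbers are
# examples only, not taken from any real configuration.
NODES = ["node1", "node2", "node3"]


def build_cabling(nodes, eth0_switch="SW1", eth1_switch="SW2"):
    """Map each node's NICs to a (switch, port) pair, one port number per node."""
    cabling = {}
    for i, node in enumerate(nodes, start=1):
        cabling[node] = {
            "eth0": (eth0_switch, f"Port{i}"),
            "eth1": (eth1_switch, f"Port{i + len(nodes)}"),
        }
    return cabling


def surviving_uplinks(cabling, failed_switch):
    """Return, per node, the NICs that are not cabled to the failed switch."""
    return {
        node: [nic for nic, (switch, _port) in links.items() if switch != failed_switch]
        for node, links in cabling.items()
    }


def survives_single_switch_failure(cabling):
    """True if every node keeps at least one cabled uplink when any one switch fails."""
    switches = {switch for links in cabling.values() for switch, _port in links.values()}
    return all(
        all(uplinks for uplinks in surviving_uplinks(cabling, switch).values())
        for switch in switches
    )


# Scenario 1: eth0 -> SW1, eth1 -> SW2 on every node.
split_cabling = build_cabling(NODES)
# Scenario 2: both NICs of every node on the same switch.
same_switch_cabling = build_cabling(NODES, eth0_switch="SW1", eth1_switch="SW1")

print("split across switches:", survives_single_switch_failure(split_cabling))
print("both on one switch:", survives_single_switch_failure(same_switch_cabling))
```

In this model, only the split layout leaves each node with a physical path when a switch is lost. What I can't tell from this is whether the bond will actually use that path.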

I’m aware this could be very simple, but I’m just very confused. If anyone has an idea about how best to set this up, I would appreciate it. Thanks a lot!