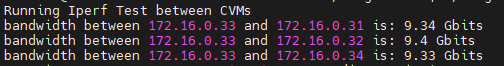

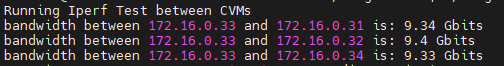

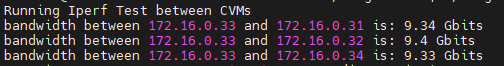

CVM on LACP networks: the physical switches use MLAG, but CVM bandwidth does not improve, and the maximum is only 10Gb.


Best answer by JeroenTielen


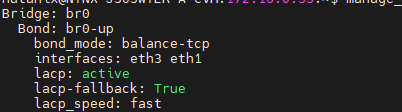

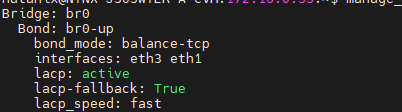

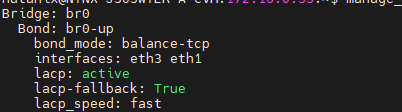

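This is correct. Here is some explanation: "Will configuring LACP increase throughput on the link?" In short, LACP balances traffic per flow by hashing header fields such as addresses and ports. Any single connection is therefore carried by one member link and tops out at that link's speed (10Gb here). Only the aggregate of many flows can exceed a single link's bandwidth.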
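To illustrate why a single stream stays at 10Gb, here is a minimal sketch of hash-based, per-flow member selection. It assumes a hypothetical two-member bond of 10Gb links and uses CRC32 over the address/port 4-tuple as a stand-in hash. Real switches and hosts use their own hash policies, and the IPs and ports below are made up for illustration.

```python
import zlib
from collections import Counter

LINK_SPEED_GBPS = 10  # assumed speed of each bond member link
NUM_LINKS = 2         # assumed two-member LACP bond (hypothetical)

def pick_member(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
                num_links: int = NUM_LINKS) -> int:
    """Stand-in layer3+4 style hash: the same 4-tuple always maps to the same link."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % num_links

def max_flow_throughput_gbps() -> int:
    # A single flow never spans members, so its ceiling is one link's speed.
    return LINK_SPEED_GBPS

def aggregate_ceiling_gbps(flows) -> int:
    # Many flows can spread over several members: ceiling = distinct links used * speed.
    used = {pick_member(*f) for f in flows}
    return len(used) * LINK_SPEED_GBPS

if __name__ == "__main__":
    one_flow = ("10.0.0.11", "10.0.0.12", 50000, 9000)  # hypothetical flow
    # Repeated lookups of the same flow always land on the same member link.
    print({pick_member(*one_flow) for _ in range(5)})
    many = [("10.0.0.11", "10.0.0.12", 50000 + i, 9000) for i in range(16)]
    print(Counter(pick_member(*f) for f in many))
    print(max_flow_throughput_gbps(), aggregate_ceiling_gbps(many))
```

The design point is that hashing keeps each flow's packets in order on one link, which is why bonding adds capacity across many connections rather than speeding up any single one.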
