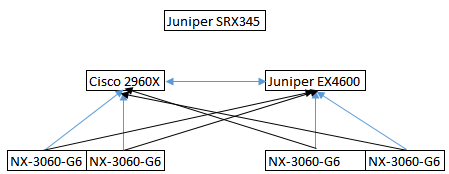

Hi, I'm planning to add 2 more NX-3060-G6 blocks with 2 nodes in each block. Currently I have 2 Nutanix NX-3060-G6 blocks with 2 nodes in each block.

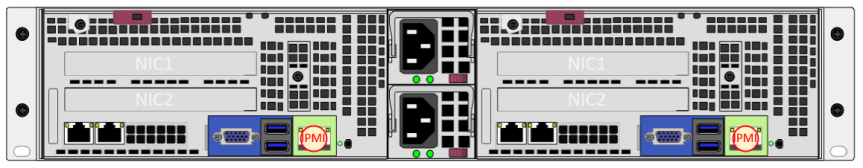

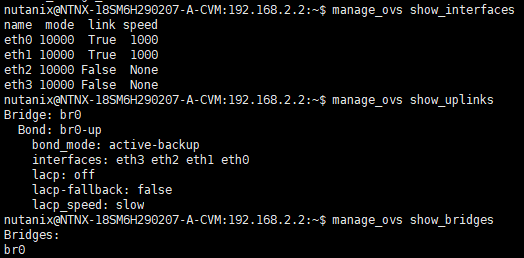

The current topology is 2 Nutanix blocks connected to a Cisco 2960X, with all eth interfaces connected to it. My question is: on the NX-3060-G6 there are 3 Ethernet interfaces, 1 for MGMT (IPMI) plus 2 other eth interfaces, and there are also 2 SFP+ ports. For networking purposes:

- Do we need to use both of those 2 eth interfaces on each node for it to work? Or do we only need to use 1 eth interface on each node?

- We’re adding 1 more switch, a Juniper EX4600. If we need to use both eth interfaces, can we split them, with 1 eth interface going to the Cisco 2960X and the other going to the EX4600?

- Can I have a different VLAN on each interface (excluding MGMT) that I connect to the switch?

Sorry if my question isn’t clear enough, but I hope you understand it. Thank you.