All,

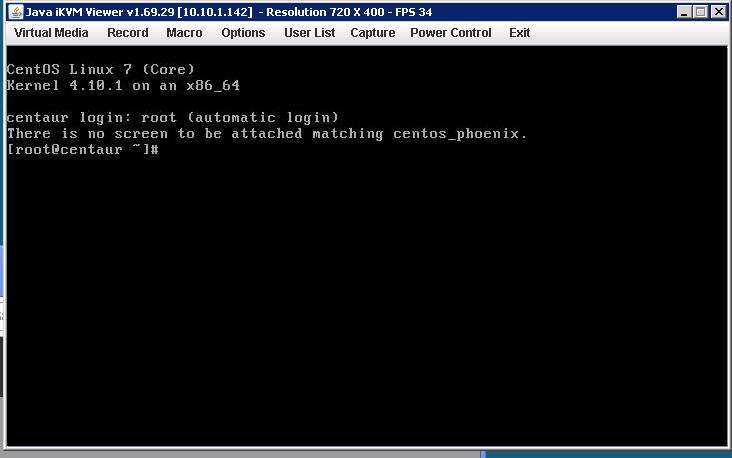

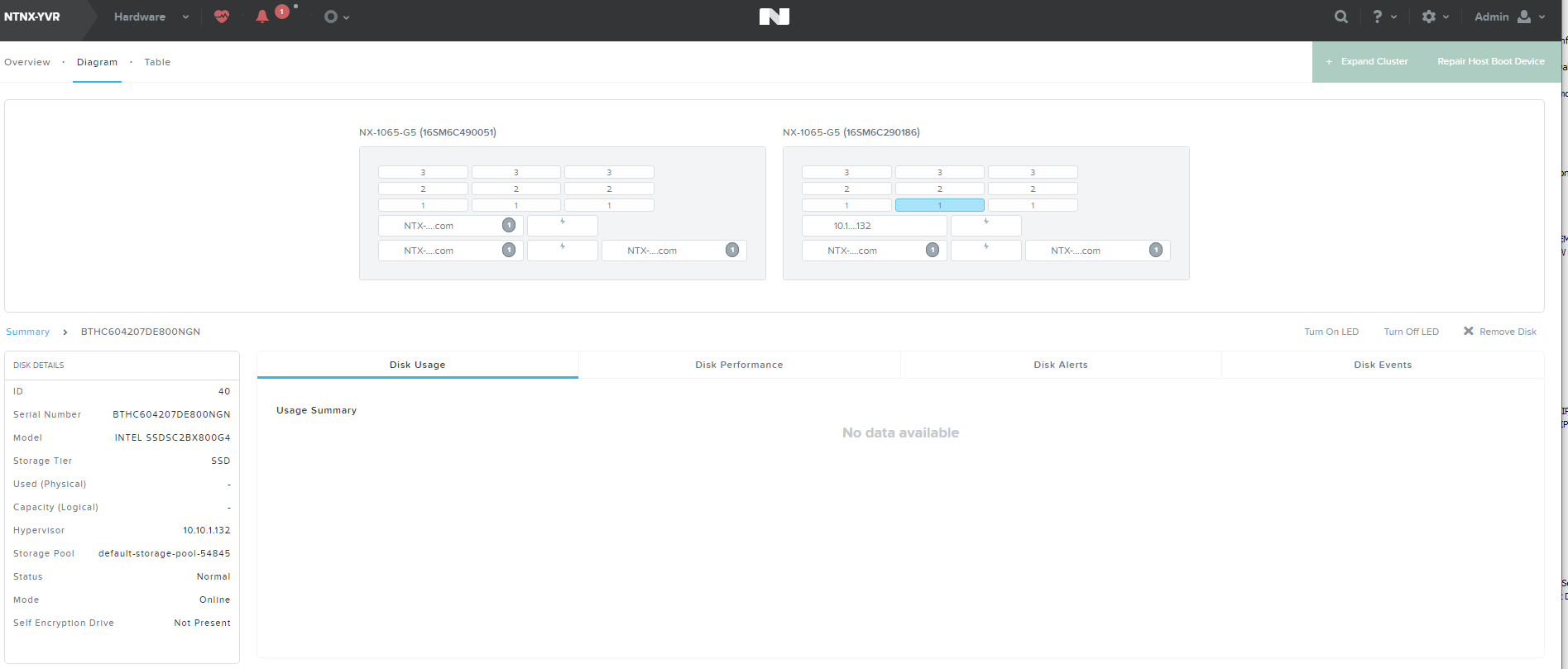

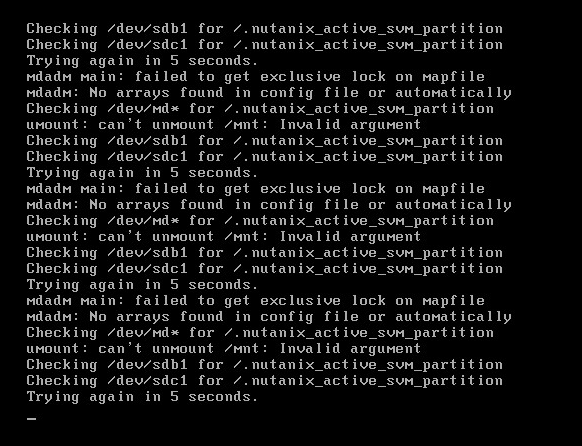

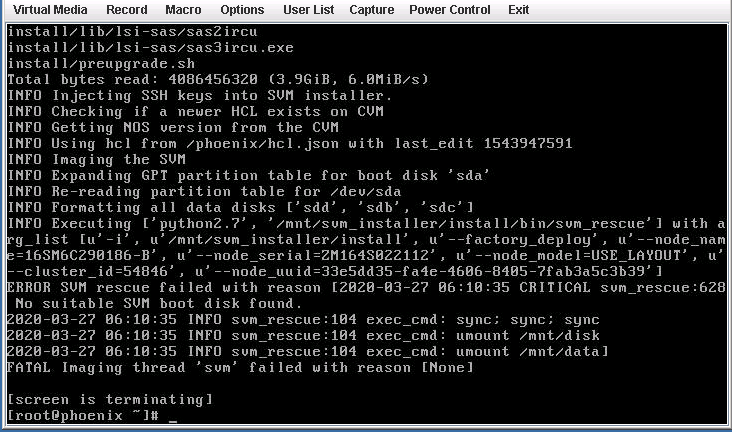

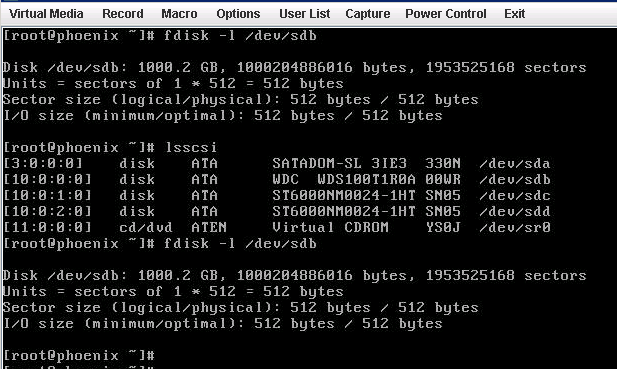

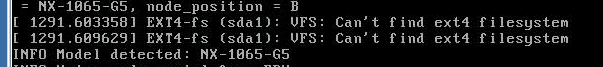

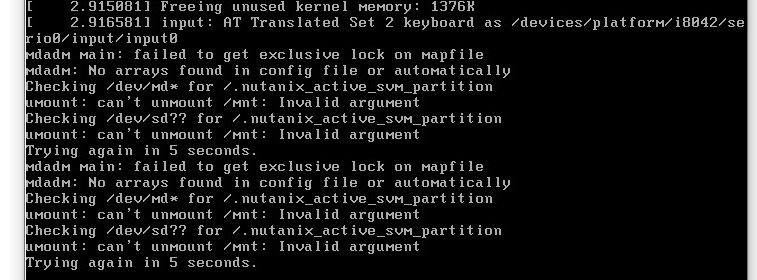

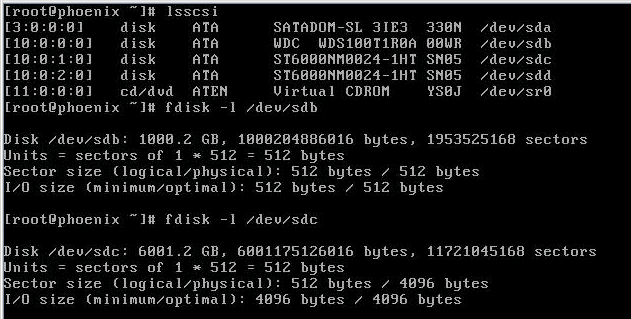

I have a 6-node 1065 system in 2 blocks. Recently one of the CVMs (node 2, block A) crashed. When rebooted, it just went into a boot loop. On diagnosis, it seems the SSD (not the SATADOM) had failed, so we replaced it. When we try to boot the CVM now, it still just loops.

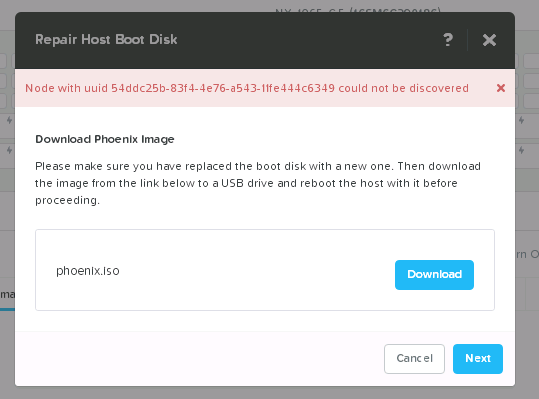

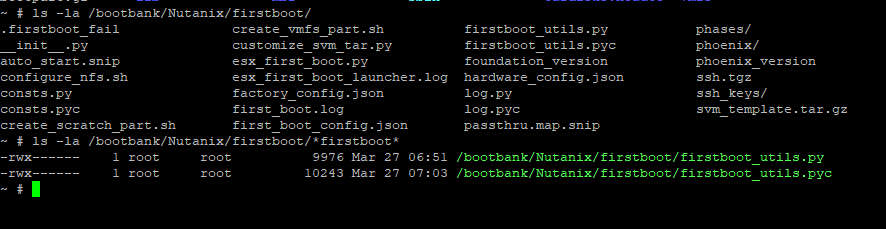

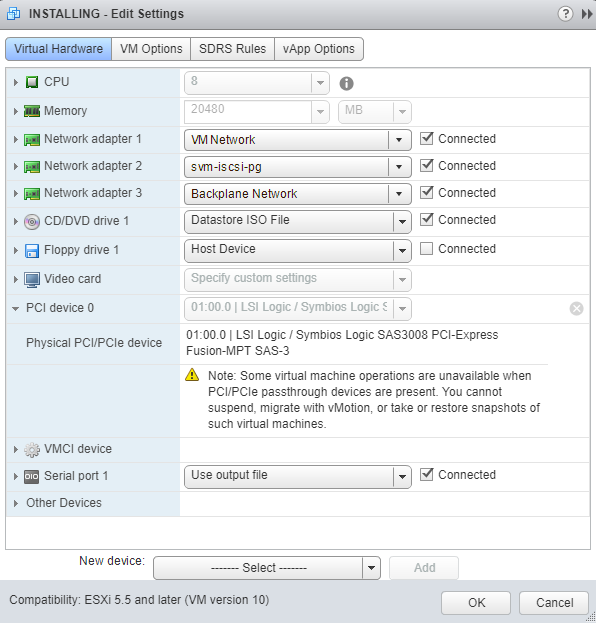

We were told to boot that node with Phoenix, which the cluster provided for me to download. When I do that, Phoenix doesn’t load and I get errors instead.

I’m looking for suggestions on how to get the node back to 100%. At this point (and throughout), ESXi on the SATADOM has booted fine, and I guess if I didn’t care about the storage side I could just ignore this, but I’d like the system to be fully healthy.

Any suggestions on how to get the CVM working again would be appreciated.

Thank you

Johan