Ever wondered which main services and components make up a Nutanix cluster?

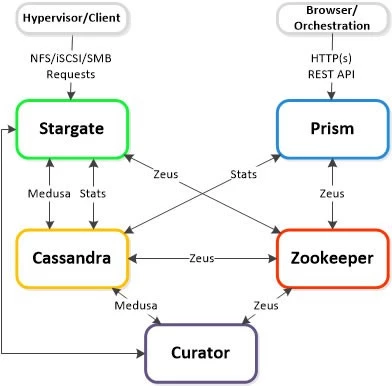

The following is a simplified view of the main Nutanix cluster components.

All components run on multiple nodes in the cluster, and each one depends on connectivity with its peers, that is, the instances of the same component running on other nodes.

Zeus

Key Role: Access interface for Zookeeper

· A key element of a distributed system is a method for all nodes to store and update the cluster's configuration, and Zeus provides that method. The configuration includes details about the physical components in the cluster, such as hosts and disks, and logical components, such as storage containers. The state of these components, including their IP addresses, capacities, and data replication rules, is also stored in the cluster configuration.

· Zeus is the Nutanix library that all other components use to access the cluster configuration, which is currently implemented using Apache Zookeeper.

Medusa

Key Role: Access interface for Cassandra

· Distributed systems that store data for other systems (for example, a hypervisor that hosts virtual machines) must have a way to keep track of where that data is. In the case of a Nutanix cluster, it is also important to track where the replicas of that data are stored.

· Medusa is a Nutanix abstraction layer that sits in front of the database that holds this metadata. The database is distributed across all nodes in the cluster, using a modified form of Apache Cassandra.
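To make the idea of replica tracking concrete, here is a minimal sketch of a metadata map that records which nodes hold the replicas of each piece of data. It is a toy illustration only, not Medusa's actual interface: the `ReplicaMap` class, its methods, the block IDs, and the node names are all hypothetical.

```python
from typing import Dict, List


class ReplicaMap:
    """Toy metadata map (hypothetical): block ID -> nodes holding a replica."""

    def __init__(self, replication_factor: int = 2) -> None:
        self.replication_factor = replication_factor
        self._locations: Dict[str, List[str]] = {}

    def record_write(self, block_id: str, nodes: List[str]) -> None:
        """Remember which nodes hold the replicas of a block."""
        if len(set(nodes)) != self.replication_factor:
            raise ValueError(
                "each block needs one replica on each of "
                f"{self.replication_factor} distinct nodes"
            )
        self._locations[block_id] = list(nodes)

    def locate(self, block_id: str) -> List[str]:
        """Return the nodes holding a block, or an empty list if unknown."""
        return list(self._locations.get(block_id, []))

    def blocks_on(self, node: str) -> List[str]:
        """Return the blocks that would lose a replica if this node failed."""
        return sorted(b for b, nodes in self._locations.items() if node in nodes)


# Hypothetical sample data: two blocks, each stored on two nodes.
replicas = ReplicaMap(replication_factor=2)
replicas.record_write("block-1", ["node-a", "node-b"])
replicas.record_write("block-2", ["node-b", "node-c"])

print(replicas.locate("block-1"))    # where to read block-1 from
print(replicas.blocks_on("node-b"))  # blocks affected if node-b fails
```

In a real cluster this map is itself distributed across the nodes, which is the part the toy version leaves out.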

Stargate

Key Role: Data I/O manager

· A distributed system that presents storage to other systems (such as a hypervisor) needs a unified component for receiving and processing the data sent to it. The Nutanix cluster has a large software component called Stargate that manages this responsibility.

· From the perspective of the hypervisor, Stargate is the main point of contact for the Nutanix cluster. All read and write requests are sent across vSwitchNutanix to the Stargate process running on that node.

· Stargate depends on Medusa to gather metadata and Zeus to gather cluster configuration data.

· Stargate is responsible for all data management and I/O operations and is the main interface from the hypervisor (via NFS, iSCSI, or SMB). This service runs on every node in the cluster in order to serve localized I/O.

Cassandra

Key Role: Distributed metadata store

· Cassandra stores and manages all of the cluster metadata in a distributed ring-like manner based upon a heavily modified Apache Cassandra. The Paxos algorithm is utilized to enforce strict consistency.

· This service runs on every node in the cluster. Cassandra is accessed via an interface called Medusa.

· Cassandra depends on Zeus to gather information about the cluster configuration.

Zookeeper

Key Role: Cluster configuration manager

· Zookeeper stores all of the cluster configuration including hosts, IPs, state, etc. and is based upon Apache Zookeeper. This service runs on three or five nodes, depending on the redundancy factor in the cluster, one of which is elected as a leader. The leader receives all requests and forwards them to its peers. If the leader fails to respond, a new leader is automatically elected. Zookeeper is accessed via an interface called Zeus.

· Zookeeper has no dependencies, meaning that it can start without any other cluster components running.
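The choice of three or five Zookeeper nodes lines up with simple majority arithmetic. The sketch below assumes the standard majority-quorum rule of Apache Zookeeper applies here (more than half of the members must be reachable); the function names are illustrative.

```python
def quorum_size(ensemble_size: int) -> int:
    """Smallest number of members that forms a strict majority."""
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    """Members that can fail while a majority is still available."""
    return ensemble_size - quorum_size(ensemble_size)


for size in (3, 5):
    print(
        f"{size} nodes: quorum of {quorum_size(size)}, "
        f"survives {tolerated_failures(size)} failure(s)"
    )
```

Under that assumption, three nodes keep a majority after one failure and five nodes keep it after two.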

Curator

Key Role: MapReduce cluster management and cleanup

· Curator is responsible for managing and distributing tasks throughout the cluster, including disk balancing, proactive scrubbing, and many other tasks.

· Curator runs on every node and is controlled by an elected Curator Leader that is responsible for task and job delegation. Curator has two scan types: a full scan, which occurs roughly every 6 hours, and a partial scan, which occurs every hour.

· Curator depends on Zeus to check node availability and Medusa to gather data. It then sends commands to Stargate based on the information collected.
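For readers unfamiliar with the MapReduce pattern mentioned in Curator's key role, the following toy example shows the three phases (map, shuffle, reduce) applied to a disk-balancing style question: which nodes hold more data than average? It is not Curator's algorithm; the records, node names, and the "above average" rule are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

# Hypothetical sample data: (node, disk, used_gib) records.
RECORDS = [
    ("node-a", "disk-1", 700),
    ("node-a", "disk-2", 650),
    ("node-b", "disk-1", 200),
    ("node-b", "disk-2", 250),
    ("node-c", "disk-1", 400),
    ("node-c", "disk-2", 500),
]


def map_phase(records: Iterable[Tuple[str, str, int]]) -> Iterator[Tuple[str, int]]:
    """Map: emit one (node, used_gib) pair per disk record."""
    for node, _disk, used in records:
        yield node, used


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by their key (the node)."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


def reduce_phase(grouped: Dict[str, List[int]]) -> Dict[str, int]:
    """Reduce: sum the usage of every disk on each node."""
    return {node: sum(values) for node, values in grouped.items()}


def above_average(usage: Dict[str, int]) -> List[str]:
    """Nodes whose total usage is strictly above the cluster mean."""
    mean = sum(usage.values()) / len(usage)
    return sorted(node for node, used in usage.items() if used > mean)


usage = reduce_phase(shuffle(map_phase(RECORDS)))
print(usage)
print(above_average(usage))
```

In a distributed setting the map and reduce steps would run in parallel on many nodes, which is why a leader is needed to delegate the work.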

Prism

Key Role: UI and API

· Prism is the management gateway for components and administrators to configure and monitor the Nutanix cluster. This includes nCLI, the HTML5 UI, and the REST API.

· Prism runs on every node in the cluster and uses an elected leader like all components in the cluster. All requests are forwarded to the leader using Linux iptables. This allows access to Prism using any CVM IP address.

· Prism communicates with Zeus for cluster configuration data and Cassandra for statistics to present to the user. It also communicates with the ESXi hosts for VM status and related information.

These are only some of the essential services that make up the Controller VM (CVM) functionality. For more information on all the services and the various Nutanix cluster components, refer to the portal documentation.
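The dependencies described in the sections above can be written down as a small graph and sorted so that every service appears after the services it relies on. This is only a reading of the statements in this article, with Zeus mapped to Zookeeper and Medusa mapped to Cassandra; it is not the actual start sequence used on a CVM. It assumes Python 3.9 or later for `graphlib`.

```python
from graphlib import TopologicalSorter

# Service -> services it depends on, as described in this article.
# Zeus is the interface to Zookeeper; Medusa is the interface to Cassandra.
DEPENDS_ON = {
    "Zookeeper": set(),                       # no dependencies
    "Cassandra": {"Zookeeper"},               # reads cluster configuration
    "Stargate": {"Cassandra", "Zookeeper"},   # metadata + configuration
    "Curator": {"Cassandra", "Zookeeper"},    # also sends commands to Stargate
    "Prism": {"Cassandra", "Zookeeper"},      # statistics + configuration
}

# static_order() yields each service only after all of its dependencies.
order = list(TopologicalSorter(DEPENDS_ON).static_order())
print(order)
```

Zookeeper always comes first and Cassandra second; the relative order of the remaining three is not constrained by the dependencies listed here.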

Pro tip:

Check service status using the following commands from any CVM:

genesis status

cluster status

Use KB 1518 to check the health of the various services.
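If you want to capture the output of both status commands in one go, a small wrapper like the one below is one option. It is a sketch that assumes Python 3.7 or later is available where you run it and that `genesis` and `cluster` are on the PATH, as they are on a CVM; the helper names are hypothetical, and the script makes no attempt to parse the output, since that format is not described here.

```python
import subprocess
from typing import Callable, Dict, List, Tuple

# The two commands from the tip above.
STATUS_COMMANDS: List[List[str]] = [["genesis", "status"], ["cluster", "status"]]


def run_command(argv: List[str]) -> Tuple[int, str]:
    """Run one command and return (exit code, combined stdout/stderr)."""
    try:
        proc = subprocess.run(
            argv, stdout=subprocess.PIPE, stderr=subprocess.STDOUT, text=True
        )
    except OSError as exc:
        # The command is missing or cannot be executed (for example, not on a CVM).
        return 127, f"could not run {' '.join(argv)}: {exc}"
    return proc.returncode, proc.stdout


def collect_status(
    runner: Callable[[List[str]], Tuple[int, str]] = run_command,
) -> Dict[str, Tuple[int, str]]:
    """Run every status command and key the results by the command line."""
    return {" ".join(argv): runner(argv) for argv in STATUS_COMMANDS}


if __name__ == "__main__":
    for name, (code, output) in collect_status().items():
        print(f"== {name} (exit code {code}) ==")
        print(output)
```

The `runner` parameter exists so the collection logic can be exercised without a cluster by passing in a stand-in function.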