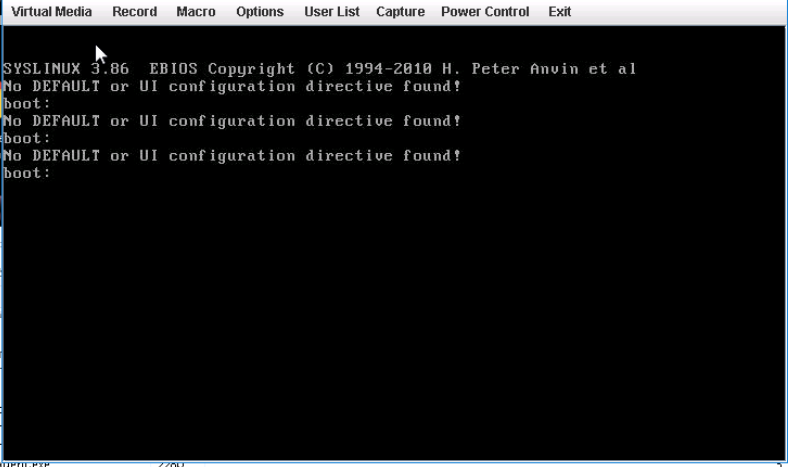

Using LCM, I updated the firmware on one of our hosts from G4G5T6.0 to G4G5T8.0 (BMC 3.64 to 3.65), and since then all I get is a black screen with the words “boot error”.

I can get into the BIOS via IPMI and see the boot device, “sSATA P3: SATADOM-SL 3ME”, but the host is just not booting from it anymore. I also could not find any documentation on how to troubleshoot this.

Could someone point me in the right direction, please? Thanks!

Note: of the 6 hosts in our environment, 3 have already updated fine and nothing else has changed, so I'm a little confused. I’ve also been updating one host at a time.