This post was authored by Mike McGhee, Director TMM - NC2, NUS, NDB at Nutanix

Nutanix has long supported a feature called a volume group. A volume group is a logical construct used to create and group virtual disks so that storage can be presented to VMs or physical servers. Database instances such as Oracle (including RAC) and Microsoft SQL Server (including Failover Cluster Instances, or FCI) are commonly used in conjunction with volume groups. Volume groups were designed to serve several purposes, including:

- Decoupling VM and storage lifecycles

- Snapshot and storage replication based on volume group (virtual disk) boundaries instead of VM boundaries

- Storage presentation for physical (bare metal) workloads

- Sharing storage for clustering between multiple VMs or physical servers

- Scaling up storage performance for applications running in a single VM or physical server

It is this last point of scaling up performance where I’d like to focus. For volume groups directly attached to a VM, Nutanix makes it possible to load balance virtual disk ownership across all nodes in an AHV cluster. I realize there’s a lot to unpack from that last sentence, so let’s take a step back and go through how a volume group works by default and how it operates when configured for load balancing.

Volume Group Basics

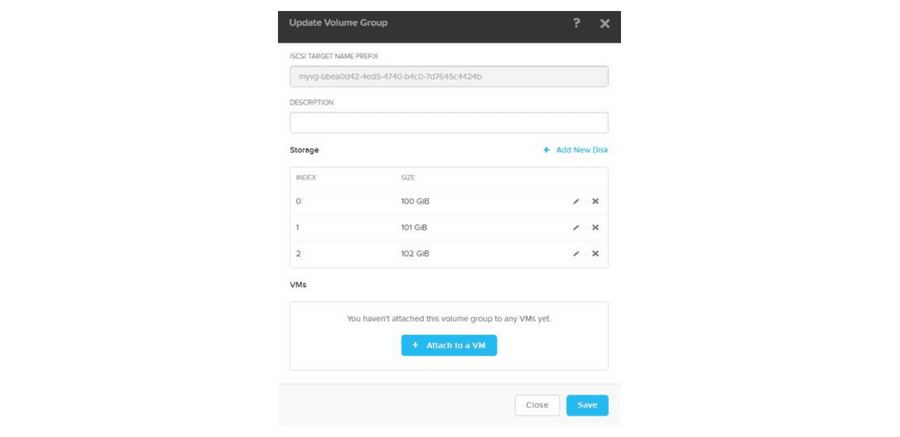

A volume group is really just a collection of virtual disks which are attached to VMs or physical servers.

The attachment method supported by Nutanix clusters running ESXi or Hyper-V is based on iSCSI. This combination of volume groups and iSCSI is called Nutanix Volumes. With Volumes, the operating system in the VM or physical server uses a software iSCSI initiator to connect to a volume group and access its disks, together with physical or virtual NICs. The Nutanix Bible has an excellent overview of Volumes if you’d like to dig further. We also have a Best Practices Guide if you’re planning to implement ABS (Acropolis Block Services, the earlier name for Volumes).

For this post, however, I want to focus on AHV, which not only supports Volumes, but also supports another method of connecting to a volume group called VM attachment.

Volume Group VM Attachment

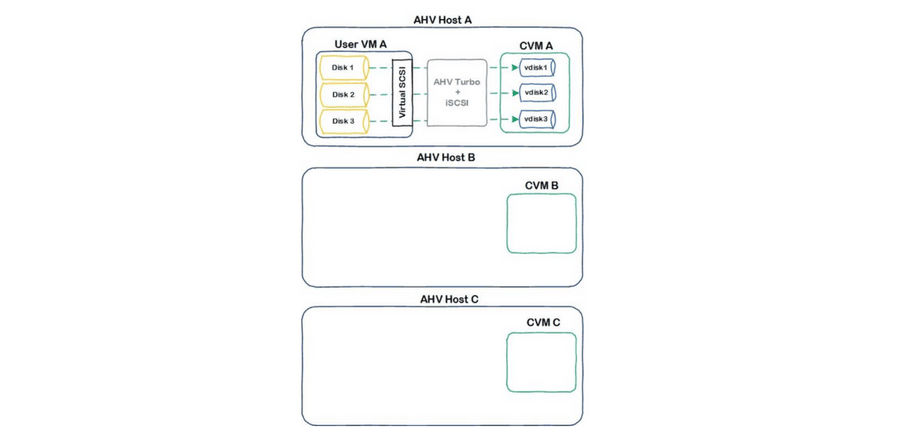

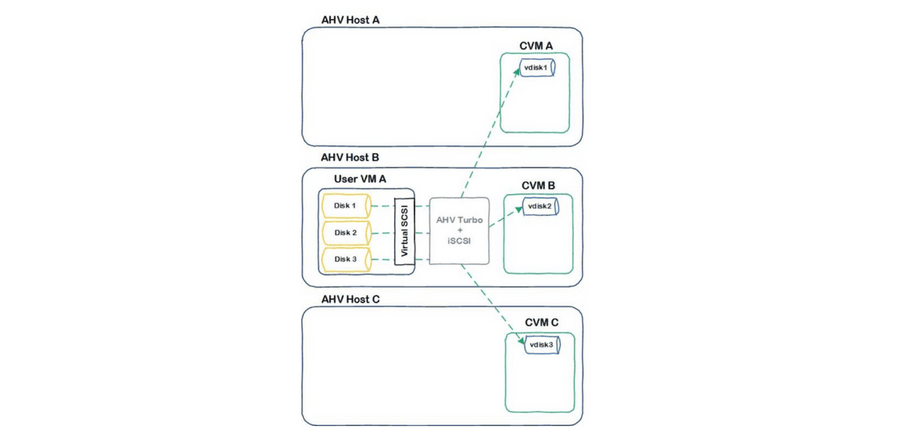

When attaching a volume group to a VM directly with AHV, the virtual disks are presented to the guest operating system over the virtual SCSI controller. The virtual SCSI controller leverages AHV Turbo and iSCSI under the covers to connect to the Nutanix Distributed Storage Fabric. By default, the virtual disks for a volume group directly attached to a VM are hosted by the local Nutanix CVM.

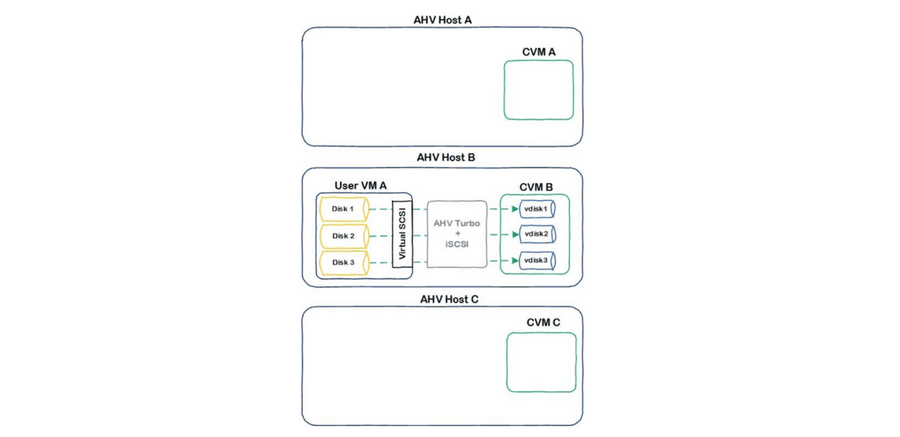

If the VM is live migrated to another AHV host, the volume group attachments will migrate as well and again be hosted by the local CVM.
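
The default behavior can be made concrete with a toy Python model; it is not Nutanix code. Every virtual disk in a directly attached volume group is hosted by the CVM on whichever node the VM currently runs on. A live migration therefore simply re-points all disks at the new node’s CVM. The `VolumeGroup` class, the `default_owners` function, and the convention of naming a CVM after its node are hypothetical and used for illustration only.

```python
from dataclasses import dataclass


@dataclass
class VolumeGroup:
    """Toy volume group: a name plus the virtual disks it contains (hypothetical model)."""
    name: str
    disks: list[str]


def default_owners(vg: VolumeGroup, vm_host: str) -> dict[str, str]:
    """Default VM attachment: every disk is hosted by the CVM local to the VM's host.

    Assumption for illustration: a CVM is identified by the name of the node it runs on.
    """
    return {disk: vm_host for disk in vg.disks}


vg = VolumeGroup("sql-data", ["disk0", "disk1", "disk2"])
print(default_owners(vg, "node-a"))  # VM running on node-a: all disks on node-a's CVM
print(default_owners(vg, "node-b"))  # after live migration: all disks on node-b's CVM
```

Every disk always maps to a single CVM, which is what preserves data locality. It also caps the VM at one CVM’s worth of storage processing.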

Keeping VM attachments local to the node where the VM is running helps maintain data locality and avoids unnecessary network utilization. In some cases, however, a VM’s storage workload could benefit from accessing multiple CVMs concurrently. This is where the new volume group load balancer functionality comes into play.

Volume Group VM Attachment With Load Balancing

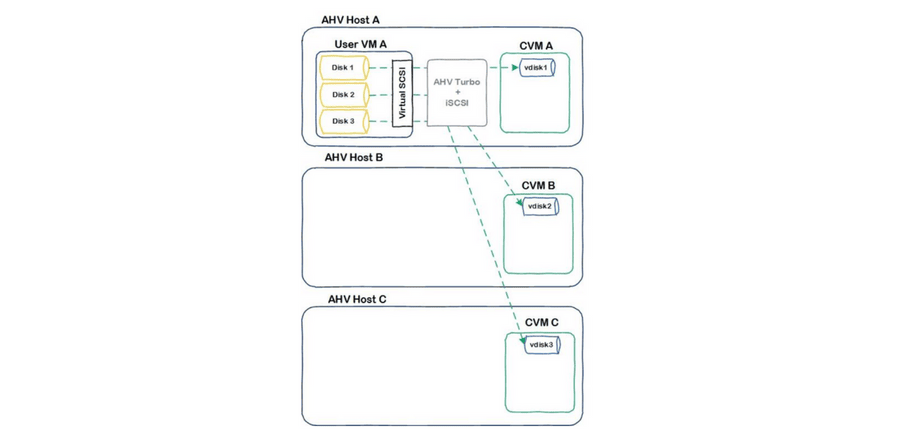

A unique aspect of AHV is that each virtual disk has its own iSCSI connection. These connections are preferably hosted on the CVM local to where the VM is issuing storage IO, but they can be redirected to and hosted by any CVM in the cluster. Take, for example, a rolling upgrade in which a CVM is rebooted. Each virtual disk hosted by the upgrading CVM is temporarily redirected to other nodes in the cluster. This core capability of transparently redirecting virtual disk sessions is a building block for both Volumes and the volume group load balancer.
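
The redirection idea can be sketched as a toy Python function. The post doesn’t say how target CVMs are chosen, so this sketch assumes the rebooting CVM’s sessions are spread round-robin over the surviving CVMs. `redirect_sessions` and the CVM names are hypothetical.

```python
from itertools import cycle


def redirect_sessions(owners: dict[str, str], down_cvm: str, cvms: list[str]) -> dict[str, str]:
    """Return a new disk -> CVM map with down_cvm's sessions moved to other CVMs.

    Assumption (illustrative only): surviving CVMs are picked round-robin.
    """
    survivors = [cvm for cvm in cvms if cvm != down_cvm]
    if not survivors:
        raise ValueError("no other CVM available to host the sessions")
    next_survivor = cycle(survivors)
    # Only sessions on the rebooting CVM move; all others keep their current owner.
    return {
        disk: next(next_survivor) if cvm == down_cvm else cvm
        for disk, cvm in owners.items()
    }


owners = {"disk0": "cvm-a", "disk1": "cvm-a", "disk2": "cvm-b"}
print(redirect_sessions(owners, "cvm-a", ["cvm-a", "cvm-b", "cvm-c"]))
# {'disk0': 'cvm-b', 'disk1': 'cvm-c', 'disk2': 'cvm-b'}
```

Because the redirection is temporary, a fuller model would also move sessions back once the CVM returns. That step is omitted here.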

When enabled for a volume group, the load balancer will distribute virtual disk ownership across all CVMs in a cluster.
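
The post doesn’t spell out the load balancing algorithm itself. As a stand-in, the toy Python sketch below spreads virtual disks across CVMs round-robin. That is enough to show the contrast with the default case, where every disk lands on the local CVM. `balanced_owners` and the CVM names are hypothetical.

```python
def balanced_owners(disks: list[str], cvms: list[str]) -> dict[str, str]:
    """Round-robin stand-in for the load balancer: disk i goes to CVM i mod N."""
    if not cvms:
        raise ValueError("at least one CVM is required")
    return {disk: cvms[i % len(cvms)] for i, disk in enumerate(disks)}


disks = [f"disk{i}" for i in range(6)]
print(balanced_owners(disks, ["cvm-a", "cvm-b", "cvm-c", "cvm-d"]))
```

With six disks and four CVMs, each CVM hosts one or two disk sessions instead of one CVM hosting all six. A single VM’s IO can then be served by several CVMs in parallel.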

AHV Turbo threads work in conjunction with the virtual disk iSCSI connections to drive the storage workload throughout the cluster. Should a VM with load balanced sessions migrate to another node in the cluster, AHV Turbo threads on the new node will take ownership of the iSCSI connections. The virtual disks themselves continue to be hosted by their preferred CVMs, as determined by the load balancing algorithm.
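
A small toy model captures the difference between default and load-balanced attachments during a live migration. The names `Attachment` and `live_migrate` are hypothetical, and CVMs are named after the node they run on. `vm_host` stands for the node whose AHV Turbo threads drive the disks’ iSCSI connections, and `owners` maps each disk to the CVM hosting its session.

```python
from dataclasses import dataclass


@dataclass
class Attachment:
    vm_host: str            # node whose AHV Turbo threads drive the iSCSI connections
    owners: dict[str, str]  # virtual disk -> CVM hosting that disk's session


def live_migrate(att: Attachment, new_host: str, load_balanced: bool) -> Attachment:
    """Model where disk sessions end up after the VM moves to new_host."""
    if load_balanced:
        # Turbo threads on the new node take over the connections,
        # but each disk stays on its preferred CVM.
        owners = dict(att.owners)
    else:
        # Default: every disk follows the VM to the new node's local CVM.
        owners = {disk: new_host for disk in att.owners}
    return Attachment(new_host, owners)


att = Attachment("node-a", {"disk0": "node-a", "disk1": "node-b", "disk2": "node-c"})
print(live_migrate(att, "node-c", load_balanced=True).owners)   # owners unchanged
print(live_migrate(att, "node-c", load_balanced=False).owners)  # all on node-c
```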

If desired, certain CVMs can be excluded from load balancing. This is helpful to avoid hosting sessions on nodes intended for other workloads. The Acropolis Dynamic Scheduler (ADS) also helps move virtual disk sessions if it detects CVM contention. If a given CVM uses more than 85 percent of its CPU for storage traffic (as measured by the Nutanix Stargate process), ADS automatically moves specific iSCSI sessions to other CVMs in the cluster.
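
The exclusion list and the ADS threshold can be illustrated together in a toy Python model. Only the 85 percent figure comes from the post. The post doesn’t describe how ADS picks which sessions to move or where they go, so the policy below is an assumption: move one session off each CVM over the threshold, to the least-loaded eligible CVM. `ads_pass` is a hypothetical name.

```python
ADS_CPU_THRESHOLD = 0.85  # from the post: >85% of CVM CPU used by Stargate for storage


def ads_pass(owners: dict[str, str], stargate_cpu: dict[str, float],
             excluded: frozenset[str] = frozenset()) -> dict[str, str]:
    """One illustrative rebalancing pass over a disk -> CVM ownership map.

    Assumed policy: for each CVM above the threshold, move one of its sessions to the
    least-loaded CVM that is neither excluded nor itself above the threshold.
    """
    new_owners = dict(owners)
    # Candidate targets, least loaded first; excluded CVMs never receive sessions.
    targets = sorted(
        (cvm for cvm, load in stargate_cpu.items()
         if cvm not in excluded and load <= ADS_CPU_THRESHOLD),
        key=lambda cvm: stargate_cpu[cvm],
    )
    hot = sorted(cvm for cvm, load in stargate_cpu.items() if load > ADS_CPU_THRESHOLD)
    for i, cvm in enumerate(hot):
        hosted = [disk for disk, owner in new_owners.items() if owner == cvm]
        if targets and hosted:
            # If several CVMs are hot, spread their moves across the targets.
            new_owners[hosted[0]] = targets[i % len(targets)]
    return new_owners


owners = {"disk0": "cvm-a", "disk1": "cvm-a", "disk2": "cvm-b"}
cpu = {"cvm-a": 0.92, "cvm-b": 0.40, "cvm-c": 0.30}
print(ads_pass(owners, cpu, excluded=frozenset({"cvm-c"})))
# {'disk0': 'cvm-b', 'disk1': 'cvm-a', 'disk2': 'cvm-b'}
```

Without the exclusion, disk0 would have moved to cvm-c, the least-loaded CVM. With cvm-c excluded, it lands on cvm-b instead.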

To enable load balancing, use ACLI to create the volume group with the load balancing attribute set to true. For example, where vg_name is the name of the new volume group:

acli vg.create vg_name load_balance_vm_attachments=true

You can also update an existing volume group to use load balancing, but note that you’ll first need to detach it from any VMs. Then run the following, substituting the volume group’s name for vg_name:

acli vg.update vg_name load_balance_vm_attachments=true

Final Thought

Most applications will easily meet their storage performance requirements with the Nutanix default configuration. For the most demanding requirements, volume group load balancing in combination with AHV Turbo helps scale-up workloads keep pace. Some impressive test results have been published, including a single VM driving over 1 million IOPS.

Disclaimer: This blog may contain links to external websites that are not part of Nutanix.com. Nutanix does not control these sites and disclaims all responsibility for the content or accuracy of any external site. Our decision to link to an external site should not be considered an endorsement of any content on such site.

©️ 2018 Nutanix, Inc. All rights reserved. Nutanix, the Nutanix logo and all other products and features named herein are registered trademarks or trademarks of Nutanix, Inc. in the United States and other countries. All other brand names mentioned herein are for identification purposes only and may be the trademarks of their respective holder(s).