In the first post in this series, I compared the actual usable capacity of Nutanix ADSF and VMware vSAN on the same hardware.

We saw approximately 30-40% more usable capacity delivered by Nutanix.

Next, we compared Deduplication & Compression technologies where we learned Nutanix has outright capacity efficiency, flexibility, resiliency and performance advantages over vSAN.

Then we looked at Erasure Coding where we learned the Nutanix implementation (called EC-X) is both dynamic & flexible by balancing performance and capacity efficiencies in real-time.

vSAN, on the other hand, has a rudimentary “All or nothing” approach which can lead to higher front end impact and does not dynamically apply Erasure Coding to the most suitable data.

Now let’s learn how both platforms can scale storage capacity:

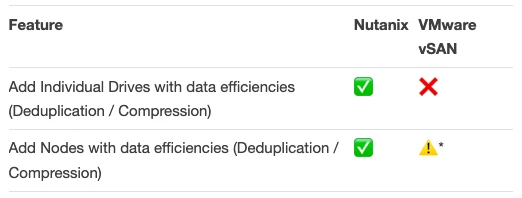

Here we see from a typical “tick-box” comparison that both platforms can add individual drives or additional nodes to scale capacity. As you’ve probably gathered from my previous few posts, I’ll now highlight that the above comparison is very misleading.

Customers have numerous critical factors to consider when choosing a platform OR scaling an existing implementation.

vSAN Problem #1 – When a drive is added to a disk group with deduplication and compression enabled, the newly added drive does not automatically participate in the deduplication and compression process.

The new drive is used to store data, but that data is not deduplicated and compressed. The disk group must be deleted and recreated so that all of the capacity drives in the disk group (including the new drive) store deduplicated and compressed data.

With Nutanix, when drives are added to one or more nodes, the capacity becomes available almost immediately and all the configured data efficiency technologies apply without the need for reformatting or bulk movements of data.

Nutanix does not have the constraint of disk groups so there is also no requirement to remove disk groups and recreate them for any reason.

Now let’s learn how both platforms can scale storage capacity with data efficiencies:

* For the capacity to be utilised, rebalancing must be performed as we discuss in Problem #3.

Here we see a clear problem when scaling vSAN capacity with individual drives: the vSAN environment does not benefit from Deduplication and Compression without manual, high-impact intervention from the administrator (recreating disk groups).

This makes scaling capacity on vSAN by adding more drives to existing nodes unattractive and further reduces the potential value of vSAN’s Compression and Deduplication as we’ve discussed previously.

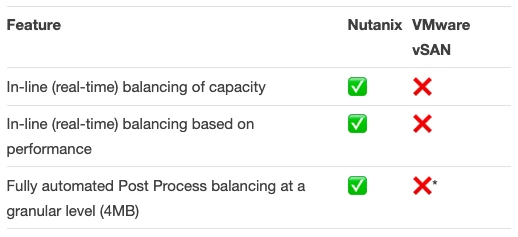

vSAN Problem #2 – vSAN balances data/capacity at a large “object” level which can be up to 255GB.

This forces vSAN customers to use vSAN Cluster Rebalancing, where the cluster initiates a rebalance when it hits 80% utilisation (i.e. reactive balancing), OR to configure Automatic Rebalancing, which according to VMware can impact performance and may need to be turned off:

vSAN automatically rebalances data on the disk groups by default. You can configure settings for automatic rebalancing.

Your vSAN cluster can become unbalanced due to uneven I/O patterns to devices, or when you add hosts or capacity devices. If the cluster becomes unbalanced, vSAN automatically rebalances the disks. This operation moves components from over-utilized disks to under-utilized disks.

You can enable or disable automatic rebalance, and configure the variance threshold for triggering an automatic rebalance. If any two disks in the cluster have a capacity variance that meets the rebalancing threshold, vSAN begins rebalancing the cluster.

Disk rebalancing can impact the I/O performance of your vSAN cluster. To avoid this performance impact, you can turn off automatic rebalance when peak performance is required.

Reference: https://docs.vmware.com/en/VMware-vSphere/6.7/com.vmware.vsphere.vsan-monitoring.doc/GUID-968C05CA-FE2C-45F7-A011-51F5B53BCBF9.html
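The trigger condition in the documentation quoted above ("any two disks in the cluster have a capacity variance that meets the rebalancing threshold") can be sketched in a few lines of Python. This is only an illustration of the rule as worded, not vSAN's actual code; the function name, the sample utilisation figures and the threshold value of 30 are assumptions chosen for the example.

```python
def needs_rebalance(disk_used_pct, variance_threshold_pct):
    """Return True if any two disks differ in utilisation by at least the threshold.

    The largest gap between any pair of disks is always the gap between the
    fullest and the emptiest disk, so only those two need to be compared.
    """
    return max(disk_used_pct) - min(disk_used_pct) >= variance_threshold_pct


# Hypothetical utilisation (%) of four capacity disks, the last one newly added.
disks = [72.0, 70.0, 68.0, 0.0]
print(needs_rebalance(disks, variance_threshold_pct=30))

# Hypothetical disks that are already reasonably even.
print(needs_rebalance([50.0, 45.0, 40.0], variance_threshold_pct=30))
```

The first call reports that a rebalance is due because the new, empty disk is far below the others; the second does not.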

Because vSAN rebalancing is a post process (or “reactive”) action, performed either automatically or manually at an object/component level, it’s not very granular and requires bulk movement of data. As VMware states, it can impact I/O performance and may need to be turned off.

vSAN’s implementation of capacity management is really a compromise between capacity and performance, much like Deduplication and Compression both needing to be either ON or OFF, and Erasure Coding being either ON (inline, applying to all data) or OFF.

Nutanix, on the other hand, uses proactive, intelligent replica placement in the write path (a.k.a. “inline”) to place new data in real time based on individual node/drive capacity and performance utilisation.

As a result, new nodes/drives are dynamically utilised to ensure an even balance throughout the cluster which improves resiliency and performance and avoids fragmented/unusable capacity we discussed previously.

This also minimises the requirement for and potential workload of post process Disk Balancing which Nutanix applies if a cluster becomes significantly out of balance. The key difference is Nutanix balances at an Extent Group (4MB) level, not an Object (up to 255GB) level, meaning the amount of data needing to be moved is lower and fragmentation inefficiency is all but zero.
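To make the granularity point concrete, here is a deliberately simplified sketch. It assumes two disks and that data can only move in whole units of a fixed size (255GB as the worst case for a vSAN object, 4MB for a Nutanix Extent Group); the function name and the 600GB/400GB figures are hypothetical, and real balancers consider far more than this.

```python
def best_move(used_a_gb, used_b_gb, unit_gb):
    """Balance two disks by moving whole units from the fuller to the emptier one.

    Returns (moved_gb, residual_gap_gb): how much data is moved and how far
    apart the two disks still are afterwards.
    """
    gap = abs(used_a_gb - used_b_gb)
    # Moving x GB closes the gap by 2x, so the ideal move is half the gap.
    units = round((gap / 2) / unit_gb)
    moved = units * unit_gb
    residual = abs(gap - 2 * moved)
    return moved, residual


# Hypothetical disks holding 600GB and 400GB (ideal move: 100GB).
print(best_move(600, 400, unit_gb=255))       # one 255GB unit would overshoot
print(best_move(600, 400, unit_gb=4 / 1024))  # 4MB units expressed in GB
```

In this toy model the 255GB unit cannot improve the balance at all (moving one would overshoot), whereas 4MB units can close the 200GB gap completely.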

vSAN Problem #3 – When adding nodes, capacity is not used until such time as new vDisks or VMs are created OR with manual intervention from the administrator.

The exception to this is when individual drives are >=80% utilised, at which point vSAN automatically rebalances the cluster. However, by then the cluster is no longer maintaining the VMware recommended 25-30% “slack space”, which can lead to issues.

After you expand the (vSAN) cluster capacity, perform a manual rebalance to distribute resources evenly across the cluster. For more information, see “Manual Rebalance” in vSAN Monitoring and Troubleshooting.

Reference: https://docs.vmware.com/en/VMware-vSphere/6.7/com.vmware.vsphere.virtualsan.doc/GUID-41F8B336-D937-498E-AE87-94953A66DF00.html

When adding individual drive/s or node/s with Nutanix, the performance of existing VMs immediately improves due to ADSF having more drives to send data to AND/OR more Controller VMs (CVMs) to process the workload from the distributed storage fabric.

vSAN requiring customers to choose between capacity management and performance is unreasonable and unrealistic.

So we’ve learned while you can scale vSAN capacity by adding individual drives, it comes with plenty of headaches, unlike Nutanix where things just work thanks to the Acropolis Distributed Storage Fabric (ADSF).

Let’s summarise what we’ve learned so far:

* vSAN will automatically balance at >=80% utilization of any storage device but it is done at a large object level (up to 255GB).

What about scaling only storage capacity without CPU/RAM resources?

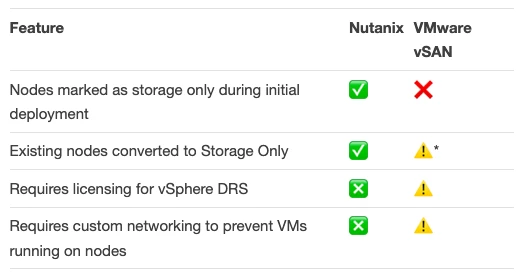

Let’s refer back to the tick-box comparison:

Here we see both platforms can provide “storage only nodes” but this is where things get very interesting.

VMware doesn’t typically talk about the concept of storage only nodes because (long story short) vSAN can only achieve a fraction of the value Nutanix delivers from their Storage Only nodes. It requires the introduction of significant complexity/risk into a vSAN environment with DRS rules and considerations around what networking to present to hosts.

This disaster of a process is described in a post titled “How to make a vSAN storage only node? (and not buy a mainframe!)”, which tries to downplay the value of storage only nodes. It also highlights a general lack of understanding of the value of HCI combined with Storage Only nodes which, I suspect, is due to the author’s preferred product not having the capabilities.

The post defines storage only nodes as the following:

A storage only node is a node that does not provide compute to the cluster. It can not run virtual machines without configuration changes.

A storage only node while not providing compute adds storage performance and capacity to the cluster.

So based on this vSAN and Nutanix are equivalent right? Think again!

Let’s address the first definition – “A storage only node is a node that does not provide compute to the cluster. It can not run virtual machines without configuration changes. “

For vSAN, as the VMware employee’s blog describes, this can be achieved in two ways depending on the vSphere licensing (another point I’ll raise) the customer is entitled to.

- Deploy a separate vDS for the storage hosts, and do not set up virtual machine port groups. A virtual machine will not power up on a host that it can not find its port group on.

- You can leverage DRS “Anti-affinity” rules to keep virtual machines from running on a host. Make sure to use the “MUST” rules, and define that virtual machines will never run on a host.

Let’s now learn how Storage Only nodes (released in 2015) are deployed with Nutanix.

- During initial setup (called Foundation), a node can be marked as Storage Only node and you’re done.

- If you wish to convert an existing Compute+Storage node into a Storage only node, this can be done by marking the node as “Never schedulable”.

- A HCI node added to a cluster acts essentially as a storage only node until VMs are manually or dynamically moved onto the host.

With Nutanix you don’t need vSphere licensing even for ESXi environments as Nutanix storage only nodes run Acropolis Hypervisor (AHV).

Even with Storage Only nodes running AHV, they integrate seamlessly into the Nutanix cluster and provide all their value almost immediately to the storage presented to the vSphere or Hyper-V cluster.

In short, vSAN does not really have a concept of Storage Only nodes and the method described by the VMware employee’s blog is at best a hack, while Nutanix provides a comprehensive storage only capability.

Here is a summary of setting up Storage Only nodes with the two products:

* Only by using manual hacks (DRS rules & custom networking)

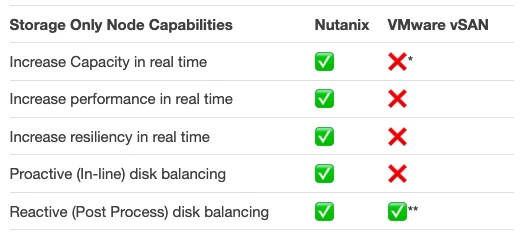

Now let’s assume you disagree with me and you firmly believe vSAN can and does support the concept of Storage Only nodes. Let’s talk about how the storage only nodes are used by vSAN and Nutanix ADSF. After this section, I’m confident you’ll see the major architectural differences and resulting value Nutanix delivers.

Example: A four-node cluster, which has plenty of available CPU/RAM but storage has grown at a faster than expected rate and utilization is near 75%. This also means the environment would be crossing the boundary where it no longer has N+1 capacity available.

Requirement: The customer needs to double their storage capacity.
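The numbers in this example can be checked with some quick arithmetic. The sketch below assumes identical nodes and ignores filesystem overheads and recommended slack space; the function names are my own and purely illustrative.

```python
def max_safe_utilisation(nodes):
    """Highest cluster utilisation (as a fraction) at which the data held by
    one failed node still fits on the surviving nodes (N+1 capacity)."""
    return (nodes - 1) / nodes


def utilisation_after_expansion(nodes, used_fraction, added_nodes):
    """Cluster-wide utilisation after adding identical, empty nodes
    while the amount of stored data stays the same."""
    return used_fraction * nodes / (nodes + added_nodes)


print(max_safe_utilisation(4))                  # N+1 boundary for four nodes
print(utilisation_after_expansion(4, 0.75, 4))  # after doubling to eight nodes
print(max_safe_utilisation(8))                  # N+1 boundary for eight nodes
```

Under these assumptions a four-node cluster sits exactly on the N+1 boundary at 75%, and doubling the node count halves the cluster-wide utilisation to 37.5%, well inside the eight-node boundary of 87.5%.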

Let’s start with vSAN assuming a default configuration.

We add 4 new nodes to the cluster and perform the required configuration of DRS or networking as described by our VMware friend.

By default, vSAN won't start an automatic rebalance as the capacity devices in the cluster are below 80 percent full. The process would need to be manually initiated by an administrator.

The performance of the VMs has not changed as the VMs’ objects are not yet distributed across the new nodes.

The new vSAN nodes are basically not providing value to the cluster.
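The state of the cluster at this point can be sketched as follows. For simplicity this treats each node as a single capacity device and uses the 80% reactive threshold mentioned earlier; the function name and the per-node figures are assumptions based on the example, not output from a real cluster.

```python
REACTIVE_THRESHOLD_PCT = 80  # reactive rebalance point cited in this article


def cluster_state(node_used_pct):
    """Return (average utilisation %, whether any node reaches the threshold)."""
    average = sum(node_used_pct) / len(node_used_pct)
    triggers = any(used >= REACTIVE_THRESHOLD_PCT for used in node_used_pct)
    return average, triggers


# Four original nodes at 75% plus four newly added, empty nodes.
after_expansion = [75, 75, 75, 75, 0, 0, 0, 0]
print(cluster_state(after_expansion))
```

The cluster-wide average drops to 37.5%, yet no node reaches 80%, so in this model nothing prompts the existing data to move onto the new nodes.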

Now let’s compare Nutanix ADSF assuming a default configuration.

We add 4 nodes to the cluster which have been marked as Storage Only nodes via the Nutanix Foundation (bare metal imaging) tool.

Note: If the new nodes were HCI nodes the same process described below would occur.

The cluster-wide average latency immediately lowers, with both the read and write latency for VMs reducing before our eyes.

VM IOPS also increase significantly and we see a reduction in CPU utilization across the virtual machines.

Wait, what? Nutanix delivered increased storage performance and more efficient VM resource utilization because of storage only nodes?

That’s right, thanks to ADSF being a truly distributed storage fabric, the moment the storage only nodes are added, write replicas are proactively balanced throughout the now eight (8) node cluster.

This means all VMs are now benefiting from eight storage controllers (CVMs) and the additional SSD or SSD & HDD for hybrid environments.

Due to the bulk of the write replicas being dynamically placed onto storage only nodes, the Controller VMs (CVM) on the HCI nodes are performing less work, freeing up CPU resources for more VMs and/or better VM performance.
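The behaviour described above can be illustrated with a toy model. To be clear, this is not Nutanix's placement algorithm: it assumes one replica stays on the node where the VM runs and the second replica goes to the least-utilised other node, it considers capacity only (not performance), and all node names and numbers are hypothetical.

```python
def place_remote_replica(local_node, used_pct):
    """Pick the least-utilised node, other than the local one, for the second replica."""
    candidates = {node: used for node, used in used_pct.items() if node != local_node}
    return min(candidates, key=candidates.get)


# Hypothetical cluster: four HCI nodes at 75% and four empty storage only nodes.
used = {f"hci-{i}": 75.0 for i in range(1, 5)}
used.update({f"so-{i}": 0.0 for i in range(1, 5)})

placements = []
for write in range(8):
    local = f"hci-{write % 4 + 1}"   # VM writes originate on the HCI nodes
    target = place_remote_replica(local, used)
    used[target] += 1.0              # pretend each write adds 1% utilisation
    placements.append(target)

print(placements)
```

In this model every remote replica lands on a storage only node, spread evenly across all four, which is the effect that takes work away from the CVMs on the HCI nodes.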

This isn’t a theory or some marketing nonsense, it’s something that’s easy to test, and I demonstrated it a long time ago in my article “Scale out performance testing with Nutanix Storage Only Nodes”.

Interesting to note, the performance increase (total IOPS) was almost exactly linear while write and read latency were reduced significantly.

This dramatic improvement in performance can only be achieved because Nutanix ADSF does not rely on large objects like vSAN and has a proactive write path that balances writes based on cluster performance and capacity.

What about Nutanix Data Locality?

Don’t storage only nodes break this concept and advantage?

Quite the opposite: adding storage only nodes reduces the number of write replicas stored on the HCI nodes, which frees up more capacity to store data locally and maximizes the benefit of data locality.

The reason vSAN suffers much of its complexity and, in this case, an inability to deliver real world value from storage only nodes is that its underlying architecture is very limited by the concepts of disk groups and large 255GB objects.

What happens if we used Storage Only nodes and the cluster has a node failure?

With Nutanix, all nodes within the cluster actively participate in the rebuild process with ADSF dynamically balancing the rebuild across all nodes (HCI & Storage Only) based on capacity utilization and performance which results in faster restorations and a balanced cluster after the rebuild is complete.
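As a rough way to reason about why more participating nodes means faster restorations, consider the idealised model below. It assumes the rebuild work divides evenly across all surviving nodes and that each node contributes the same throughput; the function name and the figures (10TB to rebuild, 1TB per hour per node) are invented for illustration and are not measurements.

```python
def rebuild_hours(data_tb, participating_nodes, per_node_tb_per_hour):
    """Idealised rebuild time when the work is spread evenly over all participants."""
    return data_tb / (participating_nodes * per_node_tb_per_hour)


# One node fails: 3 survivors in a four-node cluster, 7 in an eight-node cluster.
four_node = rebuild_hours(10, participating_nodes=3, per_node_tb_per_hour=1.0)
eight_node = rebuild_hours(10, participating_nodes=7, per_node_tb_per_hour=1.0)
print(f"4-node cluster: {four_node:.1f} h, 8-node cluster: {eight_node:.1f} h")
```

Real rebuild times depend on network, drive and workload factors this model ignores, but it shows why adding storage only nodes that take part in the rebuild shortens the window of reduced resiliency.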

On the other hand, vSAN rebuilds are performed at a much less granular scale, where an object (up to 255GB) is replicated from one node to another, which leads to fragmentation and hot spots within clusters. Writes are not dynamically balanced in the write path to the most optimal location in the cluster due to vSAN’s underlying Object store architecture.

Let’s summarise the Storage Only node capabilities between the platforms:

* The capacity of the vSAN datastore is technically increased, however, the capacity is not used/usable until the rebalance operation is completed OR new VMs / vDisks are created.

** By default: Only applied when storage devices exceed 80% utilization.

Summary

While both platforms share similar capabilities when compared at the “tick-box” marketing OR “101” level, we have learned it’s essential to look under the covers, as the real-world capabilities vary significantly from the marketing slides.

We’ve learned the Nutanix Acropolis Distributed Storage Fabric (ADSF) allows customers to scale storage capacity by adding individual drives, HCI node/s or storage only nodes without manual intervention.

In addition, Nutanix customers enjoy improved performance, resiliency and all data efficiency technologies (Dedupe, Compression and Erasure Coding) without compromise.

Nutanix also provides a unique storage only node capability which provides increased storage capacity, performance and resiliency without any complex administration/configuration.

vSAN simply does not have the underlying architecture to scale capacity in an efficient and performant manner.

This article was originally published at http://www.joshodgers.com/2020/02/10/scaling-storage-capacity-nutanix-vs-vmware-vsan/

2020 Nutanix, Inc. All rights reserved. Nutanix, the Nutanix logo and all Nutanix product, feature and service names mentioned herein are registered trademarks or trademarks of Nutanix, Inc. in the United States and other countries. All other brand names mentioned herein are for identification purposes only and may be the trademarks of their respective holder(s). This post may contain links to external websites that are not part of Nutanix.com. Nutanix does not control these sites and disclaims all responsibility for the content or accuracy of any external site. Our decision to link to an external site should not be considered an endorsement of any content on such a site.
