This post was authored by Yashesh Mankad Sr. Member of Technical Staff Nutanix

PostgreSQL is a powerful, open-source relational database system that is robust and reliable with a fully extensible architecture. It runs on all major operating systems and offers a number of add-ons that can transform a PostgreSQL instance into diverse applications like time-series databases, messaging queues, and so on.

Due to Postgres’ strengths, it is a popular data persistence choice for complex applications, especially when considering open-source databases. Postgres can be deployed as a standalone instance or in the form of a cluster. Most serious deployments quickly hit limits of scale and availability with single-instance deployments. Postgres offers building blocks for high availability like replication, read replicas, single vs. multi-master master deployment, and so on. However, it does not offer a built-in solution for clustering and high availability.

Nutanix database as a service (known as Nutanix Era) solves this problem by offering a one-click capability to deploy a highly available Postgres database. Era uses a number of technologies driven by Postgres’ best practices to provision this cluster. This guide provides details on these technologies, what role they play, along with some key configuration aspects to create an operational cluster.

Elements of a Postgres Cluster

A Postgres cluster should offer elasticity—this is the ability to add/remove nodes from the cluster with 100% availability of Postgres. For high availability, the cluster should be able to track state changes of its member nodes to ensure there is always a master to accept database writes consistently. Additionally, it should provide data durability through replication to account for disk or node failures. The Postgres software is not sufficient to meet all these requirements. We need to bring in other elements to the ecosystem. We describe below some of these elements and the role each element plays in the cluster.

Cluster Manager

A cluster manager sits at the heart of the entire architecture. It is responsible for configuring a Postgres node based on its role in the cluster (master vs. replica). It keeps track of nodes coming in and going out of the cluster. When a new node gets added, it needs to be configured as a replica first. As a replica, the node is responsible for syncing its state to the master. Cluster members are also responsible for maintaining archive logs for recovery later. The cluster manager maintains the state of every node, including which node is master and which nodes are replicas.

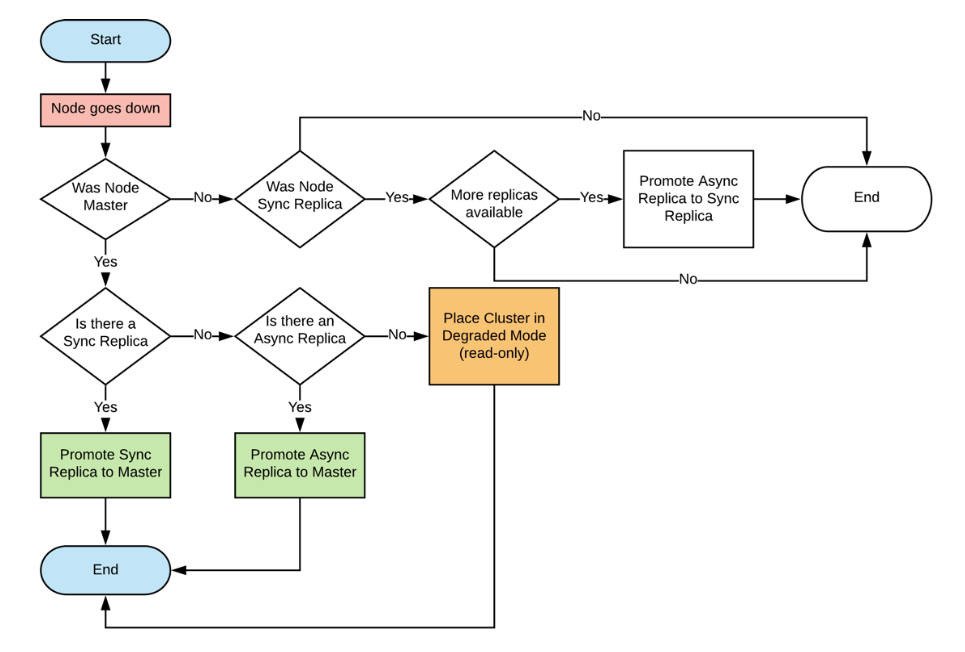

Among replicas, we can have synchronous replicas that reflect the exact state of the master, whereas some replicas can be asynchronous that could lag the master. Cluster manager keeps track of which replicas are synchronous versus asynchronous and the amount by which each replica lags the master. All of this state should be maintained and updated in real-time to ensure the highest availability of the database cluster. Cluster managers are complex pieces of software. This flowchart gives an idea about how many state transitions a cluster manager needs to perform when a node goes down in the cluster.

The Postgres community recommends a number of cluster managers, like Barman and Stolon. Nutanix Era uses the most popular cluster manager, Patroni, as the cluster manager in all Postgres clusters provisioned for its customers. Patroni is highly recommended due to capabilities like automatic failure detection and recovery, distributed consensus for every action in the cluster, and an API-first approach for configuration, switchovers, and tooling. An instance of Patroni runs on every Postgres node.

Distributed Configuration Store

Patroni needs a strongly consistent, distributed key-value store to save all the states it is tracking for members in the cluster. This key-value store is also used to track state changes by setting up an active HTTP session with health checks for all nodes in the cluster. Since the store provides strict consistency while updating keys, it is a good solution for leader election in a quorum of Postgres and Patroni nodes. Patroni supports a number of key-value stores like etcd, Consul, and Zookeeper.

However, etcd is highly performant and is used in lot of distributed systems like Kubernetes, CockrochDB, and so on. Nutanix Era uses etcd as well for purposes of distributed consensus and persistence. An instance of etcd runs on every Postgres node. The etcd ring can be maintained outside of Postgres and Patroni, but Era chose this approach to minimize the physical resources needed for the cluster.

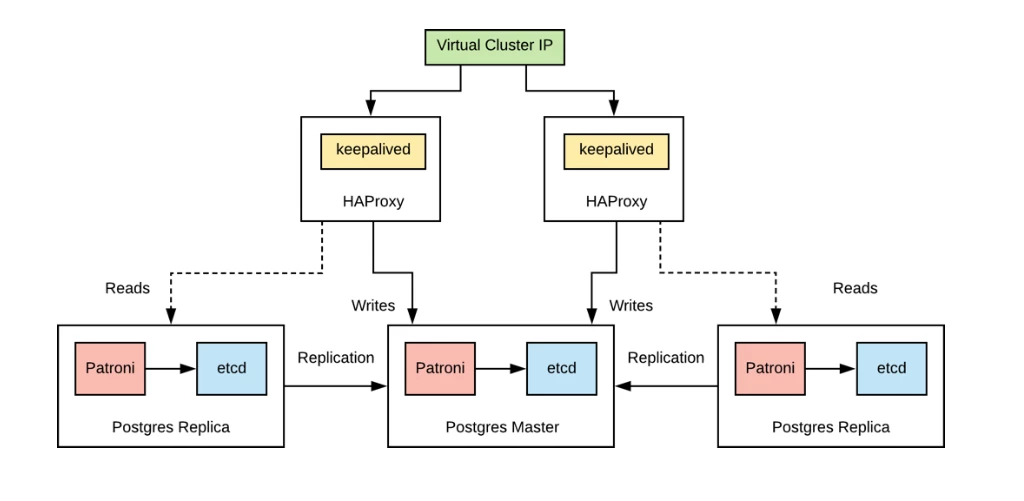

Proxy Servers

Applications should be unaffected when there are master or leadership changes in the cluster. To achieve this, the cluster is front-ended with a proxy, and the applications connect to this proxy instead of the master node directly. Era uses HAProxy to offer this capability. The proxy should not be a single point of failure. As a result, Era deploys two instances of the proxy for redundancy. The application has to be unaffected by changes in these proxies, too. As a result, the proxies are exposed to the application through a reserved virtual IP that never changes. Era uses a daemon called keepalived that is responsible for mapping requests on the virtual IP to the appropriate proxy. Both HAProxy and keepalived are reliable, open-source technologies that have been hardened over the years.

Deployment Topology

If we put all the pieces together, we end up with a topology that looks like this:

The Postgres cluster consists of 3 or more nodes with one node maintaining the master role and the rest of the nodes acting as replicas (hot standbys). We can have up to two HAProxy nodes for redundancy with one virtual IP that is used by applications to use the cluster.

Database writes are directed to the master, whereas we have various options for reads. Reads can be directed to the master, all the replicas, or a subset of them.

Replication

Replicas pull data from the Master in two forms: synchronously and asynchronously. Era supports both forms of replication when it provisions a cluster. In case the user chooses synchronous replication, we configure only one node of the cluster as a sync replica to limit database performance degradation for table updates. The rest of the replicas are asynchronous. Postgres replication is a vast topic, and one can learn more about it from Postgres documents.

Key Configuration

The majority of configurations for the cluster happen in Patroni. Here are some key configuration details that are essential for a healthy cluster:

- Replication User: A dedicated Postgres user specified in the Patroni configuration that is used to connect to the Master, stream WAL logs, and apply them.

- Storage for Archive Logs: Maintaining archive logs is recommended in a cluster. If the replicas lose connection to the master, they can sync up from the archives. Era archives WAL logs of the database to enable this capability. The deployment needs sufficient storage if archiving is desired,

- Archival Command: Command used to archive WAL logs when acting as master.

- Restore Command: Command used to archive WAL logs when acting as a Replica.

- Synchronous Mode Settings: synchronous_mode and synchronous_mode_strict should be “on” in case the user wants synchronous replication.

Nutanix Era - Database as a Service

Nutanix Era provides DBaaS functionality with one-click database management (provision, clone, patch, refresh, and backup) in just a few minutes. Nutanix Era automates and simplifies database management, bringing one-click simplicity and invisible operations to database provisioning and lifecycle management (LCM). Provisioning and copy data management (CDM) are cornerstone capabilities that enable database admins to provision, clone, refresh, and restore their databases to any point in time. Through a rich but simple-to-use UI and CLI, they can restore to the latest application-consistent transaction.

Era utilizes the above technologies to not only quickly provision Postgres clusters for its customers but also to best practice and standards. The clusters have a single master and multiple read replicas with an option to deploy a synchronous replica. Era supports deploying up to two HAProxies depending on the desired redundancy. Archive logs are managed by Era as well. They are stored on and outside the Postgres cluster to provide recovery options in case the entire cluster has to be recreated. Era also offers discrete-time storage level snapshotting for database backups and recovery.

References

https://www.postgresql.org/docs/9.1/high-availability.html

https://patroni.readthedocs.io/en/latest/dynamic_configuration.html

© 2019 Nutanix, Inc. All rights reserved. Nutanix, the Nutanix logo and all Nutanix product and service names mentioned herein are registered trademarks or trademarks of Nutanix, Inc. in the United States and other countries. All other brand names mentioned herein are for identification purposes only and may be the trademarks of their respective holder(s). This article may contain links to external websites that are not part of Nutanix.com. Nutanix does not control these sites and disclaims all responsibility for the content or accuracy of any external site. Our decision to link to an external site should not be considered an endorsement of any content on such a site. Certain information contained in this article may relate to or be based on studies, publications, surveys and other data obtained from third-party sources and our own internal estimates and research. While we believe these third-party studies, publications, surveys and other data are reliable as of the date of this article is published, they have not independently verified, and we make no representation as to the adequacy, fairness, accuracy, or completeness of any information obtained from third-party sources.