This post was authored by Amin Aflatoonian, Enterprise Architect, Nutanix

Juniper Contrail is a software-defined networking (SDN) controller that is widely deployed in the Telco cloud industry for a variety of use cases. Recently, Nutanix and Juniper announced that Contrail is validated for deployment on AHV, the native Nutanix hypervisor.

In this post, I’ll present these two technologies architecturally and describe how they can be integrated.

Architecture

This section briefly presents the architecture of Contrail and AHV to show how the two products can be combined to provide value-added services. To learn more about these products, please visit the official Nutanix and Juniper websites.

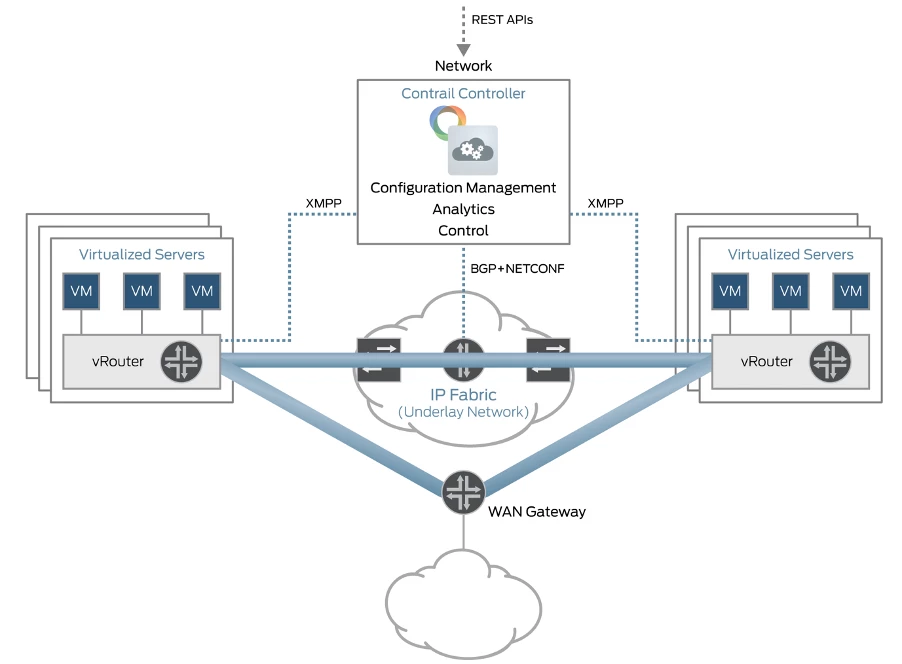

Juniper Contrail

Contrail is an SDN controller that creates and manages overlay networks on top of the physical and virtual infrastructure.

Contrail consists of two main components:

- Contrail vRouter: a distributed router that runs on the hypervisor and provides networking capabilities to the VMs.

- Contrail Controller: a logically centralized control plane that provides control, management, orchestration and analytics to the vRouters.

At the highest level, the Contrail controller receives high-level network configuration (virtual networks, vNICs, routing, policies, and so on) through its northbound interface (NBI) and programs the routing tables of the vRouters accordingly. The controller sends these instructions to the vRouters using the XMPP protocol.
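To make this control-plane flow concrete, here is a minimal in-memory sketch in Python. It is not Contrail code: the `Controller` and `VRouter` classes, their methods, and the names `vn-blue` and `vrouter-a` are hypothetical, the XMPP exchange is replaced by a direct method call, and the controller pushes each route to every vRouter, which is a simplification.

```python
from dataclasses import dataclass, field


@dataclass
class VRouter:
    """Toy stand-in for a vRouter: one routing table per virtual network."""
    name: str
    # virtual network name -> {VM prefix -> name of the vRouter hosting the VM}
    tables: dict = field(default_factory=dict)

    def receive_route(self, vn: str, prefix: str, next_hop: str) -> None:
        # In Contrail this update would arrive over XMPP; here it is a plain call.
        self.tables.setdefault(vn, {})[prefix] = next_hop


@dataclass
class Controller:
    """Toy stand-in for the logically centralized controller."""
    vrouters: list = field(default_factory=list)

    def attach_vnic(self, vn: str, vm_ip: str, host: VRouter) -> None:
        # High-level intent ("this vNIC belongs to this virtual network on
        # this host") becomes a host route pushed to every known vRouter.
        for vrouter in self.vrouters:
            vrouter.receive_route(vn, f"{vm_ip}/32", host.name)


vr_a, vr_b = VRouter("vrouter-a"), VRouter("vrouter-b")
controller = Controller([vr_a, vr_b])
controller.attach_vnic("vn-blue", "10.0.0.5", vr_a)
print(vr_b.tables)  # {'vn-blue': {'10.0.0.5/32': 'vrouter-a'}}
```

The point of the sketch is the direction of the flow: configuration enters once at the controller, and the per-host forwarding state is derived from it rather than configured host by host.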

At the data plane level, when the vRouter receives traffic from an attached VM interface (vNIC), it encapsulates that traffic using one of several supported technologies (for example, VXLAN, MPLSoUDP, or MPLSoGRE) and sends it to the underlay network.
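As an illustration of what encapsulation means for one of these options, the following Python sketch builds and parses the 8-byte VXLAN header defined in RFC 7348. It shows only the header layout, not how the vRouter implements it; the function names are hypothetical, and the outer Ethernet, IP, and UDP headers that a real data path adds are left out.

```python
import struct
from typing import Tuple

VXLAN_UDP_PORT = 4789        # IANA-assigned destination port (RFC 7348)
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid


def vxlan_encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Prefix an inner Ethernet frame with a VXLAN header."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 0: flags (8 bits) + reserved (24 bits)
    # Word 1: VNI (24 bits) + reserved (8 bits)
    header = struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def vxlan_decapsulate(packet: bytes) -> Tuple[int, bytes]:
    """Return (vni, inner_frame) from a VXLAN payload."""
    if len(packet) < 8:
        raise ValueError("packet shorter than a VXLAN header")
    word0, word1 = struct.unpack("!II", packet[:8])
    if not (word0 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag is not set")
    return word1 >> 8, packet[8:]


payload = vxlan_encapsulate(5000, b"inner-ethernet-frame")
print(payload[:8].hex())           # 0800000000138800
print(vxlan_decapsulate(payload))  # (5000, b'inner-ethernet-frame')
```

The 24-bit VNI is what keeps overlay networks apart on a shared underlay: two VMs can use the same inner addresses as long as their traffic carries different VNIs.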

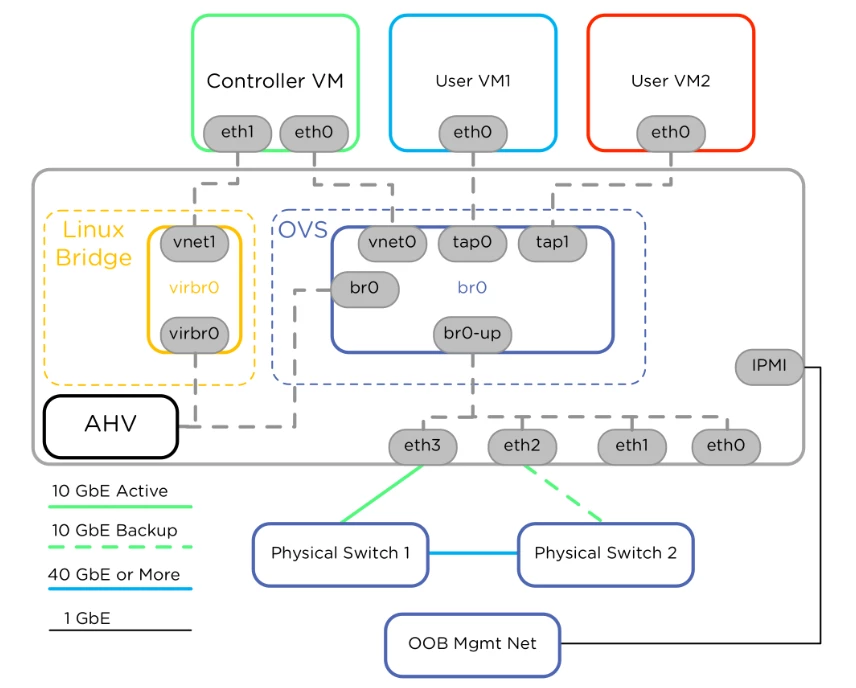

Nutanix AHV network design

Nutanix AHV is distributed alongside Nutanix Acropolis OS (AOS), which is present on each Nutanix node. An AHV node is composed of three main components: a controller VM (CVM) running AOS, AHV as the bare-metal hypervisor, and Open vSwitch (OVS).

- Each CVM runs the Nutanix software and serves all of the I/O operations for all VMs running on the host. The CVM contains the core functionality of the Nutanix platform and handles services such as storage I/O, UI, API, and upgrades.

- AHV leverages OVS for all VM networking. In a default AHV deployment, an OVS br0 bridge connects each VM tap interface.

The CVM is connected to both the Linux bridge virbr0 and the OVS bridge br0. Nutanix workloads (VMs) are connected to br0 and reach the external network via the br0-up interface.

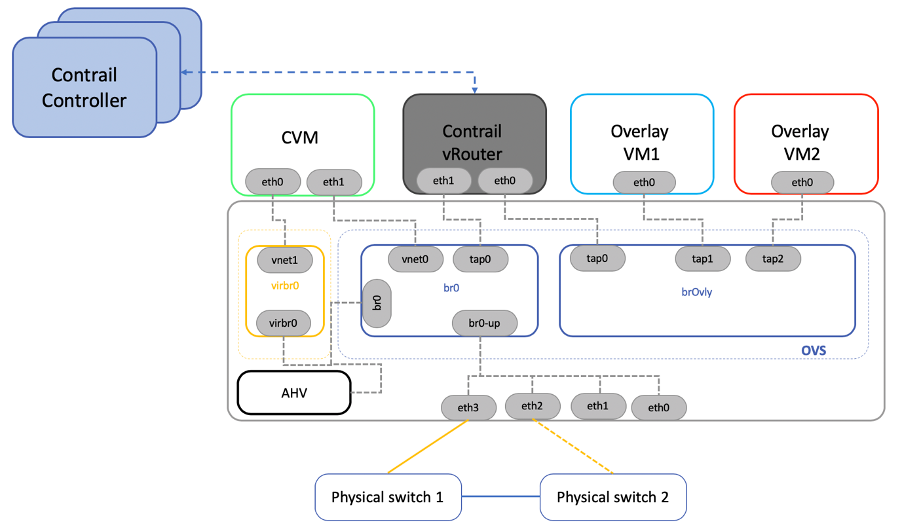

Contrail AHV Integration

The figure below shows the integration of Contrail in AHV. This integration involves adding three main components to a default AHV deployment:

OVS overlay bridge

A separate OVS bridge, called brOvly, is created on each node to isolate the underlay traffic from the overlay traffic. All VMs that must be connected to the overlay network are connected to the brOvly bridge.
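For readers unfamiliar with OVS, the sketch below shows in Python how such a bridge is expressed with the standard `ovs-vsctl` tool. It only builds and prints the command lines and does not run them. Whether `ovs-vsctl` is invoked directly on an AHV host or through Nutanix tooling is not covered in this post, so treat this as an assumption made for illustration; the helper name `overlay_bridge_commands` is hypothetical.

```python
import shlex

OVERLAY_BRIDGE = "brOvly"  # bridge name used in this post


def overlay_bridge_commands(bridge: str = OVERLAY_BRIDGE) -> list:
    """Return ovs-vsctl command lines (argv lists) for an isolated bridge."""
    return [
        # --may-exist makes add-br a no-op if the bridge is already present.
        ["ovs-vsctl", "--may-exist", "add-br", bridge],
        # No uplink port is added, so the bridge stays isolated from the
        # external network; list-ports lets an operator confirm that.
        ["ovs-vsctl", "list-ports", bridge],
    ]


for argv in overlay_bridge_commands():
    print(" ".join(shlex.quote(arg) for arg in argv))
```

The commands are kept as data rather than executed so that they can be reviewed or handed to whatever change process the cluster uses.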

Contrail control cluster

Contrail controllers are created to manage the overlay network of the Nutanix cluster. These controllers can be physical machines or virtual ones, depending on the required performance and the capacity of each cluster.

Contrail vRouter VMs

A vRouter VM is created on each Nutanix host to provide overlay connectivity. It’s worth noting that the vRouter VM has at least two interfaces: one connected to the brOvly OVS bridge and another connected to the br0 OVS bridge.

Each Nutanix node has a vRouter VM deployed using a host affinity rule. There are one or three Contrail controllers (depending on the HA policy) for the whole cluster. These controllers communicate with the vRouters over the underlay network (that is, OVS br0).
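The placement rules above can be written down as a small planning sketch in Python. Everything here is hypothetical modelling rather than a Nutanix or Contrail API: the `VRouterVM` class, the `vrouter-<host>` naming scheme, and the host names are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VRouterVM:
    name: str
    pinned_host: str                    # host affinity: the VM stays on this node
    bridges: tuple = ("brOvly", "br0")  # overlay side and underlay side


def plan_vrouters(hosts: list) -> list:
    """One vRouter VM per Nutanix node, pinned to that node."""
    return [VRouterVM(f"vrouter-{host}", host) for host in hosts]


def validate_controller_count(count: int) -> None:
    """The post describes either a single controller or three for HA."""
    if count not in (1, 3):
        raise ValueError(f"expected 1 or 3 controllers, got {count}")


hosts = ["ahv-node-1", "ahv-node-2", "ahv-node-3"]
validate_controller_count(3)
for vm in plan_vrouters(hosts):
    print(vm.name, "->", vm.pinned_host, vm.bridges)
```

Pinning matters because the vRouter VM is the only exit from brOvly on its host: if it moved to another node, the local overlay VMs would lose connectivity.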

The brOvly bridge is an isolated bridge without any connection to the external network. All inbound and outbound VM traffic goes through the vRouter VM via the brOvly.tap0 interface.

The VM sends outbound traffic to the tap interface, which directs it to the vRouter via its eth0 interface. The vRouter encapsulates the traffic using the configured technology (for example, VXLAN, MPLSoUDP, or MPLSoGRE) and sends it to the underlay network via br0. For inbound traffic, the vRouter decapsulates the packets and then sends them to the destination tap interface via the brOvly bridge. See the Contrail reference documentation for detailed information on the encapsulation, decapsulation, and lookup mechanisms.
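The consequence of isolating brOvly can be checked with a small graph model in Python. This is a sketch of the topology as described in this post, not a tool that inspects a real host; the node names `vm1`, `vrouter-vm`, and `underlay` are hypothetical labels, and the CVM and the br0-up uplink are left out for brevity.

```python
from collections import deque

# Links on one host, as described above: the VM reaches the underlay only
# through brOvly, the vRouter VM, and br0.
LINKS = [
    ("vm1", "brOvly"),
    ("brOvly", "vrouter-vm"),
    ("vrouter-vm", "br0"),
    ("br0", "underlay"),
]


def find_path(src, dst, links, removed=frozenset()):
    """Breadth-first search for a path, skipping any removed nodes."""
    adjacency = {}
    for a, b in links:
        if a in removed or b in removed:
            continue
        adjacency.setdefault(a, []).append(b)
        adjacency.setdefault(b, []).append(a)
    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        if path[-1] == dst:
            return path
        for neighbor in adjacency.get(path[-1], []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None


print(find_path("vm1", "underlay", LINKS))
# ['vm1', 'brOvly', 'vrouter-vm', 'br0', 'underlay']
print(find_path("vm1", "underlay", LINKS, removed={"vrouter-vm"}))
# None
```

With the vRouter VM removed there is no path at all, which is the property the isolated bridge is meant to guarantee: overlay traffic cannot bypass encapsulation.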

Juniper’s Contrail is a validated SDN controller that can be used to create overlay networks for bringing value-added services into a Nutanix cluster. In the next post, we will describe how to deploy Contrail on an AHV cluster.

Be sure to continue the conversation on our community forums and share your expertise with others.

© 2020 Nutanix, Inc. All rights reserved. Nutanix, the Nutanix logo and the other Nutanix products and features mentioned on this post are registered trademarks or trademarks of Nutanix, Inc. in the United States and other countries. All other brand names mentioned on this post are for identification purposes only and may be the trademarks of their respective holder(s). This post may contain links to external websites that are not part of Nutanix.com. Nutanix does not control these sites and disclaims all responsibility for the content or accuracy of any external site. Our decision to link to an external site should not be considered an endorsement of any content on such a site.

This post may contain express and implied forward-looking statements, which are not historical facts and are instead based on our current expectations, estimates and beliefs. The accuracy of such statements involves risks and uncertainties and depends upon future events, including those that may be beyond our control, and actual results may differ materially and adversely from those anticipated or implied by such statements. Any forward-looking statements included in this post speak only as of the date hereof and, except as required by law, we assume no obligation to update or otherwise revise any of such forward-looking statements to reflect subsequent events or circumstances.