This post was authored by Amin Aflatoonian, Enterprise Architect, Nutanix

In our previous post, we presented an architectural overview of Contrail integration with an AHV cluster. In this post, we describe the deployment of the Contrail controller in detail.

A detailed description of virtual network and VM creation will be presented in the next post.

Deployment

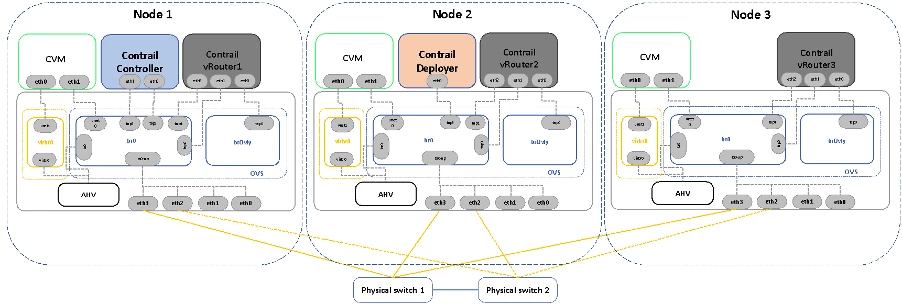

To explain the deployment of Contrail on AHV, we used a lab environment containing a three-node Nutanix cluster. We present the lab architecture in the following figure.

Because this lab does not need high availability (HA) for the Contrail control plane, we deploy only one Contrail controller VM and three vRouter VMs (one vRouter per node).

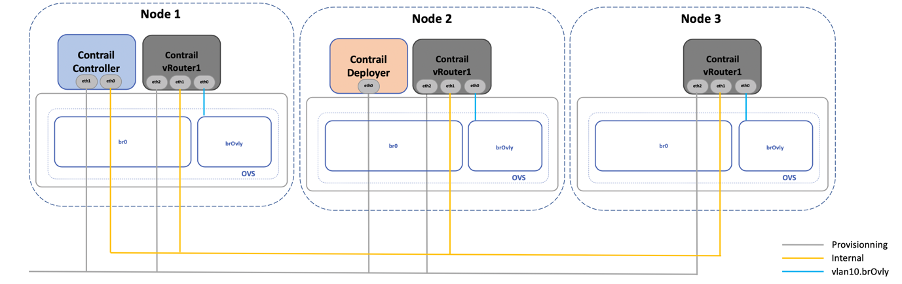

We used Ansible to deploy Contrail. In this lab, we also created an Ansible deployer VM (called Contrail deployer) that manages the deployment lifecycle. For this purpose, we created a provisioning network in the Nutanix cluster and attached all Contrail VMs (controller and vRouters) to this network. The figure below shows the lab’s network configuration.

Lab preparation

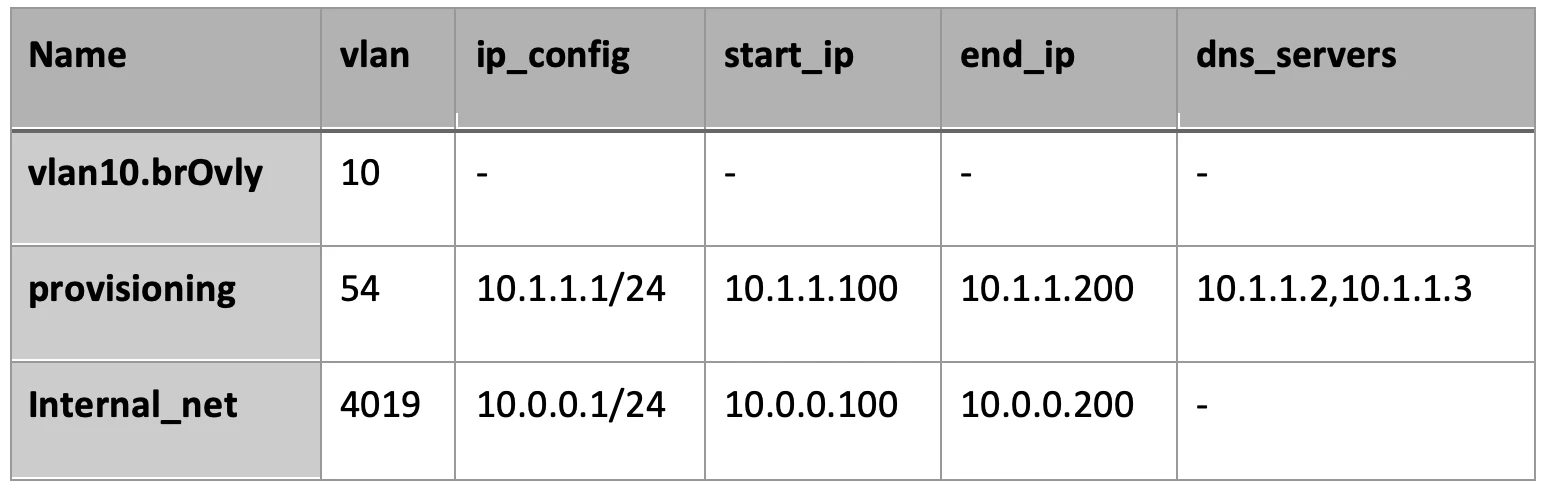

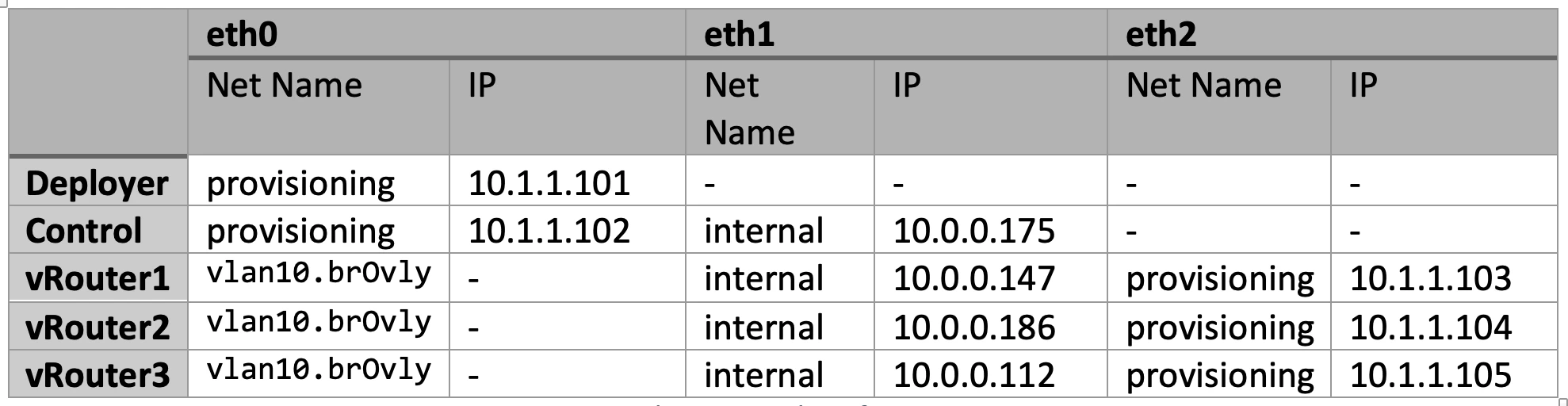

The following table shows the network configuration of this lab used to deploy Contrail VMs.

Overlay bridge and vRouter overlay network

Before deploying Contrail VMs, create an overlay bridge (brOvly) on all Nutanix nodes. This bridge is isolated, without any link to the physical NIC or to other OVS bridges. To create this bridge, you can use the allssh command from a CVM.
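As a rough sketch of this step, the helper below composes an `allssh` command line that asks every AHV host to create the bridge with `ovs-vsctl add-br`. It assumes that each CVM reaches its local AHV host as `root@192.168.5.1`; the function name and the print-before-run approach are illustrative and are not taken from the original lab.

```python
import shlex

# Assumption: each CVM reaches its local AHV host at this internal address.
AHV_HOST_FROM_CVM = "root@192.168.5.1"


def overlay_bridge_command(bridge: str = "brOvly") -> str:
    """Return an allssh command line that creates an isolated OVS bridge.

    No uplink port is added, so the bridge stays detached from the
    physical NICs and from the other OVS bridges.
    """
    # --may-exist keeps the call idempotent if the bridge already exists.
    per_host = f"ssh {AHV_HOST_FROM_CVM} ovs-vsctl --may-exist add-br {bridge}"
    # allssh runs the quoted command from every CVM in the cluster.
    return f"allssh {shlex.quote(per_host)}"


if __name__ == "__main__":
    # Print the command so it can be reviewed before running it on a CVM.
    print(overlay_bridge_command())
```

Printing the command rather than executing it keeps the sketch safe to run anywhere; the resulting line is meant to be pasted into a CVM shell after review.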

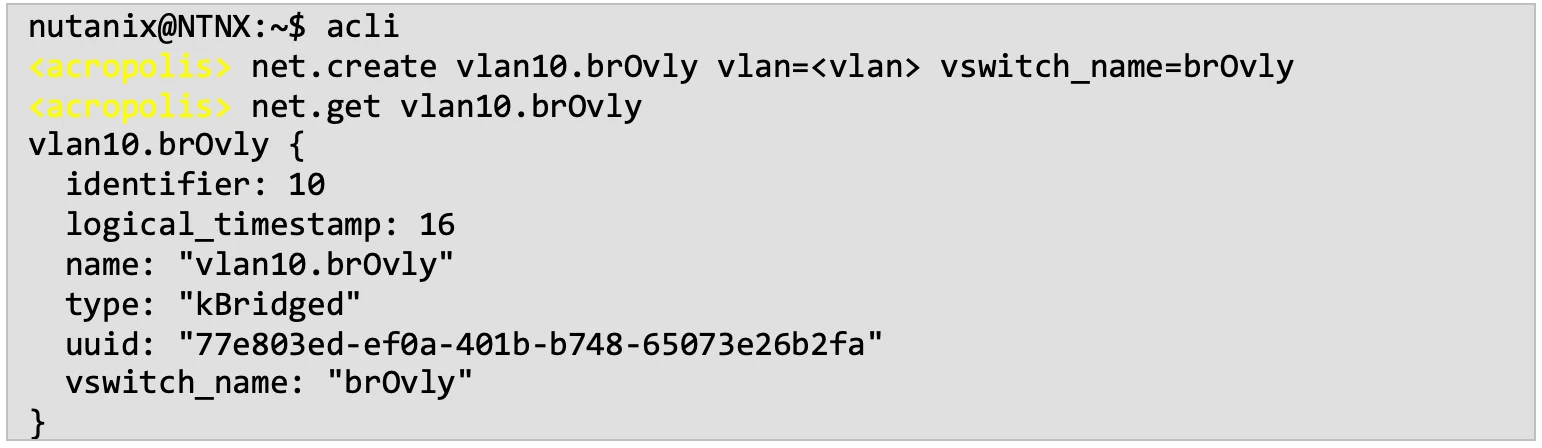

After creating the overlay bridge, create an overlay network that connects the vRouter VM to this bridge. To create this network, we used the Nutanix Acropolis CLI (acli).

Provisioning network

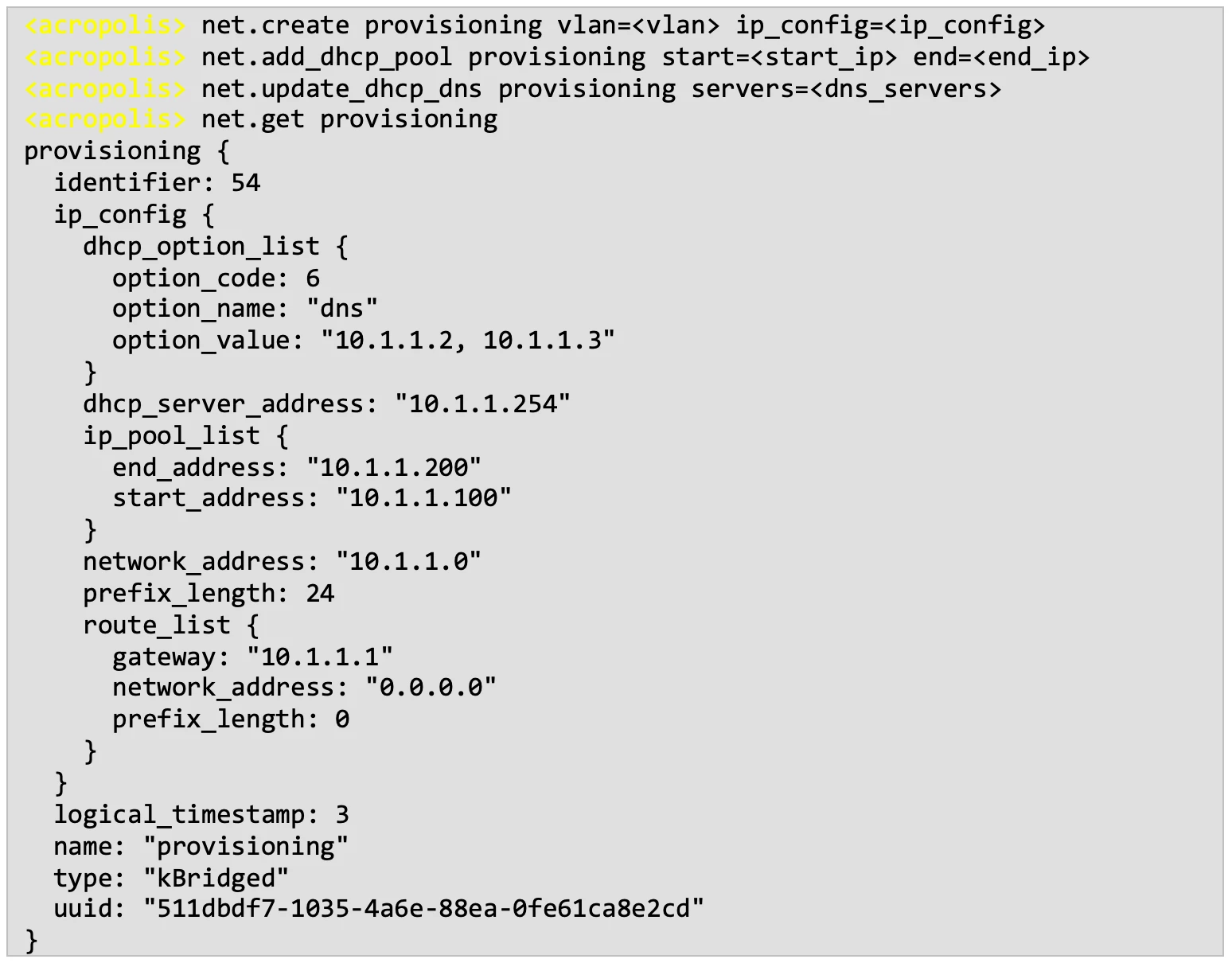

The Contrail deployer, control, and vRouter VMs are all attached to the provisioning network. The Contrail deployer uses this network specifically to install the required packages on all Contrail nodes. In our lab, we created this network with acli, using VLAN ID 54.
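The two acli steps described so far (the overlay network on brOvly and the provisioning network on VLAN 54) can be sketched with a small helper that composes `acli net.create` command lines. The network names `overlay-net` and `provisioning-net` are hypothetical, and the text does not say whether the overlay network was given an explicit VLAN, so the sketch leaves it out.

```python
from typing import Optional


def acli_net_create(name: str, vswitch: Optional[str] = None,
                    vlan: Optional[int] = None) -> str:
    """Compose an `acli net.create` command line for an AHV network."""
    parts = ["acli", "net.create", name]
    if vswitch is not None:
        # Bind the network to a specific bridge instead of the default one.
        parts.append(f"vswitch_name={vswitch}")
    if vlan is not None:
        if not 0 <= vlan <= 4095:
            raise ValueError(f"invalid VLAN ID: {vlan}")
        parts.append(f"vlan={vlan}")
    return " ".join(parts)


if __name__ == "__main__":
    # Network names are hypothetical; the bridge name and the VLAN ID
    # are the ones mentioned in the text.
    print(acli_net_create("overlay-net", vswitch="brOvly"))
    print(acli_net_create("provisioning-net", vlan=54))
```

The generated lines are intended to be run from a CVM, where acli is available.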

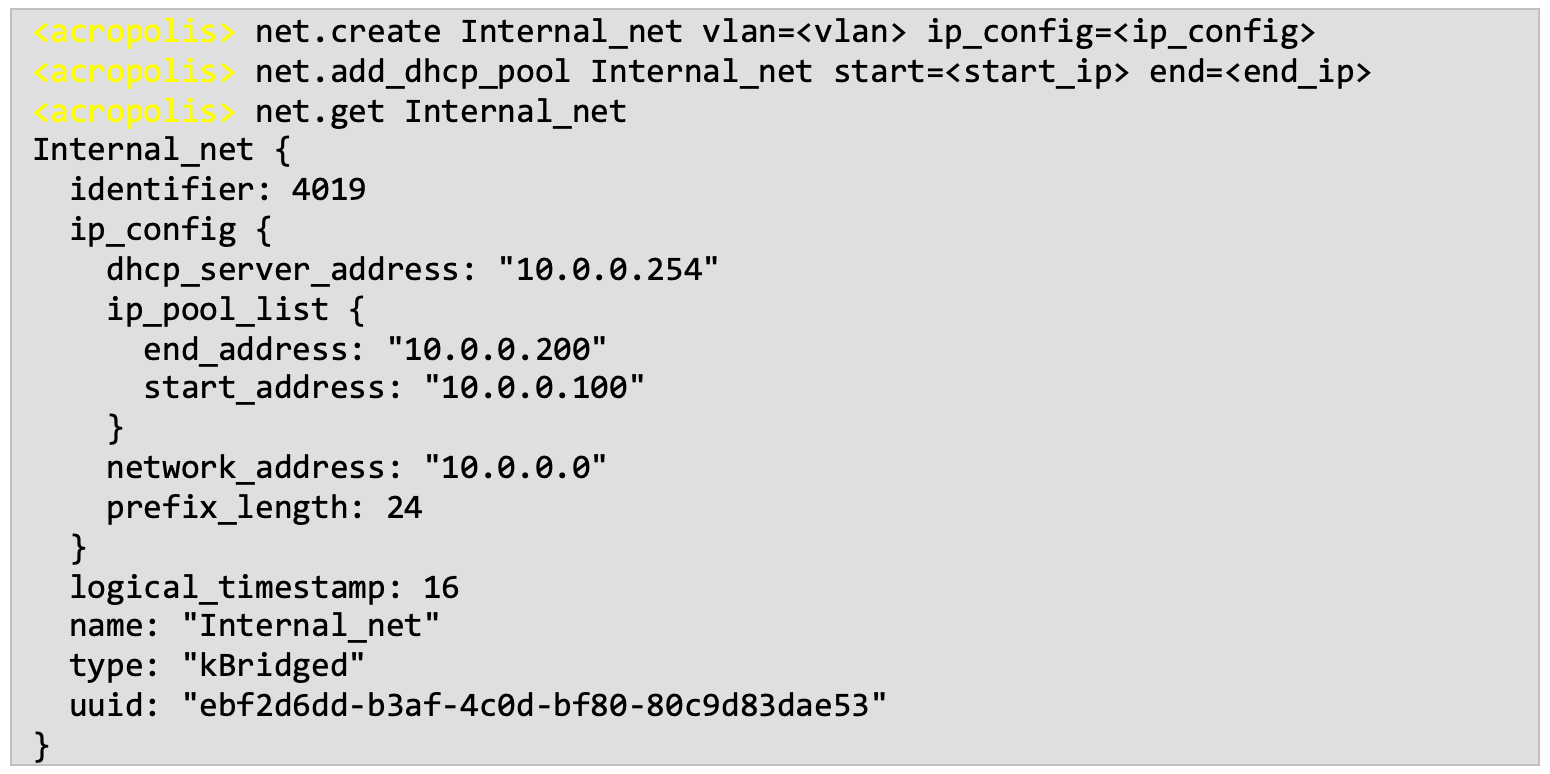

Internal network

The data and control communication between Contrail nodes (Control and vRouter) is done through the internal network. Each Contrail VM is attached to this network.

Contrail VMs

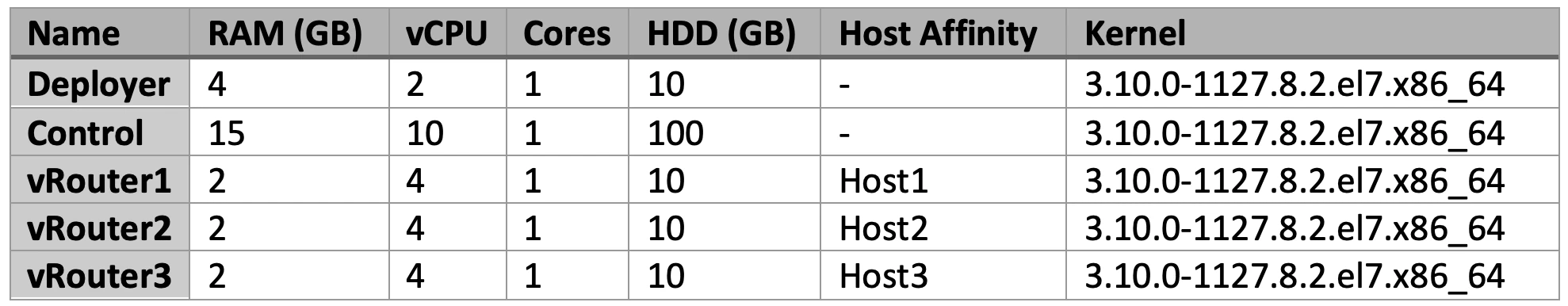

Once all required bridges and networks are created, we can spawn the Contrail VMs, which must exist before the deployment starts. These VMs run CentOS 7. The table below recaps all VMs we use in this deployment, including their resources, their host affinity rule (if any), and their kernel version.

These VMs are deployed via Nutanix Prism Central (PC). For the sake of simplicity, we don’t describe the creation of each VM in detail.

The following table shows the IP address of each Contrail VM interface.

Contrail deployment

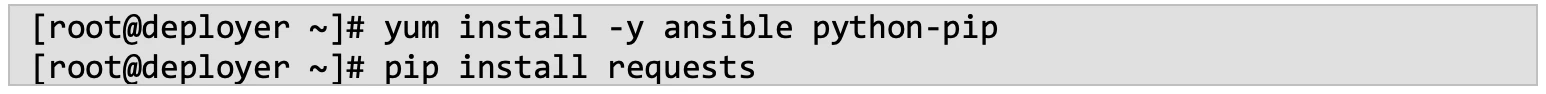

The whole Contrail deployment process runs from the Contrail deployer VM. As mentioned previously, the deployment is done with Ansible. Before going further, we need to install three packages on this VM: ansible, python-pip, and the Python requests library.
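Before moving on, it can help to confirm that these prerequisites are actually present on the deployer VM. The following is a minimal preflight sketch, not part of the original procedure: the function name is hypothetical, and it assumes the script runs with the same Python interpreter that Ansible uses.

```python
import importlib.util
import shutil
from typing import List, Sequence


def missing_prerequisites(commands: Sequence[str] = ("ansible-playbook", "pip"),
                          modules: Sequence[str] = ("requests",)) -> List[str]:
    """Return the names of required tools and modules that are not available."""
    # shutil.which returns None when a command is not found on PATH.
    missing = [cmd for cmd in commands if shutil.which(cmd) is None]
    # find_spec returns None when a top-level module cannot be imported.
    missing += [mod for mod in modules
                if importlib.util.find_spec(mod) is None]
    return missing


if __name__ == "__main__":
    absent = missing_prerequisites()
    if absent:
        print("Missing prerequisites: " + ", ".join(absent))
    else:
        print("All prerequisites found.")
```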

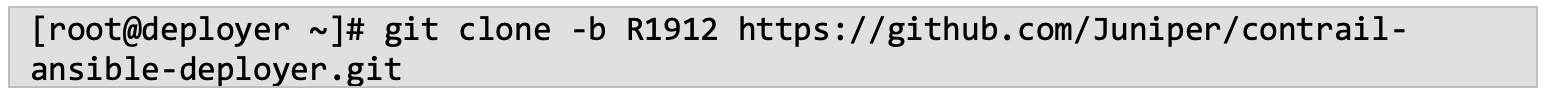

Then, we clone all required Ansible playbooks from Juniper’s GitHub repository.

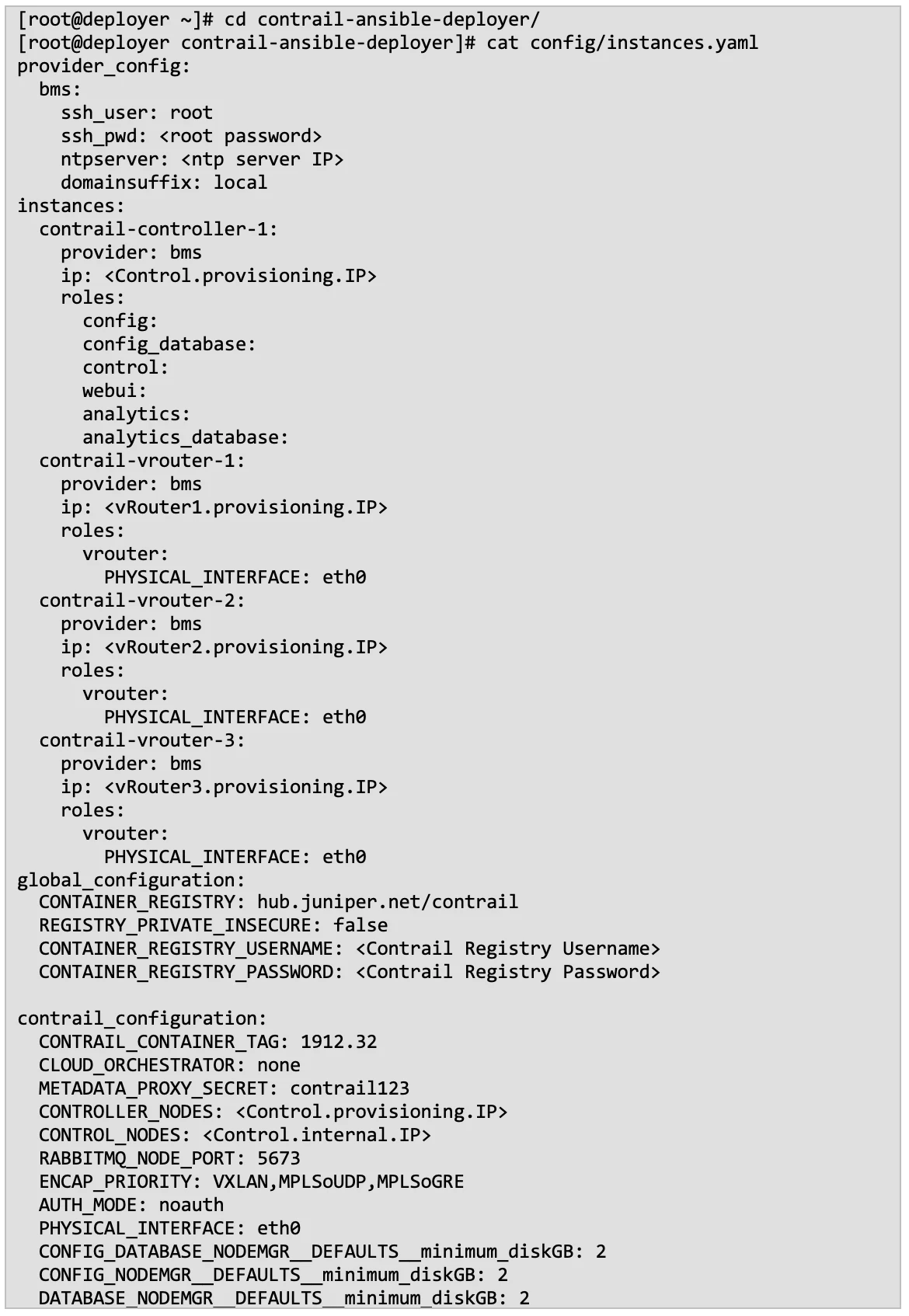

Finally, we customize the instances.yaml file. The following is the instances file we use for this deployment. Note that you need to get the container registry username and password from Juniper Networks.
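To give an idea of the overall shape of such a file, here is a hedged sketch that builds a minimal instances structure for one controller and several vRouters. The key names follow the general layout used by the Contrail Ansible deployer as far as we know it, but the instance names, the `root` SSH user, the role list, and every address and credential are placeholders rather than the values used in this lab. The result is printed as JSON, which YAML 1.2 parsers accept; check it against the deployer's own sample files before use.

```python
import json
from typing import Dict, List

# Assumption: roles for an all-in-one controller without an orchestrator.
CONTROLLER_ROLES = ["config_database", "config", "control",
                    "analytics_database", "analytics", "webui"]


def build_instances(controller_ip: str, vrouter_ips: List[str],
                    registry: str, username: str, password: str) -> Dict:
    """Build a minimal instances structure: one controller plus N vRouters."""
    instances = {
        "contrail-controller": {
            "provider": "bms",
            "ip": controller_ip,
            # Roles are keys with empty values in the instances file.
            "roles": {role: None for role in CONTROLLER_ROLES},
        }
    }
    for index, ip in enumerate(vrouter_ips, start=1):
        instances[f"vrouter-{index}"] = {
            "provider": "bms",
            "ip": ip,
            "roles": {"vrouter": None},
        }
    return {
        "provider_config": {"bms": {"ssh_user": "root"}},  # placeholder user
        "instances": instances,
        "global_configuration": {
            "CONTAINER_REGISTRY": registry,
            "CONTAINER_REGISTRY_USERNAME": username,
            "CONTAINER_REGISTRY_PASSWORD": password,
        },
        "contrail_configuration": {"CONTROLLER_NODES": controller_ip},
    }


if __name__ == "__main__":
    # Documentation-range addresses and dummy credentials, not lab values.
    config = build_instances("192.0.2.10",
                             ["192.0.2.11", "192.0.2.12", "192.0.2.13"],
                             "registry.example", "user", "secret")
    print(json.dumps(config, indent=2))
```

Generating the file from a function keeps the per-vRouter entries consistent when nodes are added, at the cost of one extra step compared with editing the YAML by hand.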

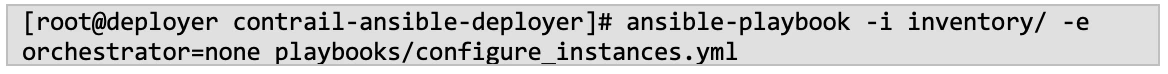

Once the instances file is completed, we use Ansible to configure our instances.

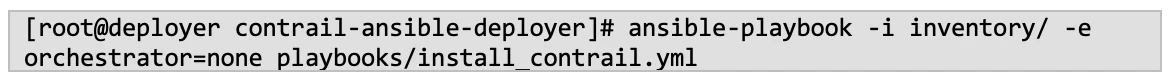

Then we deploy Contrail.
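The two Ansible runs (configure the instances, then install Contrail) can be sketched as an ordered list of `ansible-playbook` invocations. The playbook paths and the `inventory/` directory are assumptions based on the usual layout of the Contrail Ansible deployer repository, and the orchestrator value used in this lab is not shown in the text, so it is left as a required argument; `"none"` below is only a placeholder.

```python
import shlex
from typing import List

# Assumption: playbook paths relative to the cloned deployer repository.
PLAYBOOKS = ["playbooks/configure_instances.yml",
             "playbooks/install_contrail.yml"]


def playbook_commands(orchestrator: str,
                      inventory: str = "inventory/") -> List[List[str]]:
    """Return the ansible-playbook invocations in the order they must run."""
    return [
        ["ansible-playbook", "-i", inventory,
         "-e", f"orchestrator={orchestrator}", playbook]
        for playbook in PLAYBOOKS
    ]


if __name__ == "__main__":
    # "none" is a placeholder; use the value that matches your setup.
    for command in playbook_commands("none"):
        # Printed for review; run them one by one from the repository root.
        print(" ".join(shlex.quote(arg) for arg in command))
```

The order matters: the installation playbook should only be started after the configuration playbook has finished without errors.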

Contrail post deployment

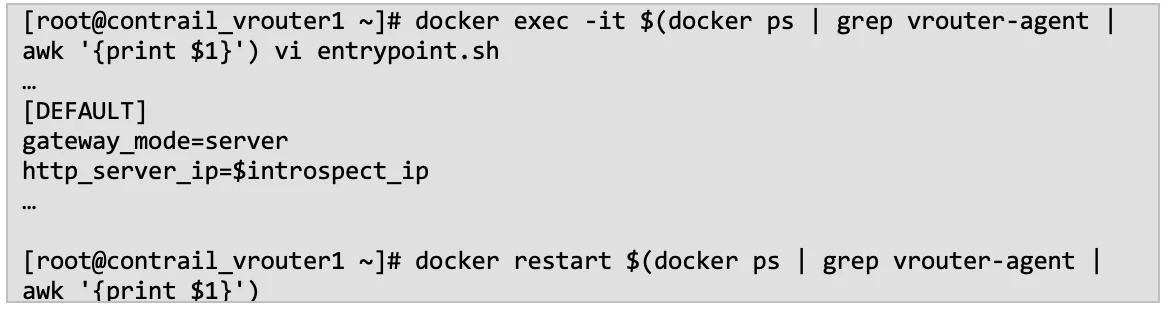

After the deployment completes successfully, activate the vRouter on the Nutanix cluster by enabling gateway mode on each vRouter.

To do so, on each vRouter VM edit the entrypoint.sh of the Contrail vRouter agent container; add “gateway_mode=server” in the [DEFAULT] section of that file; and restart the container.
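The edit itself is a one-line insertion, which the following sketch performs on the text of the file. It is a generic illustration under simple assumptions: the function name is hypothetical, the `[DEFAULT]` header is expected on a line of its own, and locating the file and restarting the container are left out.

```python
def add_default_option(text: str, option: str = "gateway_mode=server") -> str:
    """Insert `option` right after the [DEFAULT] header if it is not there yet."""
    lines = text.splitlines()
    # Leave the text untouched if the exact option line already exists anywhere.
    if option in (line.strip() for line in lines):
        return text
    for index, line in enumerate(lines):
        if line.strip() == "[DEFAULT]":
            lines.insert(index + 1, option)
            break
    else:
        # The loop ended without finding the section header.
        raise ValueError("no [DEFAULT] section found")
    # Preserve the trailing newline of the original text, if any.
    return "\n".join(lines) + ("\n" if text.endswith("\n") else "")


if __name__ == "__main__":
    # Tiny made-up sample, not the real content of entrypoint.sh.
    sample = "[DEFAULT]\nexisting_option=value\n[OTHER]\n"
    print(add_default_option(sample), end="")
```

Because the function returns the text unchanged when the option is already present, it can be applied repeatedly on each vRouter VM without duplicating the line.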

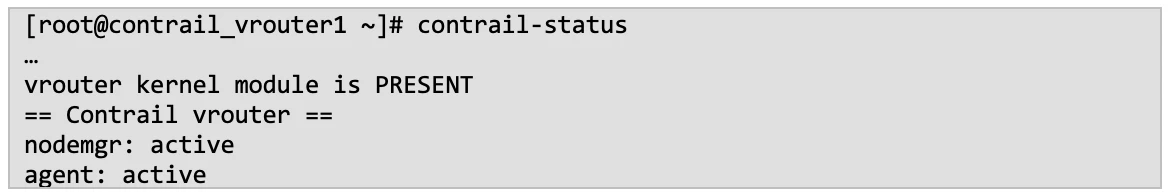

Make sure that the vRouter is started and is running correctly.

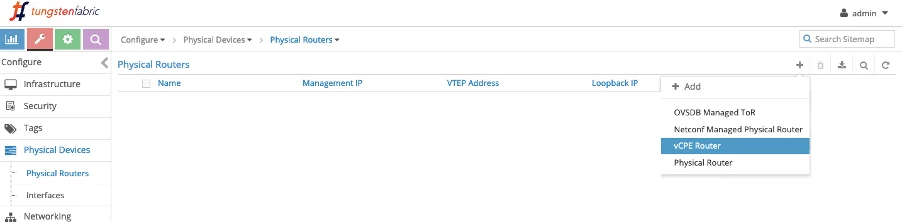

The next step is to add the three vRouters to the Contrail controller and establish the peering between the controller and the vRouters. These steps are done via the Contrail UI.

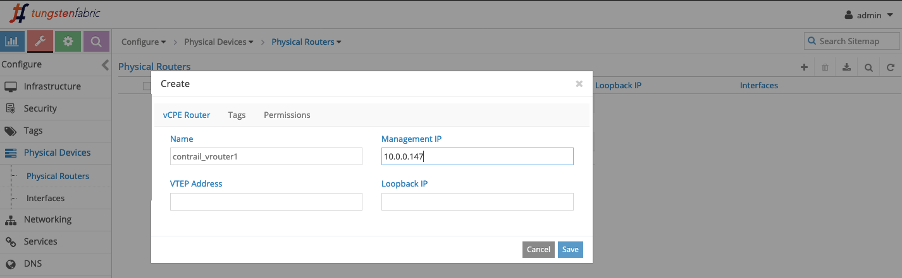

From Contrail UI > Configure > Physical Devices > Physical Routers, click the “+ Add” dropdown menu and choose vCPE Router.

In the popup window, add the vRouter hostname (as returned by the hostname command on the vRouter VM) and its internal IP address.

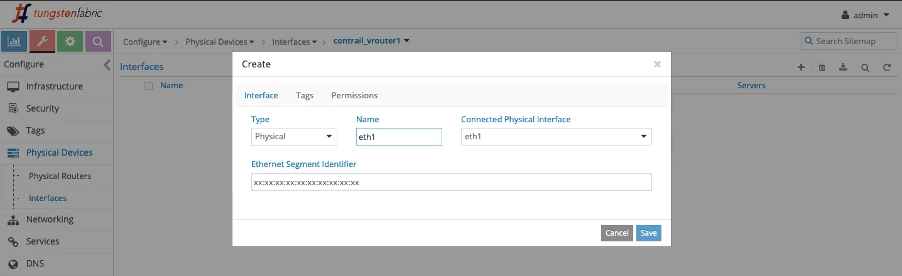

Then add the physical interface to the vRouter. From Configure > Physical Devices > Interfaces, choose the vRouter name and add a new interface (+ button). The type of the interface should be Physical, the name is eth1, and the connected interface is also eth1.

Repeat these two steps to add all the vRouters to the Contrail controller.

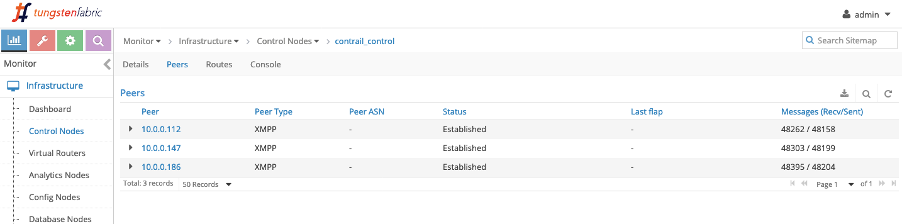

Once all three vRouters are added to the list, go to Monitor > Infrastructure > Control Nodes > contrail_control > Peers, where you can see that the peering between the vRouters and the Contrail controller is established.

In this post, we have discussed how to deploy Contrail on a Nutanix cluster. This example was based on a lab environment, and the VMs were sized for demonstration purposes only. If you want to use such a deployment in a production environment, please review the sizing of the Contrail VMs based on your cluster capacity and Juniper Networks’ recommendations.

© 2020 Nutanix, Inc. All rights reserved. Nutanix, the Nutanix logo and the other Nutanix products and features mentioned on this post are registered trademarks or trademarks of Nutanix, Inc. in the United States and other countries. All other brand names mentioned on this post are for identification purposes only and may be the trademarks of their respective holder(s). This post may contain links to external websites that are not part of Nutanix.com. Nutanix does not control these sites and disclaims all responsibility for the content or accuracy of any external site. Our decision to link to an external site should not be considered an endorsement of any content on such a site.
