Solved

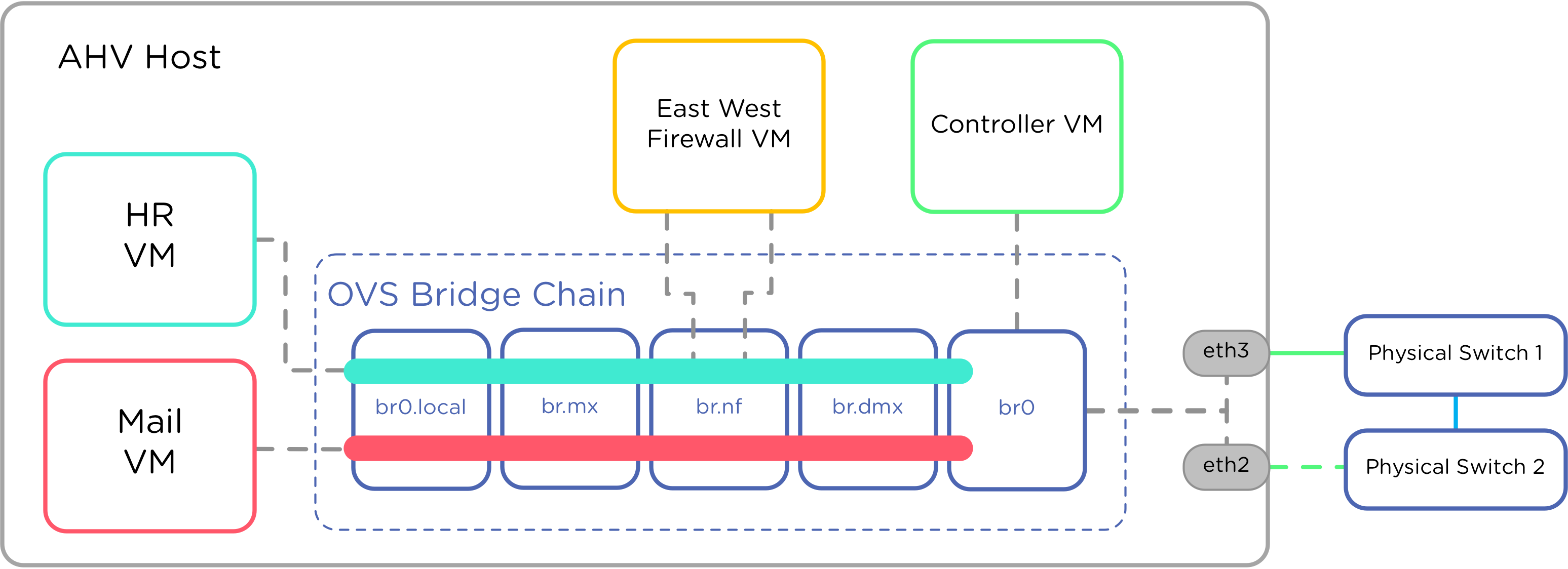

Acropolis open vSwitch

Hi, how many VLANs are supported per Open vSwitch in Acropolis? Is traffic shaping possible in Acropolis Open vSwitch? Thanks in advance. Regards, Vivek

Best answer by Jon

Keep in mind that when you configure a VLAN in Acropolis, it isn't programmed on any OVS until a VM is provisioned on a host. When that happens, we configure a tap device on that host's OVS and program the VLAN on that tap device.

This is a completely different construct from the typical vSwitch, where you program the vSwitch first and then attach VMs to pre-configured "port groups".
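To make the difference concrete, here is a minimal toy model in Python of the behavior described above. It is only a sketch of the idea, not Acropolis or OVS code: the `Cluster` and `Host` classes, the method names, and the `tap-<vm>` naming are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Host:
    """Toy stand-in for one host's OVS: maps tap device name -> VLAN ID."""
    name: str
    taps: Dict[str, int] = field(default_factory=dict)


@dataclass
class Cluster:
    """Toy model: networks are cluster-wide definitions, taps are per-host state."""
    hosts: Dict[str, Host]
    networks: Dict[str, int] = field(default_factory=dict)  # network name -> VLAN ID

    def create_network(self, name: str, vlan_id: int) -> None:
        # Only records the definition; no host's switch is touched here.
        self.networks[name] = vlan_id

    def provision_vm(self, vm: str, network: str, host: str) -> str:
        # When a VM lands on a host, create a tap there and tag it with the VLAN.
        tap = f"tap-{vm}"  # hypothetical naming scheme
        self.hosts[host].taps[tap] = self.networks[network]
        return tap


cluster = Cluster(hosts={"host-a": Host("host-a"), "host-b": Host("host-b")})

cluster.create_network("prod", vlan_id=100)
print(cluster.hosts["host-a"].taps)  # {} : defining the VLAN programs nothing

cluster.provision_vm("vm1", "prod", "host-a")
print(cluster.hosts["host-a"].taps)  # {'tap-vm1': 100}
print(cluster.hosts["host-b"].taps)  # {} : hosts without the VM stay untouched
```

In a port-group model, `create_network` would instead push the VLAN to every host's switch up front, whether or not any VM uses it.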

Traffic shaping is not yet available. If you have a use case for it, please submit a support ticket with priority RFE (Request for Enhancement), so we can track demand for the feature.

This topic has been closed for replies.
