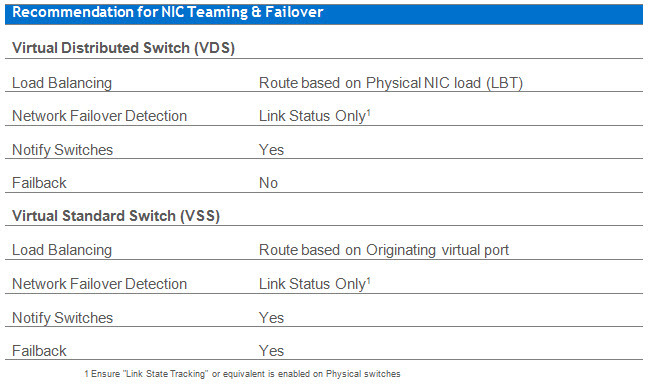

The image above shows the load balancing options available in vSphere 5.5, along with the recommended configuration for Nutanix environments for both Virtual Distributed Switches (VDS) and Virtual Standard Switches (VSS).

NIC Teaming & Failover Recommendation


We use Enhanced LACP on vDS 5.5 to create a LAG across both 10 GbE ports on our NX6220s. Would you recommend this configuration, and if so, which algorithm would you recommend for balancing load across the LAG interfaces? I believe we are currently running in Source IP mode.

With the introduction of Load Based Teaming (LBT) on the Virtual Distributed Switch in vSphere 4.1, the need to consider other forms of NIC teaming and load balancing has, in my opinion, all but been eliminated. That is why, when we released our vNetworking Best Practices Guide (BPG), the VCDXs involved (myself included) concluded that LBT (Option 1 in the BPG) should be our recommendation.

There are many reasons LBT is excellent, including:

1. Simplicity

No dependency on the switch type or configuration. Standard switch ports with 802.1Q VLAN trunking are all that is required for LBT and multiple VLAN support.

No requirement to design IP address ranges around IP Hash so that the hashed source and destination addresses spread traffic evenly across the uplinks.

2. Performance

LBT detects when a NIC's utilization reaches 75% or more and dynamically rebalances VMs across the available NICs in near real time (the distributed switch re-evaluates uplink load every 30 seconds).

3. LBT works with "Link Status" failover policy

No complexity with failover and failback: the "Link Status" failure detection policy, together with "Link State Tracking" on the physical switch (where supported), ensures that link failures are handled automatically.
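
To make the IP Hash point in item 1 concrete, here is a minimal Python sketch of how hash-based uplink selection can skew traffic. It assumes a simplified hash (source IPv4 address XOR destination IPv4 address, modulo the number of uplinks); the exact hash used by vSphere or by the physical switch may differ, and all addresses are hypothetical.

```python
import ipaddress

def ip_hash_uplink(src: str, dst: str, num_uplinks: int) -> int:
    """Simplified IP-hash model (assumption): XOR the two IPv4 addresses, mod uplinks."""
    s = int(ipaddress.IPv4Address(src))
    d = int(ipaddress.IPv4Address(dst))
    return (s ^ d) % num_uplinks

def uplink_counts(src_ips, dst, num_uplinks):
    """Count how many source IPs land on each uplink when talking to one destination."""
    counts = [0] * num_uplinks
    for src in src_ips:
        counts[ip_hash_uplink(src, dst, num_uplinks)] += 1
    return counts

target = "10.0.0.2"                                      # hypothetical single destination
sequential = [f"10.0.0.{i}" for i in range(10, 20)]      # .10 .. .19
even_only = [f"10.0.0.{i}" for i in range(10, 30, 2)]    # .10, .12, .. .28

print(uplink_counts(sequential, target, 2))  # [5, 5]
print(uplink_counts(even_only, target, 2))   # [10, 0]
```

With sequential addresses the two uplinks split evenly, but if every VM happens to have an even last octet and they all talk to the same destination, every flow lands on the same uplink in this model. LBT sidesteps this class of problem because it balances on measured load rather than on addresses.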

In Summary

In my opinion, LACP would at best provide a minimal benefit under very limited circumstances. With the Route based on physical NIC load (LBT) setting, if a vmnic exceeds 75% utilization, traffic is dynamically spread over the other available adapters. As such, LACP is not really required, and with multiple 10 Gb connections I have not seen an environment where the network was truly a bottleneck that needed to be addressed.
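
The threshold behavior described above can be sketched as a simplified model. VMware's documentation describes the distributed switch testing uplink load every 30 seconds and, when an uplink exceeds 75% usage, moving the port of the VM with the highest I/O to a different uplink. The sketch below runs a single evaluation pass over steady per-VM loads; the move order (busiest port first, skipping any move that would overload the target), the VM names, and the loads are assumptions for illustration, not the vDS implementation.

```python
from dataclasses import dataclass, field

THRESHOLD = 0.75  # utilization at which this model starts moving ports

@dataclass
class Uplink:
    name: str
    capacity_mbps: float
    ports: dict = field(default_factory=dict)  # VM port name -> steady load (Mbps)

    def load(self) -> float:
        return sum(self.ports.values())

    def utilization(self) -> float:
        return self.load() / self.capacity_mbps

def rebalance(uplinks):
    """One evaluation pass of a simplified LBT-style model; returns the moves made."""
    moves = []
    if len(uplinks) < 2:
        return moves  # nothing to balance across
    for src in uplinks:
        # Try the busiest ports first; sorted() copies, so deleting below is safe.
        for port, mbps in sorted(src.ports.items(), key=lambda kv: kv[1], reverse=True):
            if src.utilization() < THRESHOLD:
                break  # this uplink is back under the threshold
            dst = min((u for u in uplinks if u is not src), key=lambda u: u.utilization())
            if (dst.load() + mbps) / dst.capacity_mbps >= THRESHOLD:
                continue  # moving this port would only overload the target
            del src.ports[port]
            dst.ports[port] = mbps
            moves.append((port, src.name, dst.name))
    return moves

links = [
    Uplink("vmnic0", 10_000, {"vm1": 4000, "vm2": 3000, "vm3": 1500}),  # 85% busy
    Uplink("vmnic1", 10_000, {"vm4": 1000}),                            # 10% busy
]
print(rebalance(links))                             # [('vm1', 'vmnic0', 'vmnic1')]
print([round(u.utilization(), 2) for u in links])   # [0.45, 0.5]
```

No switch-side configuration appears anywhere in this model: the decision uses only the host's own view of uplink load, which is the simplicity argument made in item 1.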

If there were a bottleneck, Route based on physical NIC load combined with Network I/O Control (NIOC) would be an excellent solution without the complexity of LACP.
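
As a rough illustration of how NIOC divides a saturated uplink, here is a minimal Python sketch of share-based allocation: each active traffic type receives bandwidth in proportion to its shares, and idle traffic types take no part in the split. The traffic-type names and share values are hypothetical; the model assumes the uplink is saturated (shares only come into play under contention) and ignores limits.

```python
def nioc_allocation(link_mbps, shares, active):
    """Split a saturated uplink among the active traffic types by their shares."""
    total = sum(shares[t] for t in active)
    if total == 0:
        return {}  # nothing active, nothing to divide
    return {t: link_mbps * shares[t] / total for t in active}

# Hypothetical custom share values for one 10 Gb uplink.
shares = {"vm": 50, "nfs": 30, "vmotion": 15, "management": 5}

# All four traffic types contending for the link.
print(nioc_allocation(10_000, shares, ["vm", "nfs", "vmotion", "management"]))
# {'vm': 5000.0, 'nfs': 3000.0, 'vmotion': 1500.0, 'management': 500.0}

# Only VM and NFS traffic active: their shares now divide the whole link.
print(nioc_allocation(10_000, shares, ["vm", "nfs"]))
# {'vm': 6250.0, 'nfs': 3750.0}
```

The second call shows that shares are relative: when vMotion and management traffic are idle, VM and NFS traffic divide the entire link between them.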

Here is a link to our full BPG: http://go.nutanix.com/TechGuide-Nutanix-VMwarevSphereNetworkingonNutanix_LP.html

Very thorough explanation, thanks! We went with LACP as a natural progression from our previous blade technology; we will probably look at migrating to Load Based Teaming as we grow our cluster.

Solid information.

I am assuming you are using active/active (A/A) uplinks in that particular port group. My question is: why is Failback set to No? In my experience, I have always configured RSTP with PortFast on the uplink ports, or worked with the network team to do so.

I saw something about this mentioned on his blog as well (for the VMkernel-connected management port group), and I am curious why this is the configuration of choice.

I came across this thread while preparing a brand new cluster that we will be putting into production soon. Are the settings above recommended for all port groups (i.e., management, CVM, and VM)? I would appreciate any insight.

We also use LACP with the fast rate as our standard, because we must provide transparent failover to our users.

What convergence times can we expect from LBT compared with LACP?

Thanks,
