Hi guys, we're planning to migrate our MS SQL workload from a physical standalone host to a new Nutanix cluster. We have already engaged our local Nutanix representative for this. However, we encountered a few performance hurdles during testing, and we can't be sure whether we have the right sizing for our performance requirements.

Current Production Physical host:-

Processor: Intel Xeon E5-2690v3 @ 2.6GHz (single socket, 12C 24T)

RAM: 96GB (However only 64GB usable for the MS SQL 2008R2 DB)

Disks: The database MDF resides in an SSD RAID 5 disk group (6 disks). The OS, LDF, etc. reside in other disk groups (10K and 15K SAS disks in RAID 10, RAID 1, etc.).

Test Unit (3 nodes):-

Processor: Intel Xeon E5-2650 v4 @ 2.2 GHz (dual socket, 24C 48T) per node

RAM: 512GB per node

Disks: 6 x 1.6TB Intel S3610 SATA (All Flash)

Hypervisor: AHV 5.9.2.4

Network: 10GbE RJ45

SQL Test VM created in Nutanix test cluster:-

12 vCPU

96GB RAM

CVM size:

12 vCPU

32GB RAM

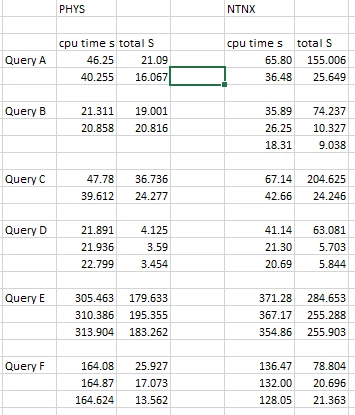

Test results from MS SQL with SET STATISTICS TIME ON:

Questions:

We tested different types of queries and compared the results from the physical host and the test unit. However, the results were not what we expected.

As observed above, the initial run of each query on Nutanix took much longer than on the physical host.

The initial run always reads from disk, and the query is then "cached" in RAM.

Hence the second run of the query gives almost the same result on both Nutanix and the physical host.

We have tentatively identified the lower CPU clock speed as a possible reason for the difference in speed, but we do not have concrete proof of this.
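One check we could run ourselves is to split each run into CPU time and everything else, using the "CPU time = ... ms, elapsed time = ... ms" lines that SET STATISTICS TIME ON prints. Below is a minimal sketch, assuming the messages output is pasted in as text. The function names and the sample numbers are placeholders for illustration, not our actual measurements.

```python
import re

# Matches the timing line printed by SET STATISTICS TIME ON, e.g.
#   "CPU time = 1500 ms,  elapsed time = 6000 ms."
TIMING = re.compile(r"CPU time = (\d+) ms,\s+elapsed time = (\d+) ms")


def total_times(messages):
    """Sum CPU and elapsed milliseconds over every timing line in the text."""
    cpu = elapsed = 0
    for match in TIMING.finditer(messages):
        cpu += int(match.group(1))
        elapsed += int(match.group(2))
    return cpu, elapsed


def cpu_share(messages):
    """Fraction of elapsed time spent on CPU (can exceed 1.0 for parallel plans)."""
    cpu, elapsed = total_times(messages)
    return cpu / elapsed if elapsed else 0.0


# Placeholder output, NOT a real measurement from either system.
sample = (
    " SQL Server Execution Times:\n"
    "   CPU time = 1500 ms,  elapsed time = 6000 ms.\n"
)
print(total_times(sample), cpu_share(sample))
```

If the first run shows similar CPU time on both systems but a much larger elapsed time on Nutanix, the extra time would be spent waiting (most likely on disk reads) rather than on the CPU, which would point away from clock speed.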

1) How can we be sure that it's due to the lower clock speed of the CPU?

2) How do we know what's the maximum performance we can yield from the cluster?
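For question 1, a rough sanity check is to compare the observed slowdown with what the base clock ratio alone would predict. This is only a sketch under strong assumptions: the query is purely CPU-bound and single-threaded, and turbo boost, per-clock differences between the v3 and v4 CPUs, and hypervisor overhead are all ignored. The helper names and the 10% tolerance are hypothetical.

```python
def expected_slowdown(old_ghz, new_ghz):
    """Slowdown factor predicted by base clock speed alone (assumption: CPU-bound)."""
    return old_ghz / new_ghz


def clock_explains(observed_slowdown, old_ghz, new_ghz, tolerance=0.10):
    """True if the observed slowdown is within tolerance of the clock-only estimate."""
    return observed_slowdown <= expected_slowdown(old_ghz, new_ghz) * (1 + tolerance)


# Base clocks from the specs above: 2.6 GHz (physical) vs 2.2 GHz (Nutanix nodes).
ratio = expected_slowdown(2.6, 2.2)
print(f"{ratio:.2f}")  # 1.18
```

So clock speed alone would account for roughly an 18% longer run time at most. If the first run on Nutanix is several times slower, the clock difference cannot be the whole explanation.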

Question

How to "size" a Nutanix cluster based on performance requirement?

