Prism Central in Azure provides the control plane for Flow Virtual Networking. The subnet for Prism Central is delegated to Microsoft.BareMetal/AzureHostedService, so you can use native Azure networking to distribute IP addresses for Prism Central.

Once you deploy Prism Central, the Flow Virtual Networking Gateway (FGW) VM deploys into the same VNet that Prism Central uses. The FGW enables communication between guest VMs in the VPCs and native Azure services. With the FGW, guest VMs have parity with native Azure VMs for features such as:

- User-defined routes: You can create custom or user-defined (static) routes in Azure to override Azure’s default system routes or to add routes to a subnet’s route table. In Azure, you create a route table, then associate it with zero or more virtual network subnets.

- Load balancer deployment: You can load-balance services offered by guest VMs with the native Azure load balancer.

- Network security groups: You can write stateful firewall policies for guest VM traffic.
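To make the user-defined route behavior in the list above more concrete, here is a minimal Python sketch of how Azure picks a route for a destination from a subnet's effective routes: the longest matching prefix wins, and when prefixes tie, a user-defined route beats a BGP route, which beats a system route. The `Route` type, the `select_route` function, and every address are hypothetical illustrations rather than an Azure API, and the sketch models a single subnet's routes only.

```python
import ipaddress
from dataclasses import dataclass

# Tie-break order when two routes have the same prefix length:
# user-defined beats BGP, which beats system (lower value wins).
SOURCE_PRIORITY = {"user": 0, "bgp": 1, "system": 2}


@dataclass(frozen=True)
class Route:
    prefix: str    # address prefix, e.g. "10.0.0.0/16"
    next_hop: str  # free-form next-hop label for illustration
    source: str    # "user", "bgp", or "system"


def select_route(routes, destination):
    """Return the route chosen for `destination`, or None if nothing matches."""
    dest = ipaddress.ip_address(destination)
    matches = [r for r in routes if dest in ipaddress.ip_network(r.prefix)]
    if not matches:
        return None
    # Longest prefix first; on a tie, prefer the higher-priority source.
    return min(
        matches,
        key=lambda r: (-ipaddress.ip_network(r.prefix).prefixlen,
                       SOURCE_PRIORITY[r.source]),
    )


# Hypothetical effective routes for one subnet.
routes = [
    Route("10.0.0.0/16", "VirtualNetwork", "system"),
    Route("0.0.0.0/0", "Internet", "system"),
    Route("0.0.0.0/0", "VirtualAppliance 10.0.1.4", "user"),
]

print(select_route(routes, "8.8.8.8").next_hop)   # VirtualAppliance 10.0.1.4
print(select_route(routes, "10.0.2.5").next_hop)  # VirtualNetwork
```

Here the user-defined default route overrides Azure's system default route for internet-bound traffic, while traffic to 10.0.2.5 still follows the more specific VNet system route.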

The FGW handles all north-south VM traffic from the cluster. During deployment, you can choose an FGW size based on how much bandwidth you need. Traffic to the bare-metal nodes and to Prism Central doesn't pass through the FGW VMs.

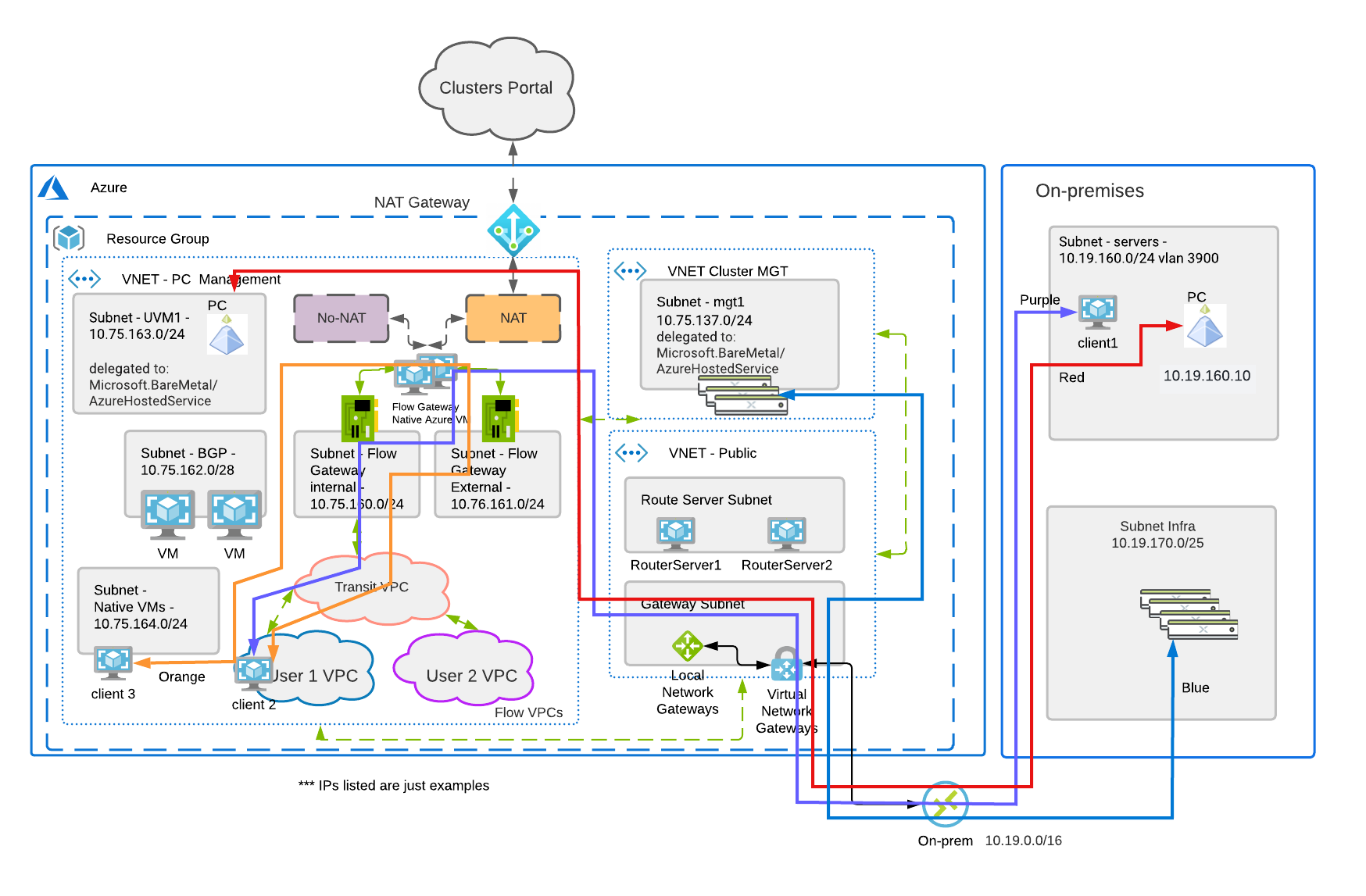

The diagram above shows the network paths of various resources in Azure with NC2. The on-premises network is extended to Azure through an Azure Virtual Network Gateway. The purple path starts in an on-premises datacenter and flows through the FGW VMs, so consider both FGW and VPN throughput when sizing.

The red path starts at the on-premises Prism Central and connects to Prism Central in Azure, a common scenario for DR and migrations. This path stays outside the FGW because it doesn't connect resources running on the NC2 cluster.

The blue path starts at the on-premises CVMs (the storage controllers running on the nodes) and connects to the CVMs of the NC2 Azure cluster. This is the typical network path for replicating VMs and volume groups from on-premises to the NC2 cluster. This traffic flows through the Virtual Network Gateway but not through the FGW.

The orange path starts at client 3 in a native Azure subnet and connects to client 2 running on the NC2 Azure cluster. This traffic flows through the FGW to reach client 2.
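The four colored paths follow two rules from the text: traffic from on-premises enters Azure through the Virtual Network Gateway, and only north-south traffic to or from guest VMs crosses the FGW. The Python sketch below encodes those rules and checks them against the diagram. The `Location` categories and the `hops` function are hypothetical, and it assumes the purple path ends at a guest VM on the NC2 cluster.

```python
from enum import Enum


class Location(Enum):
    ON_PREM = "on-premises"
    AZURE_NATIVE = "native Azure subnet"
    NC2_GUEST_VM = "guest VM on the NC2 cluster"
    NC2_INFRA = "NC2 bare-metal node (AHV/CVM) or Prism Central"


def hops(src: Location, dst: Location) -> list:
    """Return the gateways a flow crosses under this sketch's two rules."""
    path = []
    # Rule 1: traffic to or from on-premises uses the Azure Virtual Network Gateway.
    if Location.ON_PREM in (src, dst):
        path.append("Virtual Network Gateway")
    # Rule 2: north-south traffic to or from a guest VM crosses the FGW.
    # Bare-metal nodes and Prism Central sit outside the FGW path, and
    # guest-to-guest (east-west) flows are not modeled as crossing it.
    if Location.NC2_GUEST_VM in (src, dst) and src != dst:
        path.append("FGW")
    return path


# Endpoints of the colored paths in the diagram.
DIAGRAM_PATHS = {
    "purple": (Location.ON_PREM, Location.NC2_GUEST_VM),
    "red": (Location.ON_PREM, Location.NC2_INFRA),     # Prism Central to Prism Central
    "blue": (Location.ON_PREM, Location.NC2_INFRA),    # CVM to CVM replication
    "orange": (Location.AZURE_NATIVE, Location.NC2_GUEST_VM),
}

for color, (src, dst) in DIAGRAM_PATHS.items():
    print(f"{color}: {src.value} -> {dst.value} via {hops(src, dst) or ['direct']}")
```

Only the purple and orange paths involve guest VMs, so they are the ones that count against FGW bandwidth.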

Flow Gateway Bandwidth Sizing Best Practices

- Start with a small FGW size, because you can always scale up after deployment.

- Include backup traffic in your sizing if you use agent-based backup in your VMs. Prefer backup vendors that use the Nutanix backup APIs.
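The sizing practices above, together with the earlier point that on-premises traffic on the purple path also depends on VPN throughput, can be sketched as a small calculation. Everything here is hypothetical: the size names, their bandwidth figures, and the 20% headroom are placeholders rather than published FGW sizes, so substitute the options offered during your deployment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GatewaySize:
    name: str
    gbps: float  # HYPOTHETICAL capacity; use the figures for your deployment


# HYPOTHETICAL size tiers, ordered smallest to largest.
SIZES = [
    GatewaySize("small", 1.0),
    GatewaySize("medium", 5.0),
    GatewaySize("large", 10.0),
]


def pick_fgw_size(app_gbps, agent_backup_gbps=0.0, headroom=0.2, sizes=SIZES):
    """Return the smallest tier that covers north-south demand plus headroom."""
    # Agent-based backup runs inside guest VMs, so it adds to FGW demand.
    required = (app_gbps + agent_backup_gbps) * (1 + headroom)
    for size in sizes:
        if size.gbps >= required:
            return size
    return None  # demand exceeds every tier in this sketch


def effective_onprem_gbps(fgw, vpn_gbps):
    """On-premises flows through the FGW are capped by the slower of FGW and VPN."""
    return min(fgw.gbps, vpn_gbps)


print(pick_fgw_size(0.5).name)                         # small
print(pick_fgw_size(0.5, agent_backup_gbps=1.5).name)  # medium
print(effective_onprem_gbps(SIZES[1], vpn_gbps=1.25))  # 1.25
```

Choosing the smallest tier that fits follows the "start small" advice. If demand grows, you rerun the check and scale up.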

Guest VMs on the NC2 cluster that communicate with AHV, CVMs, Prism Central, and Azure resources use the external network card on the FGW VM, and NAT translates their traffic to a native Azure address so it can be routed to all resources. If you don't want to use NAT, you can instead provide user-defined routes in Azure so guest VMs communicate directly with Azure resources. With NAT, fresh installs can communicate with Azure right away; user-defined routes give you more advanced configuration options.
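A minimal Python sketch of the trade-off between NAT and user-defined routes: with NAT, Azure resources see the FGW's native Azure address, which the VNet already routes. Without NAT, they see the guest VM's VPC address, and replies only work once Azure has a route, such as a user-defined route, covering the VPC prefix. The function names, the `"nat"`/`"routed"` mode labels, and all addresses are hypothetical, and the sketch ignores details such as return-path next hops.

```python
import ipaddress


def outbound_source_ip(guest_ip, mode, fgw_external_ip):
    """Source address an Azure resource sees for traffic from a guest VM."""
    if mode == "nat":
        # NAT: the FGW rewrites the source to its native Azure address.
        return fgw_external_ip
    if mode == "routed":
        # No NAT: the guest VM's own VPC address is preserved.
        return guest_ip
    raise ValueError(f"unknown mode: {mode}")


def reply_is_routable(source_ip, vnet_prefix, udr_prefixes):
    """True if Azure can route a reply: the address is in the VNet or covered by a UDR."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in ipaddress.ip_network(p) for p in [vnet_prefix, *udr_prefixes])


# Hypothetical addressing: VNet 10.0.0.0/16, FGW external NIC 10.0.1.10,
# guest VM 192.168.10.5 in a VPC using 192.168.10.0/24.
VNET, FGW_IP, GUEST_IP = "10.0.0.0/16", "10.0.1.10", "192.168.10.5"

nat_src = outbound_source_ip(GUEST_IP, "nat", FGW_IP)
routed_src = outbound_source_ip(GUEST_IP, "routed", FGW_IP)
print(reply_is_routable(nat_src, VNET, []))                        # True
print(reply_is_routable(routed_src, VNET, []))                     # False
print(reply_is_routable(routed_src, VNET, ["192.168.10.0/24"]))    # True
```

The first check mirrors why a fresh install works with NAT immediately. The last two show that the no-NAT option depends on adding the user-defined route.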

If you have any questions about Azure networking in NC2, please ask away.