This may be an obvious question, but it's probably so obvious that I am overthinking it.

Model: NX-6035-G4

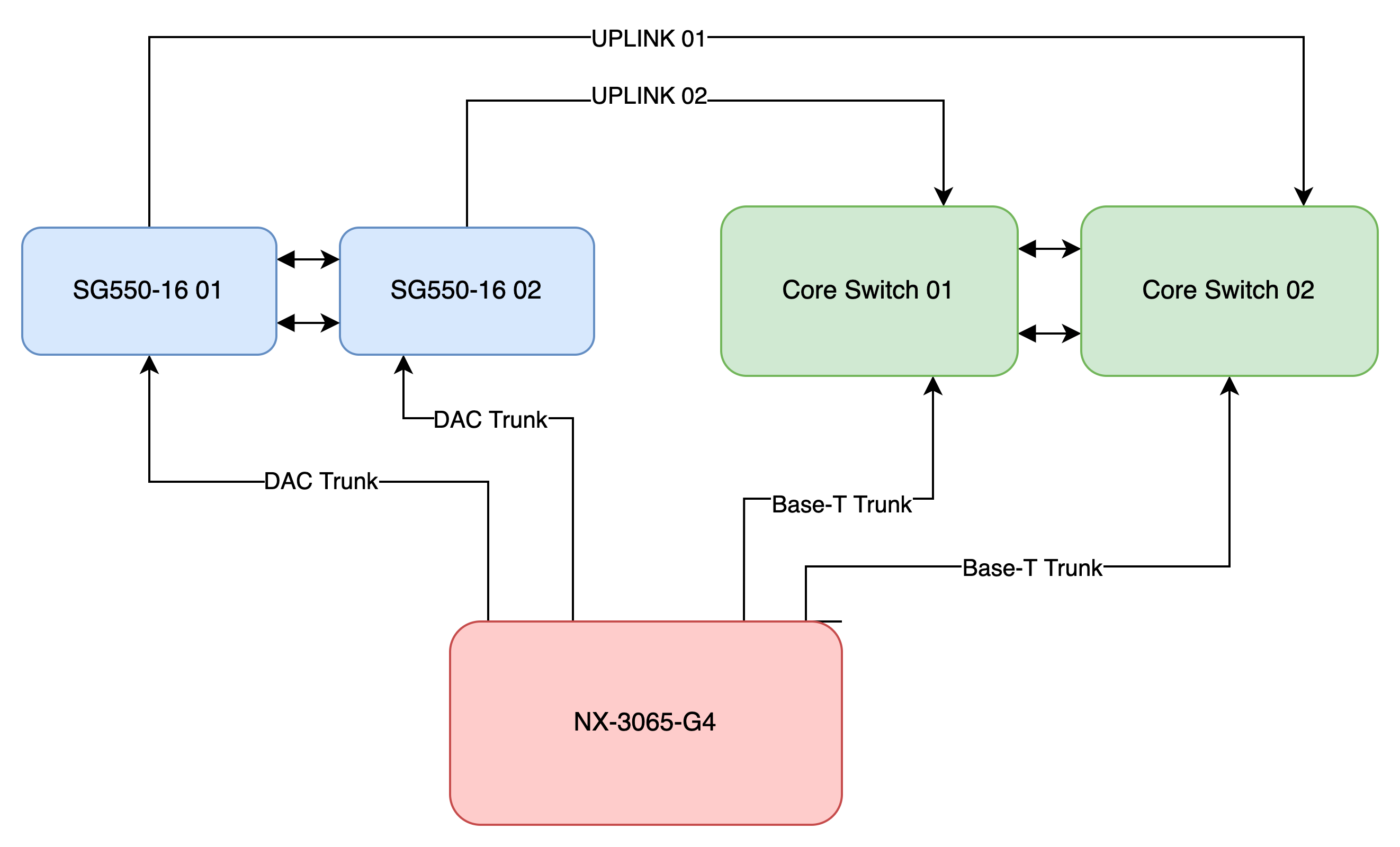

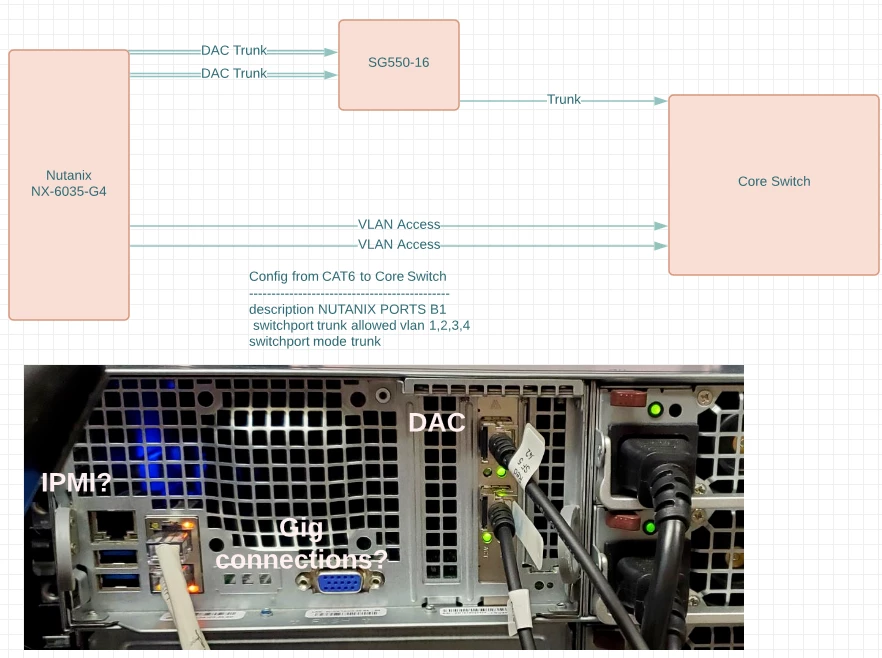

I came into an environment where there are 5 clusters. Each one has both NICs on each node connected directly to the core switch, with each port set to VLAN access on the core switch.

The SFP ports are also running DAC cables to a 10 Gig switch that is just in trunk mode. That switch then trunks to the core switch.

So are you supposed to use both? I thought you only had to use one or the other. Normally this wouldn't matter, but the core switch is oversaturated and I could remove 10 unnecessary cables.

I drew a diagram in case this was too wordy.

Best answer by bcaballero
