Acropolis open vSwitch

Hi, how many VLANs are supported per Open vSwitch in Acropolis? Is traffic shaping possible in Acropolis Open vSwitch? Thanks in advance. Regards, Vivek

Valid IDs are 0-4094, so the maximum number of VLANs allowed would be 4095 if you include the VLAN that doesn't tag (ID 0).
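For anyone wondering where that number comes from, here is a minimal sketch of the arithmetic. It assumes the standard 802.1Q layout, where the VLAN ID field is 12 bits wide and the top value (4095) is reserved; the variable names are just for illustration.

```python
VID_BITS = 12                    # width of the 802.1Q VLAN ID field
all_values = 2 ** VID_BITS       # 4096 possible values, 0 through 4095
RESERVED_TOP = 0xFFF             # 4095 is reserved and cannot be assigned

# Everything except the reserved top value, counting ID 0 as "untagged".
valid_ids = [vid for vid in range(all_values) if vid != RESERVED_TOP]

print(min(valid_ids), max(valid_ids), len(valid_ids))
```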

No, we have not enabled traffic shaping in OVS. I certainly know there are valid use cases, and we've been working on a few of them internally already.

For most use cases, keep in mind that in Nutanix each node has full network access, so (for example) a 3-node cluster would have, at minimum, 60 Gbit/s of bandwidth going into it (assuming 2x 10 Gbit/s per node). That math, of course, goes up linearly with node count or with an increase in NIC speed (like 25/40/100G interfaces).
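A quick sketch of that math, in case you want to plug in your own numbers. The function name and defaults are made up for this example; it only multiplies out nodes, NICs per node, and NIC speed, and says nothing about real-world throughput.

```python
def cluster_bandwidth_gbits(nodes: int, nics_per_node: int = 2, nic_gbits: int = 10) -> int:
    """Aggregate NIC bandwidth into the cluster, in Gbit/s (simple product)."""
    return nodes * nics_per_node * nic_gbits

# Scales linearly with node count...
for nodes in (3, 4, 8):
    print(nodes, "nodes:", cluster_bandwidth_gbits(nodes), "Gbit/s")

# ...and with NIC speed.
print("3 nodes at 25G:", cluster_bandwidth_gbits(3, nic_gbits=25), "Gbit/s")
```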

For folks like Service Providers, this makes more sense, so that they can shape the traffic of specific tenants or applications within a tenant, which is where we've been exploring this use internally.

On a related note, we're releasing service chaining with OVS in the very next release as part of the microsegmentation feature, which is quite interesting.

No worries, everyone's gotta start somewhere.

In general, it's not a problem for the reasons I mentioned: you've got copious amounts of bandwidth, and live migration events are relatively rare in Nutanix. Combined with data locality, where reads are mostly kept off the network, those network adapters will be sitting at lower utilization than you'd expect.

We're huge fans of the KISS principle here at Nutanix, as most things "just work", which is quite nice.

That said, it's good to know what's what and the reasoning behind what we do, so I'd recommend checking out the AHV networking guide here: https://portal.nutanix.com/#/page/solutions/details?targetId=BP-2071-AHV-Networking:BP-2071-AHV-Networking

That should give you some good background. After reading it, you'll likely want to use either balance-slb or balance-tcp as the load balancing policy on the OVS side, which gives you better load distribution than the default (active-backup). Active-backup is the default simply because it's the most compatible with almost anyone's network setup, so it's very easy to get going.

Even if you kept the default, though, you'll still have copious amounts of bandwidth that scales linearly per node.
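To make the difference between the three bond modes a bit more concrete, here is a toy model in Python. It is only a sketch under simplifying assumptions: the uplink names are hypothetical, `zlib.crc32` is a stand-in for whatever hash OVS really uses, and the periodic rebalancing that balance-slb does is left out. The idea it illustrates is that active-backup sends everything over one link, balance-slb keys on the source MAC and VLAN (so one VM NIC uses one uplink at a time), and balance-tcp also keys on flow-level fields (so one VM's flows can spread, which in practice needs LACP on the switch side).

```python
import zlib

UPLINKS = ["eth0", "eth1"]  # hypothetical names for the 2x 10 Gbit NICs


def pick_uplink(mode, src_mac, vlan=0, flow=None, active="eth0"):
    """Return the uplink a frame would use under this simplified bond model."""
    if mode == "active-backup":
        return active                          # one link carries everything
    if mode == "balance-slb":
        key = f"{src_mac}/{vlan}"              # per source MAC + VLAN
    elif mode == "balance-tcp":
        key = f"{src_mac}/{vlan}/{flow}"       # per flow (L3/L4 fields included)
    else:
        raise ValueError(f"unknown bond mode: {mode}")
    # crc32 is a placeholder hash, not the one OVS uses.
    return UPLINKS[zlib.crc32(key.encode()) % len(UPLINKS)]


mac = "50:6b:8d:00:00:01"
flows = [("10.0.0.5", 443, p) for p in range(40000, 40004)]
for mode in ("active-backup", "balance-slb", "balance-tcp"):
    print(mode, [pick_uplink(mode, mac, vlan=100, flow=f) for f in flows])
```

With active-backup and balance-slb, every flow from that one MAC lands on the same uplink; with balance-tcp the flows may land on different uplinks depending on the hash.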

Keep in mind that when you configure a VLAN in Acropolis, it isn't programmed onto any OVS instance until a VM is provisioned on a host. When that happens, we configure a tap device on that host's OVS and program the VLAN onto that tap device.

That's a completely different construct from the typical vSwitch, where you program the vSwitch first, then attach VMs to pre-configured "port groups".
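Here is a small Python sketch of that "program on demand" behavior. It is a toy model only: the `Cluster` class, the `tap-<vm>` naming, and the method names are invented for illustration and are not Acropolis APIs.

```python
from collections import defaultdict


class Cluster:
    """Toy model: VLANs are defined cluster-wide, programmed per tap on demand."""

    def __init__(self):
        self.networks = {}                  # network name -> VLAN ID
        self.host_taps = defaultdict(dict)  # host -> {tap name: VLAN ID}

    def create_network(self, name, vlan_id):
        # Defining the network does not touch any host's bridge.
        self.networks[name] = vlan_id

    def provision_vm(self, host, vm_name, network):
        # Only at this point is a tap created on that host and tagged.
        tap = f"tap-{vm_name}"              # hypothetical naming
        self.host_taps[host][tap] = self.networks[network]
        return tap


cluster = Cluster()
cluster.create_network("prod", 100)
print(dict(cluster.host_taps))              # still empty: nothing programmed

cluster.provision_vm("host-a", "vm1", "prod")
print(dict(cluster.host_taps))              # host-a now has one tagged tap
```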

Traffic shaping is not yet available. If you have a use case for it, please submit a support ticket with priority RFE (Request for Enhancement), so we can track demand for the feature.

Thanks. How many VLANs can we create on a single host? What is the maximum number of VLANs allowed?

Can you please confirm if traffic shaping has been made available to Acropolis Open vSwitch? Thank you.

Jon,

Thank you for your quick reply. My organization is new to Nutanix and HCI, my apologies if I'm asking basic questions...

We are a VMware shop but one of the clusters we're building is AHV only. Since Network I/O Control or traffic shaping is not currently available on AHV Open vSwitch, what recommendation(s) do you provide your customers in handling VM live migrations since it could potentially saturate the 10Gb link (as we've seen in VMware vMotion events) that's also carrying data and replication traffic? Or is this not an issue with Nutanix as you've illustrated in your initial reply to my question? Thanks again.

Jon,

We've decided to use only the 2x10Gb adapters for our deployment and will be using OVS balance-slb LB policy. With this configuration, is it possible to pin the Live Migration traffic, management traffic, etc. to a particular host NIC? If so, what happens to the pinning assignment when a link fails and when the link comes back online? I understand Nutanix wants to keep things simple but just wondering if this option is available.

Again, I'd like to express my sincere gratitude for all the information you've provided.

No, there isn't the same construct of pinning in OVS (at least what we expose on the ntnx side). All of those traffic types will exist on the same bridge within OVS.

Happy to help.

- jon

Jon, how about ERSPAN, does Open vSwitch support it? If not, what would be an alternate solution? Thanks.

Check out the general OVS product level FAQ here:

http://docs.openvswitch.org/en/latest/faq/configuration/

TL;DR: no, OVS doesn't support ERSPAN, but it does have some other tunneling technologies. Either way, we don't have that particular tunneling technology plumbed into our side, so we can't set up that tunnel automatically, etc.

Can we set up the GRE tunnel manually? In doing so, will this be a supported configuration and can we ask Nutanix support to assist us in troubleshooting set up or configuration issues?

Technically yes, but no, it would not be supported, and we really wouldn't recommend it.

Doing an unsupported change like that would very likely break every time you do any sort of operation on a given VM, like power on/power off, migration, high availability restarts, cloning, etc. This is because it would be a change that our control plane didn't program in, so the control plane would just override it as it went about its business. That's the best case. Worst case, we haven't tested it, so we don't know what unintended side effects there might be.
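A toy illustration of why a manual change tends not to survive, written in Python. This is an assumption-laden sketch, not how the Nutanix control plane is implemented: it simply models a controller that rebuilds a bridge's port configuration from its own view of the world, so anything it did not program (the hypothetical `gre0` port here) disappears on the next operation.

```python
# What the control plane believes should exist on the bridge.
desired = {"tap0": {"vlan": 100}}

# What is actually on the host bridge right now.
actual = {"tap0": {"vlan": 100}}

# A manual, unsupported change made directly on the host (hypothetical port).
actual["gre0"] = {"type": "gre", "remote_ip": "192.0.2.10"}


def reprogram(desired_state):
    """Rebuild the port config purely from the control plane's view."""
    return {port: dict(cfg) for port, cfg in desired_state.items()}


# Any VM operation (power cycle, migration, HA restart, clone) triggers this.
actual = reprogram(desired)
print("gre0" in actual)   # the manual port is gone
```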

That said - Could you expand on what you're hoping to accomplish here? I know what tech you're talking about, but I'm wondering what your specific use case is, so I can take it back to the team here.

Here's our use case... 2 VMs on the same host, on the same network segment talking to each other. How do we capture traffic between these 2 VMs?

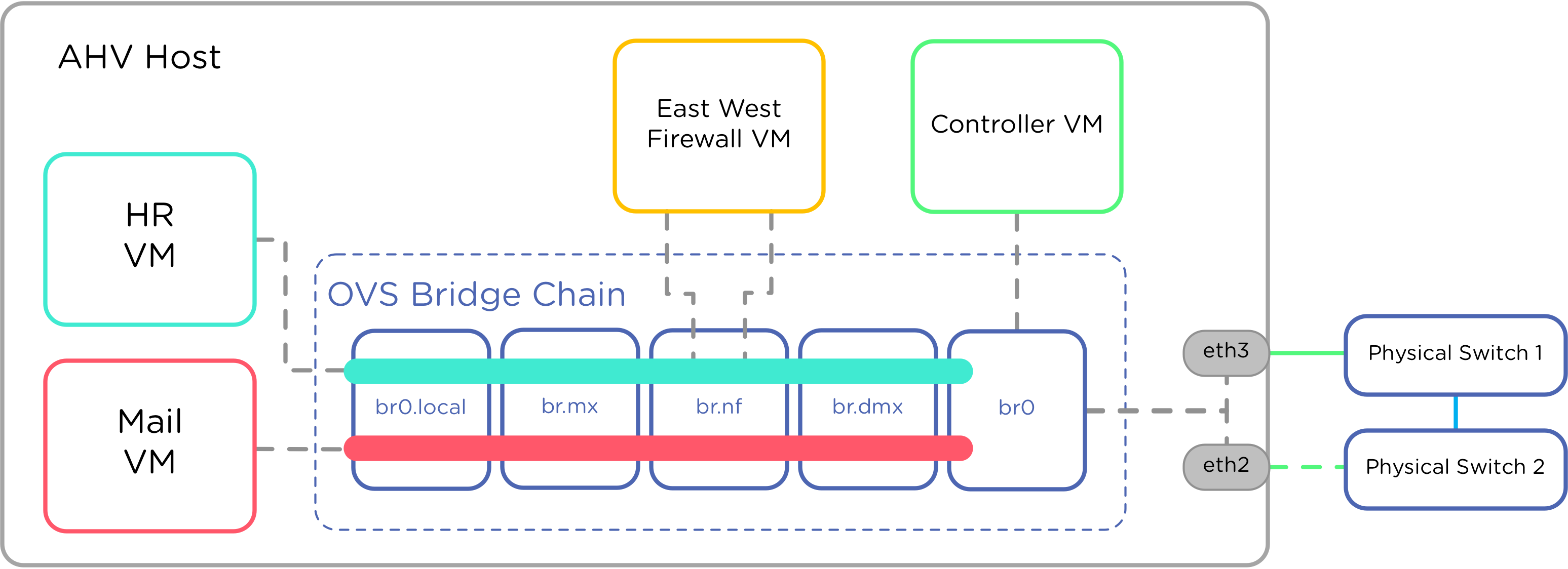

Network function chains can do this today in AHV. You would create a tap mode network function VM and put it in the network that these VMs use. This would allow you to capture traffic between VMs on the same "Network" regardless of whether or not they were on the same host. All traffic to and from a VM MUST flow through the network function chain when it's enabled.

https://portal.nutanix.com/#/page/docs/details?targetId=AHV-Admin-Guide-v55:ahv-ahv-integrate-with-network-functions-intro-c.html

I'm working on a blog post to cover this use case. Here is an image to show how it would work. You can do an inline port or a tap port.

Thank you Jason. I have a few questions...

Currently, we're sending the captured traffic to our Viavi appliance. Is it possible to do the same with the Network Function VM? Are the NFVs running Linux, and are they accessible via the console (or any other means) and managed using a CLI? Is ERSPAN supported by the NFVs? Thanks again.

Depends on where you're capturing the traffic from, where you're sending it to, and how you're sending it.

The NFV I referred to is a special VM that runs on every single AHV host in the cluster. You provision this VM and mark it as an agent VM. Then you add it to a network function chain. This VM can run any OS that's supported on AHV, and you can decide whether to hook up a single interface as a tap, or multiple interfaces as inline.

This NFV VM can receive, inspect, and capture in tap mode. In inline mode it can do these functions AND decide to reject or transmit the traffic. In the example diagram above, imagine that VM as a Palo Alto Networks VM-Series firewall. I've also used the Snort IDS in my own lab.

With this type of NFV configured in a network function chain, you can only capture traffic sent or received by VMs running on AHV. You cannot capture traffic sent by physical hosts, or send in ERSPAN type traffic to the NFV VM.
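The tap versus inline distinction can be sketched in a few lines of Python. This is a toy model with made-up names (`deliver`, `capture_and_block_telnet`), not anything from the AHV API: in tap mode the network function sees every frame but cannot affect delivery, while in inline mode its verdict decides whether the frame goes through.

```python
def deliver(frames, mode, network_function):
    """Pass frames through a network function in 'tap' or 'inline' mode."""
    if mode not in ("tap", "inline"):
        raise ValueError(f"unknown mode: {mode}")
    delivered = []
    for frame in frames:
        allowed = network_function(frame)   # the function always sees the frame
        if mode == "tap" or allowed:
            delivered.append(frame)         # tap ignores the verdict
    return delivered


captured = []


def capture_and_block_telnet(frame):
    """Example function: record every frame, disallow destination port 23."""
    captured.append(frame)
    return frame["dport"] != 23


frames = [
    {"src": "vm1", "dst": "vm2", "dport": 443},
    {"src": "vm1", "dst": "vm2", "dport": 23},
]

print(len(deliver(frames, "tap", capture_and_block_telnet)))     # both delivered
print(len(deliver(frames, "inline", capture_and_block_telnet)))  # telnet dropped
print(len(captured))                                             # all frames were seen
```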

If you set up a regular VM on AHV, you can use it to receive ERSPAN traffic from outside sources, since all that's required is the IP address of the VM. It's up to you to decide what software you want to install inside this VM. You could use something as simple as tcpdump if you wanted, or you could install a VM with software from a third-party vendor for analyzing traffic.

When we say network function VM, in your case we'd be referring to Viavi. It would have to be running on the same host as the system(s) you want to capture traffic from.

To be clear, this isn't some special VM we're providing. The chaining feature in AHV allows you to put in either "tap mode" devices, where you get a local mirror, or in-line mode devices, which would be like an IDS/IPS/firewall type setup.
