In this series, we’ve learned Nutanix provides more usable Capacity along with greater capacity efficiency, flexibility, resiliency and performance when using Deduplication & Compression as well as Erasure Coding.

We’ve also learned Nutanix provides far easier and superior storage scalability and greatly reduces the impact from Drive failures.

We then switched gears and covered heterogeneous cluster support and learned how critical this is to an HCI platform’s ability to scale and deliver a strong ROI without rip and replace or the creation of silos.

In this part of the blog series, we cover each product’s I/O path for write operations with mirroring (a.k.a. FTT1 for vSAN and RF2 for Nutanix).

I will be publishing a separate detailed comparison between deploying software-defined storage “In-Kernel” vs “Controller VM”; this post will focus on how the traffic traverses and utilises the cluster.

Let’s assume a brand new 4 node cluster for both vSAN and Nutanix ADSF and a single VM with a 600GB vDisk. The value of 600GB was chosen for two reasons.

- The VMware article I reference later in this document used this size

- 600GB means vSAN will split the disk into multiple objects, which is to vSAN’s advantage (<255GB would mean a single object).

Starting with vSAN:

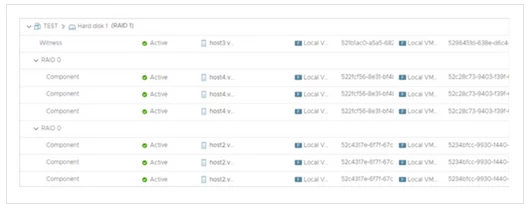

vSAN will split the vDisk into 3 objects due to the 255GB object size limit, meaning we will have 3 objects with 3 replicas along with a witness.
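The split count follows directly from the size limit. Here is a minimal sketch of that arithmetic; the function name `split_count` is my own, for illustration only, and is not part of any vSAN tooling.

```python
import math

MAX_OBJECT_GB = 255  # object size limit quoted in the text


def split_count(vdisk_gb: int, max_object_gb: int = MAX_OBJECT_GB) -> int:
    """Number of pieces a vDisk is split into under a per-object size limit."""
    return math.ceil(vdisk_gb / max_object_gb)


print(split_count(600))  # 3
print(split_count(200))  # 1
```

A 600GB vDisk needs three pieces, while anything at or below the limit stays as one.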

The objects will be placed on the node where the VM is hosted and on a single other node as shown below. (Screenshot courtesy of URL below).

vSAN Write Path Summary:

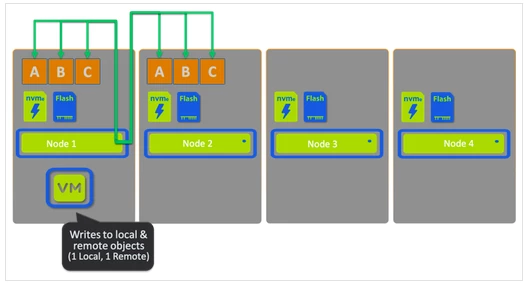

In the best case scenario, if the VM has not moved from the host it was created on OR it has been moved back onto the original host, one write will be to the local objects and one write will be to the remote objects.

Scenario 1: vSAN

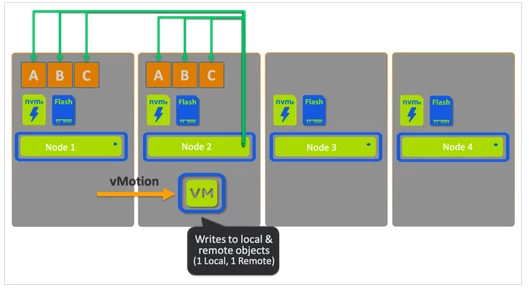

If the VM is vMotion’d manually or via DRS and ends up by chance on Node 2, it will have the same optimal write path as shown below.

Scenario 2: vSAN

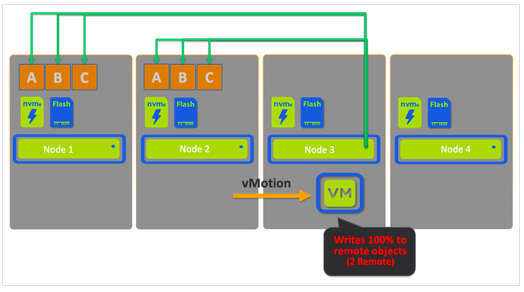

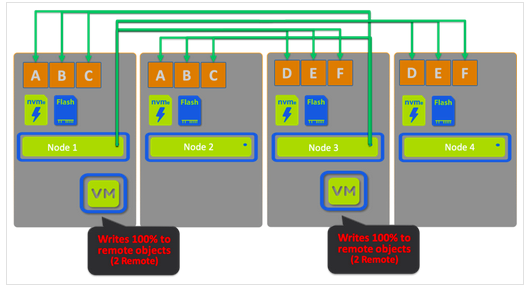

If the VM is on a host where no objects are hosted (50% chance in a 4 node cluster), all writes will be performed remotely across the network the same as they would in a traditional SAN environment as shown below.

Scenario 3: vSAN

These scenarios highlight that even if we accept that vSAN’s “In-kernel” architecture is the most optimal (or shortest) IO path, in cases where IO is remote, it would be fair to say that theoretical advantage is eliminated.

Let’s dive a little deeper and see what happens as VMs move around a cluster (as they typically do).

Let’s use a simple example with two VMs which have moved around due to maintenance or DRS balancing the cluster. They’ve each ended up on a node which does not host any of their objects. How does the IO path look for these VMs?

The image below highlights the problem: we now have two VMs with 100% of their write I/O going remotely. To make matters worse, Node 1 and Node 3 are each serving 2 writes out and 2 writes in over the network.

Scenario 4: vSAN

As previously mentioned, as a cluster grows, the chance of VMs being hosted where their objects are located decreases, so this scenario becomes more and more likely, putting unnecessary resource overheads on the environment.

If the environment is configured with Failures to Tolerate 2 (FTT2), then the overhead of a VM’s IO being 100% remote will also increase.
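To put rough numbers on that overhead, here is a simple sketch that counts how many replica writes cross the network for each guest write. It assumes mirroring writes FTT + 1 data replicas and ignores witness traffic; `network_writes` is a hypothetical helper, not a vSAN API.

```python
def network_writes(ftt: int, vm_on_replica_host: bool) -> int:
    """Replica writes sent over the network for one guest write.

    Assumes mirroring keeps ftt + 1 data replicas and that witness
    components carry no data writes (a simplification).
    """
    replicas = ftt + 1
    # If the VM runs on a host holding a replica, one write stays local.
    return replicas - 1 if vm_on_replica_host else replicas


for ftt in (1, 2):
    for local in (True, False):
        print(f"FTT{ftt}, VM on replica host={local}: "
              f"{network_writes(ftt, local)} network write(s)")
```

Under these assumptions, a fully remote VM goes from 2 network writes per guest write at FTT1 to 3 at FTT2.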

Scenario 5: vSAN

When vSAN is under maintenance and VMs move throughout the cluster, it’s possible they will land on a node hosting one or more of the VM’s objects and write I/O may be optimal, but as we’ve mentioned, this becomes more unlikely as the cluster size increases.

I refer to this as “Data locality by luck”.

vSAN IO Path Summary:

* The odds of the optimal scenario decrease as the cluster size increases. For an 8 node cluster, the chance drops to 25% and for a 32 node cluster it’s just 6.25%.

As a result, during maintenance you will likely end up in a situation where the majority of write I/O is performed over the network potentially causing contention with other VMs as explained earlier.
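The percentages quoted in the summary above can be reproduced with a one-line calculation. This sketch assumes the VM is equally likely to land on any node and that its data objects live on exactly two hosts (the witness is excluded); `locality_chance` is an illustrative name only.

```python
def locality_chance(nodes: int, replica_hosts: int = 2) -> float:
    """Chance a VM lands on a host holding one of its replicas.

    Assumes uniform placement of the VM across all nodes and that
    replicas sit on `replica_hosts` distinct hosts (2 for FTT1).
    """
    return replica_hosts / nodes


for n in (4, 8, 32):
    print(f"{n} nodes: {locality_chance(n):.2%}")
# 4 nodes: 50.00%
# 8 nodes: 25.00%
# 32 nodes: 6.25%
```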

Note: When using the default vSAN setting of “Ensure accessibility” during maintenance, data is not fully compliant with the VM storage policy as explained in the vSAN 6.7 documentation (Place a Member of vSAN Cluster in Maintenance Mode) below:

Typically, only partial data evacuation is required. However, the virtual machine might no longer be fully compliant to a VM storage policy during evacuation. That means, it might not have access to all its replicas. If a failure occurs while the host is in maintenance mode and the Primary level of failures to tolerate is set to 1, you might experience data loss in the cluster.

Reference: https://docs.vmware.com/en/VMware-vSphere/6.7/com.vmware.vsphere.virtualsan.doc/GUID-521EA4BC-E411-47D4-899A-5E0264469866.html

Duncan Epping, a Chief Technologist in the Office of the CTO of the HCI BU at VMware, wrote an article titled: VSAN 6.2 : Why going forward FTT=2 should be your new default.

I agree with Duncan’s recommendation for vSAN, and the above maintenance scenario where data is at risk highlights the need for FTT2 on vSAN.

Note: Nutanix ADSF always maintains write I/O integrity by default with RF2 (FTT1) which is a major capacity, resiliency and performance advantage for ADSF.

I will cover more critical resiliency considerations in a future post.

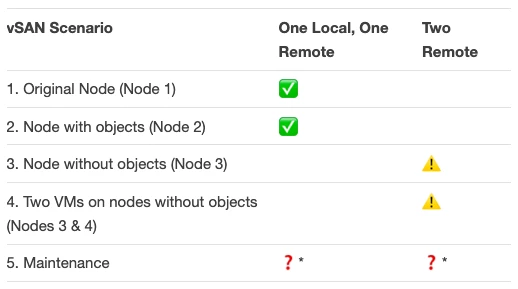

Now let’s review Nutanix ADSF:

Here we see a VM with the same configuration as the VM on vSAN: one 600GB virtual disk. Nutanix ADSF doesn’t have the concept of large, inflexible objects. All data, regardless of how many vDisks, is split into 1MB extents and 4MB extent groups.
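To give a feel for the difference in granularity, here is a rough sketch that counts how many extents and extent groups a fully written 600GB vDisk would map to. It assumes binary units (1GB = 1024MB) and a completely written disk, so treat the result as an upper bound; `extent_counts` is a name I made up for this example.

```python
import math

EXTENT_MB = 1        # extent size quoted in the text
EXTENT_GROUP_MB = 4  # extent group size quoted in the text


def extent_counts(vdisk_gb: int) -> tuple[int, int]:
    """Return (extents, extent_groups) for a fully written vDisk.

    Assumes 1GB = 1024MB and that every byte of the vDisk is written.
    """
    total_mb = vdisk_gb * 1024
    extents = math.ceil(total_mb / EXTENT_MB)
    groups = math.ceil(total_mb / EXTENT_GROUP_MB)
    return extents, groups


print(extent_counts(600))  # (614400, 153600)
```

Compare that with the handful of large objects in the vSAN example: many small units are what allow data to be placed and moved at a fine grain.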

1 replica local, 1 replica remote w/ Intelligent Replica placement.

We see in all cases, 1 replica is written to the host running the VM and the other replica is written and distributed throughout the cluster.

Nutanix is delivering capacity efficiency and performance in the write path.

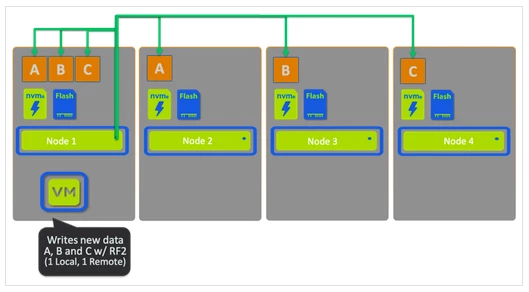

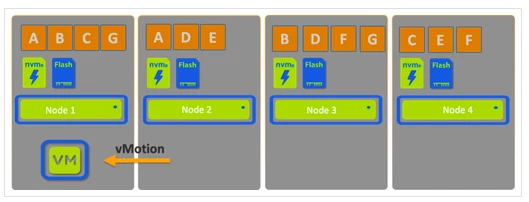

Next let’s vMotion the VM from Node 1 to Node 2.

1 replica local, 1 replica remote w/ Intelligent Replica placement.

We still see in all cases, 1 replica is written to the host running the VM and the other replica is written and distributed throughout the cluster.

Nutanix continues to deliver capacity efficiency and performance in the write path even after a vMotion.

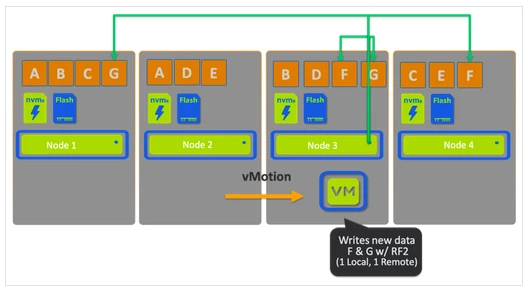

Now let’s vMotion the VM to Node 3.

The write path stays consistent with 1 replica local, 1 replica remote w/ Intelligent Replica placement.

Now let’s vMotion the VM back to the original host.

The resulting situation is 4 out of 7 pieces of data are local, with 3 being remote which, if accessed, will be localised. If the remote data is never accessed, it will remain remote and have no impact on the VM’s performance.

The key point here is regardless of where the VM was moved, the Write path ALWAYS wrote 1 replica locally and 1 remotely delivering consistent write performance.

The Write path also used Intelligent replica placement to ensure the cluster was as balanced as possible. We can see from this simple example, nodes have either 3 or 4 pieces of data and all nodes participated in the write IO in a distributed manner.
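The walkthrough above can be approximated with a small simulation. This is only a sketch: the "least-used remote node" policy and the sequence of VM locations are my assumptions for illustration and are not the actual ADSF placement algorithm.

```python
from collections import Counter

NODES = [1, 2, 3, 4]


def place_write(local_node, usage):
    """Return (local, remote) replica nodes for one RF2 write.

    The remote replica goes to the least-used other node, with ties
    broken by node number. This policy is an illustrative assumption.
    """
    remote = min((n for n in NODES if n != local_node),
                 key=lambda n: (usage[n], n))
    usage[local_node] += 1
    usage[remote] += 1
    return local_node, remote


usage = Counter()
# Hypothetical sequence: the node hosting the VM at the time of each of
# 7 writes (Node 1, then vMotion to 2, then 3, then back to 1).
vm_location = [1, 1, 2, 2, 3, 1, 1]
placements = [place_write(node, usage) for node in vm_location]

print(placements)
print(dict(sorted(usage.items())))  # {1: 4, 2: 4, 3: 3, 4: 3}
```

With this hypothetical sequence, every write keeps one replica on the node running the VM, each node ends up with 3 or 4 pieces of data, and 4 of the 7 pieces are local once the VM is back on Node 1.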

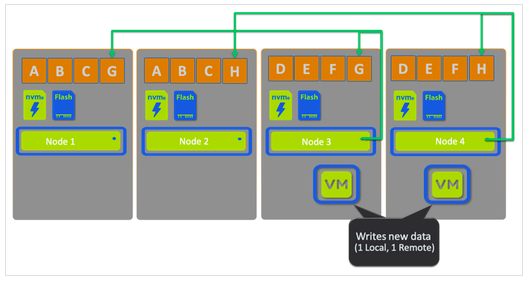

What about the scenario I highlighted with vSAN where two VMs are moved to hosts they were not created on?

Nutanix ADSF IO Path Summary:

What we see below is regardless of where the VM/s are within the cluster, the write path remains the same. One replica is written to the node running the VM and the 2nd (or 3rd in the case of RF3) replica is intelligently distributed throughout the cluster.

Consistency is key, and that is exactly what Nutanix’s unique Data Locality delivers for Write I/Os.

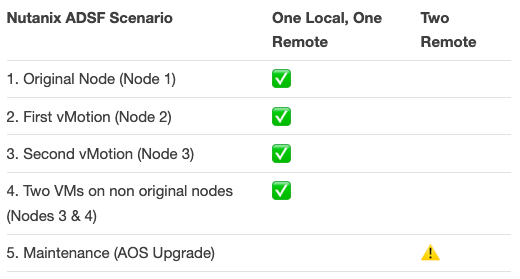

Are there any scenarios where Nutanix write I/O won’t be optimal with 1 local and 1 remote (2 remote for RF3)?

Yes, there are three scenarios:

- If the local node is FULL

- If the local node’s CVM is unavailable for any reason

- Overwrites (which are done “in-place”)

In the first two cases, all write (and read) I/O will be remote.

With overwrites, random I/O is still written locally to the oplog for optimal performance. When IO is drained to the extent store, this operation is done in place which may be local or remote. If the overwritten data is read, it will be localised for optimal future overwrite (and read) operations.

So in short, the worst case scenario (all IO remote) for Nutanix ADSF is a typical scenario for vSAN.

The CVM can be unavailable as a result of planned maintenance such as AOS upgrades. In the unlikely event of a CVM being unexpectedly offline, VMs continue to run and IO will be served remotely but, critically, intelligent replica placement still applies. Once the CVM comes back online, the optimal write path resumes.
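The first two exceptions can be summarised as a small decision function. This is a simplified sketch for new writes only (overwrites, which are done in place, are deliberately left out), and `write_targets` is a hypothetical helper rather than anything exposed by ADSF.

```python
def write_targets(local_full: bool, local_cvm_up: bool, rf: int = 2) -> dict:
    """Count local vs. remote replicas for a new write.

    One replica is local when the local node has space and its CVM is
    available; otherwise all rf replicas are written remotely.
    """
    local_ok = local_cvm_up and not local_full
    local = 1 if local_ok else 0
    return {"local": local, "remote": rf - local}


print(write_targets(local_full=False, local_cvm_up=True))   # {'local': 1, 'remote': 1}
print(write_targets(local_full=True, local_cvm_up=True))    # {'local': 0, 'remote': 2}
print(write_targets(local_full=False, local_cvm_up=False))  # {'local': 0, 'remote': 2}
```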

Next up, we’ll cover the Read I/O path for vSAN and Nutanix ADSF.

Summary

- vSAN’s write path varies significantly depending on where a VM resides within the cluster.

- vSAN’s static, object-based write path does not allow for incoming IO to be protected during maintenance without bulk movements (host evacuation) of data.

- Nutanix ADSF always maintains write I/O integrity based on the configured storage policy (resiliency factor).

- Nutanix ADSF delivers a consistent write path regardless of where a VM resides or is moved to within a cluster.

- Nutanix ADSF’s worst case scenario of two write replicas being sent remotely (during maintenance or CVM failure) is a typical scenario for vSAN.

- Nutanix ADSF enjoys data locality by design, not by luck.

This article was originally published at http://www.joshodgers.com/2020/02/27/write-i-o-path-ftt1-rf2-comparison-nutanix-vs-vmware-vsan/

© 2020 Nutanix, Inc. All rights reserved. Nutanix, the Nutanix logo and all Nutanix product, feature and service names mentioned herein are registered trademarks or trademarks of Nutanix, Inc. in the United States and other countries. All other brand names mentioned herein are for identification purposes only and may be the trademarks of their respective holder(s). This post may contain links to external websites that are not part of Nutanix.com. Nutanix does not control these sites and disclaims all responsibility for the content or accuracy of any external site. Our decision to link to an external site should not be considered an endorsement of any content on such a site.
